Going off the LSTM documentation: https://pytorch.org/docs/stable/generated/torch.nn.LSTM.html#torch.nn.LSTM

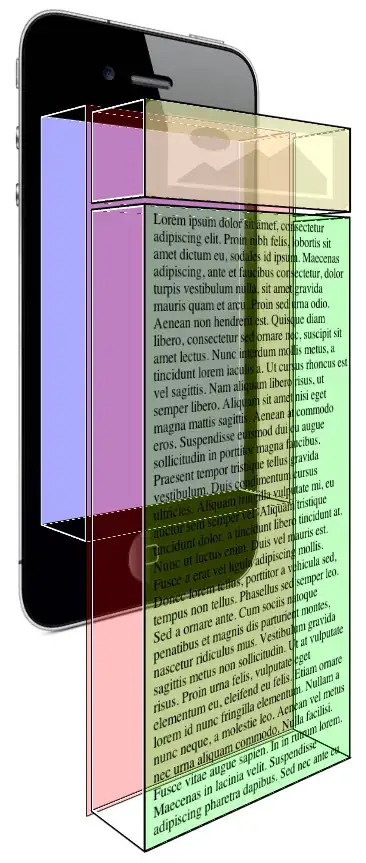

I will be referring to the picture above for the rest of my post.

I think I understand what input_size is:

input_size - the number of input features per time-step:

So if the input at each time step is a vector, such as a word embedding (represented as X_t in the picture above), input_size is the length of that vector.
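A minimal sketch of that reading of input_size. The batch size, sequence length, embedding dimension and hidden_size below are arbitrary values chosen for illustration. Each x_t is one 10-dimensional embedding, so input_size must be 10:

```python
import torch
import torch.nn as nn

# Assumed sizes for illustration only: 2 sentences, 5 tokens each,
# 10-dimensional word embeddings (so input_size = 10).
batch_size, seq_len, embedding_dim = 2, 5, 10

lstm = nn.LSTM(input_size=embedding_dim, hidden_size=20, batch_first=True)

# With batch_first=True the input shape is (batch, time steps, input_size).
x = torch.randn(batch_size, seq_len, embedding_dim)
output, (h_n, c_n) = lstm(x)

# x_t for t = 0 is one embedding vector per sentence in the batch.
print(x[:, 0, :].shape)  # torch.Size([2, 10])
```

Passing embeddings of any other length would raise an error, because input_size fixes the width of the per-time-step input.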

What is hidden_size?

According to this stack overflow post:

https://stackoverflow.com/a/50848068/14382150

hidden_size is referred to as the number of nodes in one LSTM cell, but he does not explain very well what he means by a node.
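One way to make "nodes" concrete is to look at the weight shapes PyTorch documents for nn.LSTM. The sketch below assumes a single-layer LSTM with input_size=10 and hidden_size=20 (arbitrary values). The four gates (input, forget, cell, output) are stacked into one matrix, so each gate has hidden_size rows, i.e. hidden_size units:

```python
import torch.nn as nn

# Arbitrary sizes for illustration.
input_size, hidden_size = 10, 20
lstm = nn.LSTM(input_size=input_size, hidden_size=hidden_size)

# Four gates stacked along dim 0, each with hidden_size rows.
print(lstm.weight_ih_l0.shape)  # torch.Size([80, 10]) = (4*hidden_size, input_size)
print(lstm.weight_hh_l0.shape)  # torch.Size([80, 20]) = (4*hidden_size, hidden_size)
print(lstm.bias_ih_l0.shape)    # torch.Size([80])     = (4*hidden_size,)
```

Under this reading, a "node" is one of the hidden_size units, and h_t and c_t are vectors with one entry per unit.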

Can someone please give a clear explanation of what hidden_size refers to? Is it just the number of time steps the LSTM block is unrolled for?
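A quick way to test the "number of time steps" hypothesis: run the same LSTM (hidden_size=20, an arbitrary choice) on sequences of different lengths. This is a sketch, not an authoritative explanation. The number of time steps comes from the input, while hidden_size only sets the size of each h_t:

```python
import torch
import torch.nn as nn

lstm = nn.LSTM(input_size=10, hidden_size=20, batch_first=True)

for seq_len in (3, 50):
    x = torch.randn(1, seq_len, 10)  # 1 sequence with seq_len time steps
    output, (h_n, c_n) = lstm(x)
    # output holds one 20-dim hidden vector per time step;
    # h_n is the hidden vector after the last time step.
    print(seq_len, output.shape, h_n.shape)
# 3 torch.Size([1, 3, 20]) torch.Size([1, 1, 20])
# 50 torch.Size([1, 50, 20]) torch.Size([1, 1, 20])
```

The same weights handle 3 or 50 steps, so the amount of unrolling is independent of hidden_size.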

Also, what size should it be? Is it a hyperparameter, similar to choosing the number of neurons in a layer of a fully connected neural network?
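If hidden_size behaves like a layer width, model capacity should grow with it. The sketch below assumes a single-layer, unidirectional nn.LSTM with input_size=10 and a few arbitrary candidate widths, and compares PyTorch's parameter count against 4·h·(input_size + h) weights plus two bias vectors of length 4·h:

```python
import torch.nn as nn

def count_params(module: nn.Module) -> int:
    # Total number of trainable scalars in the module.
    return sum(p.numel() for p in module.parameters())

input_size = 10
for hidden_size in (16, 64, 256):  # arbitrary candidate widths
    lstm = nn.LSTM(input_size=input_size, hidden_size=hidden_size)
    # weight_ih + weight_hh, plus bias_ih and bias_hh.
    expected = 4 * hidden_size * (input_size + hidden_size) + 2 * 4 * hidden_size
    print(hidden_size, count_params(lstm), expected)
```

The count grows roughly quadratically in hidden_size, which is why it is usually tuned like the width of a dense layer.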

I have looked at multiple posts and videos, but I can't seem to understand this. Any help is appreciated.