I use Scrapy and Scrapyd, and I send some custom settings to my spider through the Scrapyd API (using Postman).

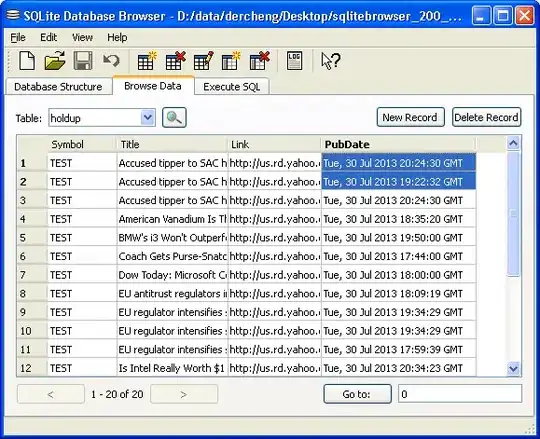

Photo of the request:

For example, I send the value of `start_urls` through the API, and that works correctly.
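For context, here is a minimal sketch of what such a Postman request carries, assuming the spider is scheduled with a POST to Scrapyd's `schedule.json` endpoint (default port 6800). The project name `kavoush` is assumed from the import paths below, and `example.com` is a hypothetical value. Scrapyd keeps its own parameters (such as `project`, `spider`, `setting`, `jobid`, `_version`) and passes every other field to the spider's `__init__` as a keyword argument, always as a string. The split at the end is a simplified model of that behavior, not Scrapyd's code.

```python
from urllib.parse import urlencode, parse_qs

# Hypothetical form fields for POST http://localhost:6800/schedule.json.
# "kavoush" is assumed to be the project name; host_name and num_con_req
# are the spider arguments read in PageSpider.__init__.
form_fields = [
    ("project", "kavoush"),
    ("spider", "github"),
    ("host_name", "example.com"),
    ("num_con_req", "8"),
]

# The application/x-www-form-urlencoded body Postman would send.
body = urlencode(form_fields)
print(body)

# Simplified model: fields that are not Scrapyd's own parameters become
# spider keyword arguments, and they arrive as strings (hence int() later).
reserved = {"project", "spider", "setting", "jobid", "_version"}
spider_kwargs = {k: v[0] for k, v in parse_qs(body).items() if k not in reserved}
print(spider_kwargs)
```

This is why `num_con_req` reaches the spider as the string `'8'` and has to be converted with `int()`.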

The problem is that I cannot apply the settings I send through the API to my crawl.

For example, I send a `CONCURRENT_REQUESTS` value (as the `num_con_req` argument), but it is not applied.

If I could access `self` inside the `update_settings` method, the problem would be solved, but `update_settings` is a classmethod, so trying to use `self` there raises an error.
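To illustrate why `self` is not available there: as far as I understand Scrapy's crawler, `update_settings` is called on the spider *class* while the crawler is being set up, and the spider instance (and therefore `__init__`) is only created afterwards, when the crawl starts. The sketch below is a plain-Python model of that call order, not Scrapy's source; `FakeCrawler` and `FakeSpider` are hypothetical names, and the default value 16 mirrors Scrapy's default `CONCURRENT_REQUESTS`.

```python
# Plain-Python model of the call order (NOT Scrapy's actual code).
# FakeCrawler and FakeSpider are hypothetical, for illustration only.
call_order = []
my_settings = {}


class FakeSpider:
    custom_settings = None

    def __init__(self, **kwargs):
        call_order.append("__init__")
        my_settings["CONCURRENT_REQUESTS"] = int(kwargs["num_con_req"])

    @classmethod
    def update_settings(cls, settings):
        # Called on the class: there is no instance (no self) yet,
        # and __init__ has not filled my_settings.
        call_order.append("update_settings")
        settings.update(my_settings)


class FakeCrawler:
    def __init__(self, spidercls):
        self.settings = {"CONCURRENT_REQUESTS": 16}
        # Settings are merged from the spider class first...
        spidercls.update_settings(self.settings)
        self.spidercls = spidercls

    def crawl(self, **kwargs):
        # ...and the spider instance is created only afterwards.
        self.spider = self.spidercls(**kwargs)


crawler = FakeCrawler(FakeSpider)
crawler.crawl(num_con_req="8")
print(call_order)
print(crawler.settings)
```

In this model the value from `num_con_req` ends up in `my_settings`, but only after the settings were already merged, so the crawler keeps the default.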

My code:

```python
from scrapy.spiders import CrawlSpider, Rule
from scrapy.loader import ItemLoader
from kavoush.lxmlhtml import LxmlLinkExtractor as LinkExtractor
from kavoush.items import PageLevelItem

my_settings = {}


class PageSpider(CrawlSpider):
    name = 'github'

    def __init__(self, *args, **kwargs):
        self.start_urls = kwargs.get('host_name')
        self.allowed_domains = [self.start_urls]
        my_settings['CONCURRENT_REQUESTS'] = int(kwargs.get('num_con_req'))
        self.logger.info(f'CONCURRENT_REQUESTS? {my_settings}')
        self.rules = (
            Rule(LinkExtractor(allow=(self.start_urls), deny=(r'\.webp'), unique=True),
                 callback='parse',
                 follow=True),
        )
        super(PageSpider, self).__init__(*args, **kwargs)

    # custom_settings = {
    #     'CONCURRENT_REQUESTS': 4,
    # }

    @classmethod
    def update_settings(cls, settings):
        cls.custom_settings.update(my_settings)
        settings.setdict(cls.custom_settings or {}, priority='spider')

    def parse(self, response):
        loader = ItemLoader(item=PageLevelItem(), response=response)
        loader.add_xpath('page_source_html_lang', "//html/@lang")
        yield loader.load_item()

    def errback_domain(self, failure):
        self.logger.error(repr(failure))
```

Expectation:

How can I change Scrapy settings through the Scrapyd API using Postman?

I used `CONCURRENT_REQUESTS` as an example above; in some cases, up to 10 settings may need to be changed through the API.
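For reference, Scrapyd's `schedule.json` documents a `setting` parameter that takes a `NAME=VALUE` pair and may be repeated, which is one way to send several settings in a single request (in Postman, one `setting` row per value). The helper below is a hypothetical sketch that builds those form fields from a dict; `schedule_form`, the override values, and `example.com` are illustrative assumptions, not part of the original code.

```python
from urllib.parse import urlencode

# Hypothetical per-job overrides (any number of settings can be listed).
overrides = {
    "CONCURRENT_REQUESTS": 8,
    "DOWNLOAD_DELAY": 0.5,
    "DEPTH_LIMIT": 3,
}


def schedule_form(project, spider, overrides, **spider_args):
    """Sketch: build form fields for Scrapyd's schedule.json.

    Each override becomes its own repeated 'setting' field in NAME=VALUE
    form; extra keyword arguments become spider arguments.
    """
    fields = [("project", project), ("spider", spider)]
    fields += [("setting", f"{name}={value}") for name, value in overrides.items()]
    fields += [(key, str(value)) for key, value in spider_args.items()]
    return fields


fields = schedule_form("kavoush", "github", overrides, host_name="example.com")
# urlencode escapes the '=' inside each setting value as %3D.
print(urlencode(fields))
```

Keeping the settings in one dict means adding an eleventh setting is a one-line change rather than a new spider argument.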

Update:

If I remove `my_settings = {}` and `update_settings` and write the code as follows, running `scrapyd-deploy` fails with `KeyError: 'CONCURRENT_REQUESTS'`, because `CONCURRENT_REQUESTS` has no value at that moment.

The relevant part of the code for this scenario:

```python
class PageSpider(CrawlSpider):
    name = 'github'

    def __init__(self, *args, **kwargs):
        self.start_urls = kwargs.get('host_name')
        self.allowed_domains = [self.start_urls]
        my_settings['CONCURRENT_REQUESTS'] = int(kwargs.get('num_con_req'))
        self.logger.info(f'CONCURRENT_REQUESTS? {my_settings}')
        self.rules = (
            Rule(LinkExtractor(allow=(self.start_urls), deny=(r'\.webp'), unique=True),
                 callback='parse',
                 follow=True),
        )
        super(PageSpider, self).__init__(*args, **kwargs)

    custom_settings = {
        'CONCURRENT_REQUESTS': my_settings['CONCURRENT_REQUESTS'],
    }
```
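The `KeyError` can be reproduced without Scrapy: a class body runs once, when the module is imported, long before any `__init__` fills `my_settings`. Deployment most likely triggers that import because Scrapyd loads the project's spiders when a new version is uploaded (that part is my assumption). The snippet below is a plain-Python illustration, with the class reduced to the lines that matter.

```python
# Plain-Python illustration (no Scrapy needed): the class-level
# custom_settings dict is evaluated at class-definition (import) time,
# when my_settings is still empty.
my_settings = {}

error = None
try:
    class PageSpider:
        name = "github"

        def __init__(self, **kwargs):
            # Would fill my_settings, but only when an instance is created.
            my_settings["CONCURRENT_REQUESTS"] = int(kwargs["num_con_req"])

        # Runs immediately while the class body executes.
        custom_settings = {
            "CONCURRENT_REQUESTS": my_settings["CONCURRENT_REQUESTS"],
        }
except KeyError as exc:
    error = exc
    print(f"KeyError: {exc}")
```

The lookup fails before `__init__` could ever run, which matches the error seen during `scrapyd-deploy`.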

Thanks, everyone.