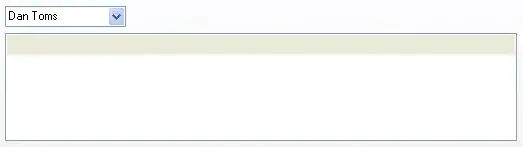

I am using Spark 3.2.1 to summarise high-volume data using joins. Spark's plan shows that a single executor was given 90GB of data to process after the AQEShuffleRead step, as shown in the plan. The number of shuffle partitions was also drastically reduced, from 900 to 8.
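For context, the relevant settings can be sketched as session-level Spark SQL `SET` statements. This assumes the 900 comes from `spark.sql.shuffle.partitions` and that the settings are applied per session rather than through `spark-submit` or `spark-defaults.conf`; AQE itself is on by default in Spark 3.2.

```sql
-- Assumption: the 900 shuffle partitions mentioned above were set explicitly.
SET spark.sql.shuffle.partitions=900;

-- AQE is enabled by default since Spark 3.2.0; shown here only for clarity.
SET spark.sql.adaptive.enabled=true;
```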

I tried setting `spark.sql.adaptive.coalescePartitions.enabled` to `false`. Below is the plan.

I thought the `row_number()` below was causing the issue, but removing it still produced a similar plan with AQE:

    , row_number() over (
        partition by payments_payment_reference, payments_payment_id, payments_payment_refund_reference, sale_or_refund
        order by payments_payment_id, payments_payment_refund_reference, create_date_ymd
      ) payments_fee_filter

The job writes a summary, so the output is only about 180MB in Parquet. I therefore do a repartition in the final step so that I am left with a single output file.
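A minimal sketch of what that final step could look like if written in Spark SQL with a repartition hint. The name `payments_summary` is hypothetical and stands in for the summarised result; the actual job may use the DataFrame `repartition` call instead.

```sql
-- Hypothetical view name; REPARTITION(1) adds a final shuffle into one partition,
-- so the write produces a single Parquet file.
SELECT /*+ REPARTITION(1) */ *
FROM payments_summary;
```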

Why is Spark behaving like this? How can I overcome this and distribute the load?