I have the following piece of code, and I am able to run it successfully as a DLT pipeline:

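```python
import dlt

# raw_db_name, source_table and target_table are defined earlier in the notebook;
# `spark` is provided by the Databricks runtime.

@dlt.table(
    name=source_table
)
def source_ds():
    return spark.table(f"{raw_db_name}.{source_table}")

### Create the target table definition
dlt.create_streaming_live_table(
    name=target_table,
    comment=f"Clean, merged {target_table}",
    #partition_cols=["topic"],
    table_properties={
        "quality": "silver"
    }
)
```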

If I try to view the table history (for time travel), I get an error. For example:

```sql
describe history my_db.employee_trasaction
```

Error:

```
AnalysisException: Cannot describe the history of a view.
```

I also need to set up a sync process so that these tables are available in Unity Catalog, and I am referring to this document (https://www.databricks.com/blog/2022/11/03/how-seamlessly-upgrade-your-hive-metastore-objects-unity-catalog-metastore-using).

When I checked the table properties, the type is shown as VIEW.
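To confirm the type from a notebook rather than the UI, one option is to run `DESCRIBE TABLE EXTENDED` and read the `Type` row from its detailed-information section. This is a minimal sketch, assuming the rows come from `spark.sql("DESCRIBE TABLE EXTENDED ...").collect()` and that each row is a `(col_name, data_type, comment)` tuple; the helper name `table_type` is hypothetical. It only looks after the `# Detailed Table Information` marker so that a data column that happens to be named `Type` is not mistaken for the table type.

```python
from typing import Iterable, Optional, Sequence

# Section header that DESCRIBE TABLE EXTENDED emits before the table metadata rows.
DETAIL_MARKER = "# Detailed Table Information"


def table_type(rows: Iterable[Sequence[Optional[str]]]) -> Optional[str]:
    """Return the table type (e.g. MANAGED, EXTERNAL, VIEW) or None if absent.

    Assumes each row is (col_name, data_type, comment), as returned by
    spark.sql("DESCRIBE TABLE EXTENDED <table>").collect().
    """
    in_detail = False
    for row in rows:
        col_name = (row[0] or "").strip()
        value = (row[1] or "").strip()
        if col_name == DETAIL_MARKER:
            # Everything after this marker is table metadata, not the column schema.
            in_detail = True
        elif in_detail and col_name == "Type":
            return value or None
    return None


# Hypothetical usage in a Databricks notebook:
# rows = spark.sql("DESCRIBE TABLE EXTENDED my_db.employee_trasaction").collect()
# print(table_type(rows))
```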

How do I make these tables Delta tables so that they are available for time travel and for Unity Catalog?

When I tried the SYNC command for external tables or views, I got errors saying that views are not supported.
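Because SYNC rejects views, a sync job would have to filter them out before issuing the command. The following is a minimal sketch that builds one `SYNC TABLE <target> FROM <source>` statement per non-view table, optionally with `DRY RUN` so that nothing is changed on the first pass. It assumes the table types were collected beforehand (for example with `DESCRIBE TABLE EXTENDED`). The helper `build_sync_statements`, the catalog name `main` and the table `raw_events` in the usage note are hypothetical. Other table types that SYNC may still reject are not filtered here; the dry run is where those would show up.

```python
from typing import Dict, List


def build_sync_statements(
    tables: Dict[str, str],
    source_schema: str,
    target_catalog: str,
    target_schema: str,
    dry_run: bool = True,
) -> List[str]:
    """Build one SYNC TABLE statement per non-view Hive metastore table.

    `tables` maps a table name to its type as reported by the metastore
    (e.g. "EXTERNAL", "MANAGED", "VIEW"). Views are skipped because SYNC
    reports them as unsupported.
    """
    statements = []
    for name, kind in sorted(tables.items()):
        if kind.upper() == "VIEW":
            continue  # SYNC does not accept views
        stmt = (
            f"SYNC TABLE {target_catalog}.{target_schema}.{name} "
            f"FROM hive_metastore.{source_schema}.{name}"
        )
        if dry_run:
            stmt += " DRY RUN"  # report what would happen without changing anything
        statements.append(stmt)
    return statements
```

In a notebook, the statements could then be executed with something like `for stmt in build_sync_statements({"raw_events": "EXTERNAL"}, "my_db", "main", "my_db"): spark.sql(stmt)`, reviewing the dry-run output before rerunning with `dry_run=False`.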

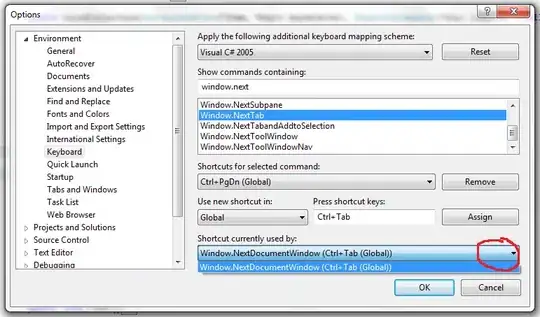

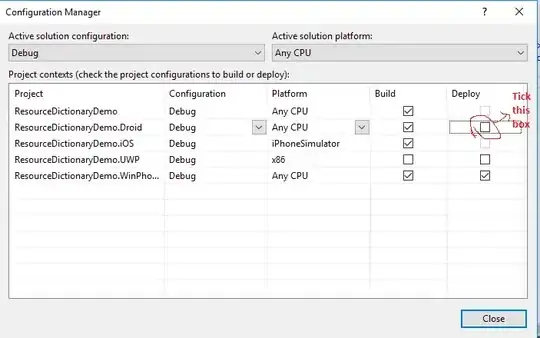

Screenshot of the SYNC command on a VIEW table: