I have an image captioning dataset in which each sample consists of an image and a list of captions.

- Each sample has one or more captions.

- The number of captions can be different for each sample.

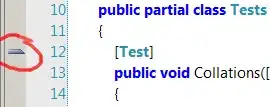

Here's a visual example:

I am using PyTorch and have created a custom Dataset and DataLoader to train the models and perform evaluation.

- For training, I randomly choose one caption from the list of available captions, then calculate the model output and the NLL loss between the model's output and the target.

- For evaluation, I want to calculate the loss between the model's output and a sampled caption, but also other metrics used in text generation tasks, such as BLEU and ROUGE. Those metrics accept multiple references, so I want to pass the list of all available captions for each sample.

What is the best way to make the Dataset and DataLoader handle both of these cases, i.e., provide one randomly chosen label for training and all the labels for multi-reference metrics?

- I tried adding a flag to the Dataset class, which would be set to `True` during validation and test. However, since each sample has a varying number of labels, the DataLoader cannot build the batch. One solution could be to iterate over the Dataset directly, but I think there must be a better solution.

```python
import json
import pathlib as pl
import random

from PIL import Image
from torch.utils.data import Dataset, DataLoader
from torchvision import transforms
from transformers import AutoProcessor

# NOTE: BaseDataLoader is defined elsewhere in my project and is not shown here.


class MyDataset(Dataset):
    def __init__(self, root, split, image_transform, processor):
        file = pl.Path(root) / '{}.json'.format(split)
        with open(file) as f:
            j = json.load(f)
        self.data = list(j.values())
        self.split = split
        self.image_transform = image_transform
        self.processor = processor

    def __len__(self):
        return len(self.data)

    def __getitem__(self, i):
        image_path = self.data[i]['img_url']
        image = Image.open(image_path).convert('RGB')
        labels = self.data[i]['visual_sentences']
        if self.image_transform is not None:
            image = self.image_transform(image)
        # randomly sample one visual sentence as the target text
        encoding = self.processor(images=image, text=random.sample(labels, 1),
                                  padding="max_length", return_tensors="pt")
        # remove batch dimension
        encoding = {k: v.squeeze(0) for k, v in encoding.items()}
        # add all the labels if not in training
        # (a list of strings whose length varies from sample to sample)
        if self.split != 'train':
            encoding['labels'] = labels
        return encoding


class MyDataLoader(BaseDataLoader):
    def __init__(self, data_dir, batch_size, split, shuffle=True,
                 validation_split=0.0, num_workers=1, processor=None):
        transform = transforms.Compose([
            transforms.Resize((224, 224))
        ])
        processor = AutoProcessor.from_pretrained(processor)
        self.data_dir = data_dir
        self.dataset = MyDataset(data_dir, split, image_transform=transform, processor=processor)
        super().__init__(self.dataset, batch_size, shuffle, validation_split, num_workers)
```
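One direction I have been considering is a custom `collate_fn`. As far as I understand, the default collation expects list-valued fields to have the same length in every sample, which is why the batch cannot be built here. The sketch below is only an illustration under assumptions: `ToyCaptionDataset`, `collate_keep_references`, and the `references` key are hypothetical names, and the toy dataset returns dummy tensors instead of real processor output. It stacks the tensor fields and leaves the caption lists as a plain list of lists.

```python
import torch
from torch.utils.data import DataLoader, Dataset


class ToyCaptionDataset(Dataset):
    """Hypothetical stand-in for MyDataset: tensors plus a variable-length caption list."""

    def __init__(self):
        self.captions = [
            ["a cat"],
            ["a dog", "a brown dog", "a puppy"],
            ["a bird", "a small bird"],
        ]

    def __len__(self):
        return len(self.captions)

    def __getitem__(self, i):
        return {
            "pixel_values": torch.zeros(3, 4, 4),  # dummy image tensor
            "input_ids": torch.full((5,), i),      # dummy token ids
            "references": self.captions[i],        # all captions, variable length
        }


def collate_keep_references(batch):
    """Stack tensor fields; keep non-tensor fields as one Python list per batch."""
    collated = {}
    for key in batch[0]:
        values = [sample[key] for sample in batch]
        if torch.is_tensor(values[0]):
            collated[key] = torch.stack(values)
        else:
            collated[key] = values  # list of lists, no padding required
    return collated


loader = DataLoader(
    ToyCaptionDataset(),
    batch_size=3,
    shuffle=False,
    collate_fn=collate_keep_references,
)
batch = next(iter(loader))
print(batch["pixel_values"].shape)
print(batch["references"])
```

With something like this, `batch["references"][k]` would hold every caption of the k-th sample in the batch, which seems to be the shape that multi-reference metrics expect. I am not sure how this would fit into `BaseDataLoader`, though.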

- Do I have to manually "pad" the lists, e.g., by adding empty strings so that all lists have equal length (and then remove those empty strings when calculating the metrics, I suppose)? Are there other solutions?
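For concreteness, this is roughly what I mean by padding. It is a pure-Python sketch with hypothetical helper names (`pad_captions`, `unpad_collated`), and it assumes that no real caption is an empty string. As far as I understand, default collation returns equal-length lists of strings transposed (one group per caption slot rather than per sample), so the un-padding step also has to transpose back; the `zip` call below only simulates that layout.

```python
def pad_captions(captions, max_captions, pad=""):
    """Pad one sample's caption list to a fixed length with a placeholder string."""
    if len(captions) > max_captions:
        raise ValueError("sample has more captions than max_captions")
    return captions + [pad] * (max_captions - len(captions))


def unpad_collated(collated, pad=""):
    """Undo the padding on a batch laid out as [caption_slot][sample]."""
    per_sample = zip(*collated)  # back to [sample][caption_slot]
    return [[c for c in caps if c != pad] for caps in per_sample]


samples = [["a cat"], ["a dog", "a brown dog", "a puppy"]]
max_captions = max(len(s) for s in samples)
padded = [pad_captions(s, max_captions) for s in samples]

# Simulate the transposed layout described above for lists of strings.
collated = list(zip(*padded))

references = unpad_collated(collated)
print(references)  # [['a cat'], ['a dog', 'a brown dog', 'a puppy']]
```

This works on the toy data, but it needs a known maximum number of captions and an extra clean-up step before every metric call, which is why I suspect there is a cleaner way.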