I think @Ethen.S's answer is not correct. Moreover, neither (a) nor (b) from the original question is true:

You might want to look at the source of Azure.Messaging.ServiceBus.ServiceBusProcessor (use the StartProcessingAsync method as an entry point) to better understand the dichotomy. It exposes an event that you subscribe to in order to handle messages "reactively", but under the hood it still performs a sequence of ReceiveMessageAsync calls for you. What looks like a "push" model from the consumer's perspective may still rely on a "poll" model behind the scenes.
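To make this concrete, here is a minimal Python sketch of an event-style processor built on nothing but a blocking pull call. It is an analogy, not the .NET SDK's implementation: PullReceiver, deliver, receive_message and Processor are hypothetical names, and an in-process queue stands in for the broker.

```python
import queue
import threading
import time
from typing import Callable, Optional


class PullReceiver:
    """Hypothetical receiver whose only primitive is a blocking
    'receive one message or time out' call (similar in spirit to ReceiveMessageAsync)."""

    def __init__(self) -> None:
        self._messages: "queue.Queue[str]" = queue.Queue()

    def deliver(self, body: str) -> None:
        # Simulates the broker making a message available to this receiver.
        self._messages.put(body)

    def receive_message(self, timeout: float) -> Optional[str]:
        # Blocks for up to `timeout` seconds; returns None if nothing arrived.
        try:
            return self._messages.get(timeout=timeout)
        except queue.Empty:
            return None


class Processor:
    """Hypothetical event-style facade: the caller only registers a handler,
    but internally this is just a loop of receive calls."""

    def __init__(self, receiver: PullReceiver, handler: Callable[[str], None]) -> None:
        self._receiver = receiver
        self._handler = handler
        self._stop = threading.Event()
        self._thread = threading.Thread(target=self._run, daemon=True)

    def start(self) -> None:
        self._thread.start()

    def stop(self) -> None:
        self._stop.set()
        self._thread.join()

    def _run(self) -> None:
        # The "push" the caller observes is produced by repeated pull calls.
        while not self._stop.is_set():
            message = self._receiver.receive_message(timeout=0.1)
            if message is not None:
                self._handler(message)


if __name__ == "__main__":
    receiver = PullReceiver()
    processor = Processor(receiver, lambda body: print("handled", body))
    processor.start()
    receiver.deliver("hello")
    time.sleep(0.5)  # give the background loop time to pick the message up
    processor.stop()
```

The caller never calls receive itself, yet every handler invocation is the result of a receive call on the background thread.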

Please also note that there is a concept of message prefetching, which can optimise some consumption scenarios but has its own caveats (see https://learn.microsoft.com/en-us/azure/service-bus-messaging/service-bus-prefetch?tabs=dotnet#why-is-prefetch-not-the-default-option). It is still the same polling strategy, just with an additional client-level buffer on top.
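A minimal Python sketch of what "a client-level buffer on top" means, under stated assumptions: fetch_batch is a hypothetical stand-in for one round trip to the broker returning up to n messages, make_broker is a toy in-memory broker, and PrefetchingReceiver is not an SDK class. It shows that prefetching cuts round trips without changing the request/response nature of the exchange.

```python
from collections import deque
from typing import Callable, Deque, List, Optional


class PrefetchingReceiver:
    """Hypothetical client-side buffer layered over a pull primitive.

    fetch_batch(n) stands in for one round trip to the broker returning up to
    n messages; it is an assumption for illustration, not an SDK call.
    """

    def __init__(self, fetch_batch: Callable[[int], List[str]], prefetch_count: int) -> None:
        self._fetch_batch = fetch_batch
        self._batch_size = max(1, prefetch_count)  # no prefetch = one message per trip
        self._buffer: Deque[str] = deque()
        self.round_trips = 0

    def receive_message(self) -> Optional[str]:
        # Serve from the local buffer; only ask the broker when it is empty.
        if not self._buffer:
            self.round_trips += 1
            self._buffer.extend(self._fetch_batch(self._batch_size))
        return self._buffer.popleft() if self._buffer else None

    def buffered(self) -> int:
        # Messages already taken from the broker but not yet handed to the app.
        # In peek-lock mode their locks keep running while they wait here.
        return len(self._buffer)


def make_broker(messages: List[str]) -> Callable[[int], List[str]]:
    # Hypothetical in-memory broker: each call hands out up to n pending messages.
    pending = deque(messages)

    def fetch_batch(n: int) -> List[str]:
        batch = []
        while pending and len(batch) < n:
            batch.append(pending.popleft())
        return batch

    return fetch_batch


if __name__ == "__main__":
    for prefetch in (0, 5):
        receiver = PrefetchingReceiver(make_broker([f"m{i}" for i in range(10)]), prefetch)
        received = [receiver.receive_message() for _ in range(10)]
        print(prefetch, receiver.round_trips, received == [f"m{i}" for i in range(10)])
```

Receiving 10 messages takes 10 round trips without prefetch and 2 with a prefetch count of 5, but in the latter case up to 4 messages sit in local memory at a time, which is where the lock-expiry caveat from the linked page comes from.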

UPDATE: I dug deeper to capture what actually happens on the wire.

I used sslsplit to perform a man-in-the-middle (MITM) interception of the TLS-protected AMQP connection and capture the decrypted data. The test application setup is simple:

- The receiver calls ReceiveMessageAsync in a loop.

- After some time, the sender sends a message (twice).

In summary, this is indeed neither "poll" nor "push", as those definitions are rather vague. What we can say for sure is:

- it uses a persistent TCP connection

- receiving is controlled by the client side using "link credit" (via the "flow" performative)

- while the client is idle waiting for messages, an empty AMQP frame (a keep-alive) is sent roughly once every 30 seconds

- Service Bus can notify the receiver efficiently, without waiting for another request, as long as the receiver has granted enough "link credit"
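These observations can be modelled with a toy Python sketch of the broker end of a single AMQP 1.0 link. It is a simplification under stated assumptions: CreditedLink and its methods are hypothetical, flow simply resets the credit (real AMQP 1.0 tracks it relative to delivery-count), and sessions, settlement and locks are ignored. The empty_frame helper builds the 8-byte AMQP 1.0 frame header with no body, the kind of empty frame either peer may send as a keep-alive.

```python
import struct
from collections import deque
from typing import Deque, List, Tuple


def empty_frame(channel: int = 0) -> bytes:
    # AMQP 1.0 frame header: SIZE (uint32), DOFF (uint8, in 4-byte words),
    # TYPE (uint8, 0x00 = AMQP frame), channel (uint16). With no body the
    # whole frame is just this 8-byte header.
    return struct.pack(">IBBH", 8, 2, 0x00, channel)


class CreditedLink:
    """Toy model of the broker (sending) end of one AMQP 1.0 link.

    Hypothetical and simplified: flow just resets the credit, whereas real
    AMQP 1.0 computes it relative to delivery-count; sessions, settlement and
    locks are ignored.
    """

    def __init__(self) -> None:
        self.link_credit = 0
        self.pending: Deque[str] = deque()
        self.wire: List[Tuple[str, str]] = []  # performatives that would hit the socket

    def on_flow(self, credit: int) -> None:
        # Receiver -> broker: a flow performative granting link credit.
        self.wire.append(("flow", f"link-credit={credit}"))
        self.link_credit = credit
        self._drain()

    def publish(self, body: str) -> None:
        # A sender puts a message into the entity.
        self.pending.append(body)
        self._drain()

    def _drain(self) -> None:
        # Broker -> receiver: one transfer per message, only while credit remains.
        while self.link_credit > 0 and self.pending:
            self.link_credit -= 1
            self.wire.append(("transfer", self.pending.popleft()))


if __name__ == "__main__":
    print(empty_frame().hex())  # keep-alive bytes as they would appear in a capture
    link = CreditedLink()
    link.on_flow(1)        # receiver is waiting with one unit of credit
    link.publish("m1")     # transferred immediately: credit was outstanding
    link.publish("m2")     # held: credit is exhausted
    link.on_flow(1)        # receiver grants credit again, m2 is transferred now
    for performative in link.wire:
        print(performative)
```

The first message is transferred the moment it is published because credit is outstanding (push-like), while the second waits for another flow from the receiver (poll-like), which is why neither label fits cleanly.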

I've put the test code and test harness here: https://github.com/gubenkoved/amqp-test.

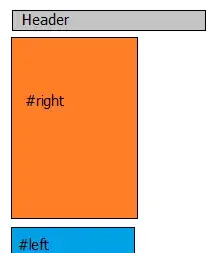

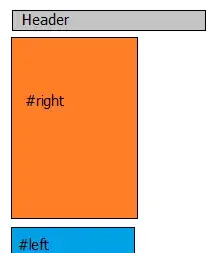

Below is the capture results, I've colored sender/receiver TCP streams as red/green.