I'd like to use a JavaScript function in a BigQuery UDF to check whether a file exists in Google Cloud Storage. But BigQuery keeps showing an error when I run `SELECT UDF_FUNCTION_NAME()`:

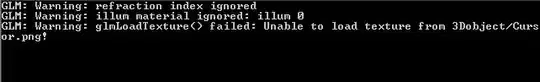

```
ReferenceError: require is not defined at
```

My JavaScript code looks like this. The file to check is at gs://MY_BUCKET_PATH/FILE_NAME, and this function checks whether the file exists at that path.

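```javascript
function FileExist() {
  // Cloud Storage client for Node.js; `require` is the Node.js module loader
  const {Storage} = require('@google-cloud/storage');
  const storage = new Storage();
  // Checks whether gs://MY_BUCKET_PATH/FILE_NAME exists.
  // exists() is asynchronous: it returns a Promise that resolves to [boolean].
  return storage.bucket('MY_BUCKET_PATH').file('FILE_NAME').exists();
}
```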
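In `FileExist`, the bucket name and the object name are passed separately to `storage.bucket(...)` and `.file(...)`, while the location is written as a single `gs://` URI. As a minimal sketch (the helper `parseGcsUri` is hypothetical, not part of the Cloud Storage client), the URI can be split like this. Everything after the first `/` that follows the bucket belongs to the object name, including any further slashes:

```javascript
// Hypothetical helper: splits "gs://bucket/object" into the bucket name and
// the object name that storage.bucket(...) and .file(...) expect.
function parseGcsUri(uri) {
  // Group 1: bucket (no slashes). Group 2: object name (may contain slashes).
  const match = /^gs:\/\/([^\/]+)\/(.+)$/.exec(uri);
  if (match === null) {
    throw new Error("Not a gs://bucket/object URI: " + uri);
  }
  return { bucket: match[1], object: match[2] };
}

const parts = parseGcsUri("gs://MY_BUCKET_PATH/FILE_NAME");
console.log(parts.bucket, parts.object);
```

For example, `gs://MY_BUCKET_PATH/FILE_NAME` yields bucket `MY_BUCKET_PATH` and object `FILE_NAME`.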

And my UDF looks like this:

```sql
CREATE TEMP FUNCTION My_Function()
RETURNS BOOL
LANGUAGE js
OPTIONS (
  library=["gs://MY_BUCKET_PATH/FILE_NAME"]
)
AS r"""
  return FileExist();
""";

SELECT My_Function();
```
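The `ReferenceError` suggests that the JavaScript environment BigQuery uses for UDFs does not provide Node.js's `require`, so a file listed in `library` presumably has to be plain JavaScript with no npm dependencies. Below is a minimal sketch of such a library file, assuming a hypothetical file name `helpers.js` and a hypothetical function `isGcsUri`. Note that it only checks the text of a URI and cannot tell whether the object actually exists:

```javascript
// helpers.js (hypothetical library file stored in Cloud Storage and listed in
// the UDF's `library` option). It uses only built-in JavaScript: no require,
// no npm packages.

// Returns true if `uri` has the form gs://bucket/object.
// This checks the string format only; it does not contact Cloud Storage.
function isGcsUri(uri) {
  if (typeof uri !== "string") {
    return false;
  }
  return /^gs:\/\/[^\/]+\/.+$/.test(uri);
}
```

Under these assumptions, the UDF would declare a `uri STRING` parameter, list the hypothetical `gs://MY_BUCKET_PATH/helpers.js` in `library`, and use `return isGcsUri(uri);` as its body.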

How can I make this BigQuery UDF use the JavaScript file stored in Google Cloud Storage?

I tried putting the JavaScript code directly in the UDF body, but it didn't work, I think because of a problem with the npm library. So now I'm trying to call the function from a library file instead.