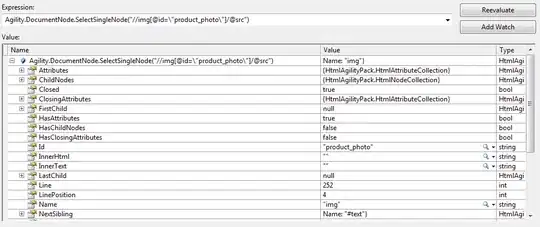

This is the table I want to transpose

I built a list of the distinct (DESC_INFO, RAW_INFO) pairs, skipping rows where DESC_INFO is empty, using this:

```python
from pyspark.sql import functions as F

columnsToPivot = list(dict.fromkeys(df.filter(F.col("DESC_INFO") != '').rdd.map(lambda x: (x.DESC_INFO, x.RAW_INFO)).collect()))
```

And then I tried to map the RAW_INFO values into the matching columns with this:

```python
for key in columnsToPivot:
    if key[1] != '':
        df = df.withColumn(key[0], F.lit(key[1]))
```

However, this writes the same value into every row of each new column, whereas I want to fill in RAW_INFO only on the rows that match on the same 'PROCESS', 'SUBPROCESS' and 'LAYER'.

This is the map I expect at the end.

The blue lines mark the transpose I have already achieved. The red lines mark the data I still need to fill in, matching on the condition highlighted in yellow.