I created a Speech resource in the Azure Portal:-

In the Azure Portal, go to Create a resource, search for Speech, and click Create. I created the Speech service on the Standard S0 tier; the Free F0 tier works too.

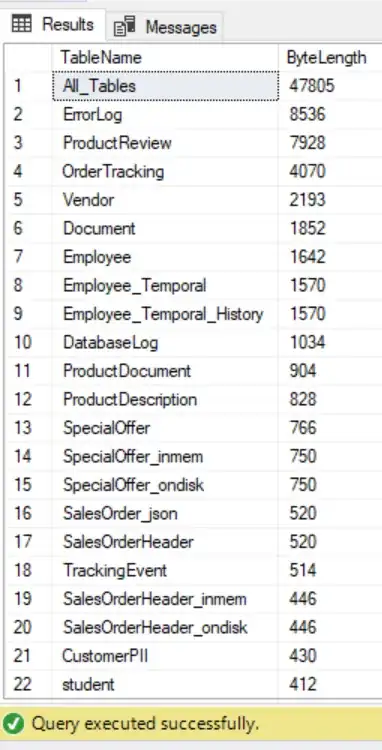

Next, open Keys and Endpoint in the left pane of your Speech service (under Resource Management). Copy one of the two keys (Key 1 or Key 2) and the Location/Region value, then save both as environment variables from the VS Code terminal:-

Keys and Endpoint of Speech resource:-

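Set SPEECH_KEY and SPEECH_REGION as environment variables in your terminal, replacing the placeholders with your own values:-

    setx SPEECH_KEY your-key
    setx SPEECH_REGION your-region

Note that setx only affects terminals opened afterwards, so open a new terminal (or restart VS Code) before running the code.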
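A missing variable is a common cause of the cancellation error in the scripts that follow, so it can help to check both values up front. This is only a minimal sketch: `load_speech_settings` is a hypothetical helper name of mine, not part of the Speech SDK.

```python
import os


def load_speech_settings(env=os.environ):
    """Return (key, region), or raise a readable error if either is missing.

    Hypothetical helper for illustration; not part of the Speech SDK.
    """
    required = ("SPEECH_KEY", "SPEECH_REGION")
    # Treat unset and empty values the same way.
    missing = [name for name in required if not env.get(name)]
    if missing:
        raise RuntimeError(
            "Missing environment variable(s): " + ", ".join(missing)
            + ". Set them with setx and open a new terminal."
        )
    return env["SPEECH_KEY"], env["SPEECH_REGION"]
```

You could call `key, region = load_speech_settings()` and pass the two values to `speechsdk.SpeechConfig(subscription=key, region=region)`.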

Method 1) Convert speech to text using your local machine's microphone. You can use this as a starting point for real-time audio:-

    import os
    import azure.cognitiveservices.speech as speechsdk

    def recognize_from_microphone():
        # This example requires environment variables named "SPEECH_KEY" and "SPEECH_REGION"
        speech_config = speechsdk.SpeechConfig(subscription=os.environ.get('SPEECH_KEY'), region=os.environ.get('SPEECH_REGION'))
        speech_config.speech_recognition_language="en-US"

        # Capture audio from the default microphone
        audio_config = speechsdk.audio.AudioConfig(use_default_microphone=True)
        speech_recognizer = speechsdk.SpeechRecognizer(speech_config=speech_config, audio_config=audio_config)

        print("Speak into your microphone.")
        # Recognize a single utterance and wait for the result
        speech_recognition_result = speech_recognizer.recognize_once_async().get()

        if speech_recognition_result.reason == speechsdk.ResultReason.RecognizedSpeech:
            print("Recognized: {}".format(speech_recognition_result.text))
        elif speech_recognition_result.reason == speechsdk.ResultReason.NoMatch:
            print("No speech could be recognized: {}".format(speech_recognition_result.no_match_details))
        elif speech_recognition_result.reason == speechsdk.ResultReason.Canceled:
            cancellation_details = speech_recognition_result.cancellation_details
            print("Speech Recognition canceled: {}".format(cancellation_details.reason))
            if cancellation_details.reason == speechsdk.CancellationReason.Error:
                print("Error details: {}".format(cancellation_details.error_details))
                print("Did you set the speech resource key and region values?")

    recognize_from_microphone()

Output :-

The script first prints "Speak into your microphone."; speak a sentence and the recognized text is printed to the terminal as "Recognized: ..." followed by your sentence.
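Since recognize_once_async() returns after a single utterance, ongoing real-time audio usually calls for continuous recognition instead. The sketch below assumes a `speech_recognizer` built exactly as in Method 1 and uses the SDK's `recognized`, `session_stopped` and `canceled` events together with `start_continuous_recognition()` and `stop_continuous_recognition()`. The function name `transcribe_continuously` and its `timeout_seconds` parameter are my own, not SDK names.

```python
import threading


def transcribe_continuously(speech_recognizer, timeout_seconds=None):
    """Collect recognized phrases until the session stops, is canceled, or times out.

    Illustrative helper (not an SDK function). Expects a recognizer created
    as in Method 1.
    """
    phrases = []
    finished = threading.Event()

    def on_recognized(evt):
        # Fired once per finalized phrase; skip empty results.
        if evt.result.text:
            phrases.append(evt.result.text)

    def on_finished(evt):
        # Fired when the session ends or recognition is canceled.
        finished.set()

    speech_recognizer.recognized.connect(on_recognized)
    speech_recognizer.session_stopped.connect(on_finished)
    speech_recognizer.canceled.connect(on_finished)

    speech_recognizer.start_continuous_recognition()
    try:
        # With timeout_seconds=None this waits until a stop/cancel event arrives.
        finished.wait(timeout_seconds)
    finally:
        speech_recognizer.stop_continuous_recognition()
    return phrases
```

With a microphone the session does not end by itself, so pass a timeout, for example `transcribe_continuously(speech_recognizer, timeout_seconds=30)`. With a file as input, the wait should end once the end of the audio is reached.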

Method 2) Convert an audio file to text:-

I added an audio.wav file to the same folder as my code and passed it to the recognizer with audio_config = speechsdk.audio.AudioConfig(filename="audio.wav"). Running the code printed the text recognized from the audio file:-

    import os
    import azure.cognitiveservices.speech as speechsdk

    def recognize_from_file():
        # This example requires environment variables named "SPEECH_KEY" and "SPEECH_REGION"
        speech_config = speechsdk.SpeechConfig(subscription=os.environ.get('SPEECH_KEY'), region=os.environ.get('SPEECH_REGION'))
        speech_config.speech_recognition_language="en-US"

        # Read audio from a WAV file instead of the microphone
        audio_config = speechsdk.audio.AudioConfig(filename="audio.wav")
        speech_recognizer = speechsdk.SpeechRecognizer(speech_config=speech_config, audio_config=audio_config)

        # Recognize a single utterance from the file and wait for the result
        speech_recognition_result = speech_recognizer.recognize_once_async().get()

        if speech_recognition_result.reason == speechsdk.ResultReason.RecognizedSpeech:
            print("Recognized: {}".format(speech_recognition_result.text))
        elif speech_recognition_result.reason == speechsdk.ResultReason.NoMatch:
            print("No speech could be recognized: {}".format(speech_recognition_result.no_match_details))
        elif speech_recognition_result.reason == speechsdk.ResultReason.Canceled:
            cancellation_details = speech_recognition_result.cancellation_details
            print("Speech Recognition canceled: {}".format(cancellation_details.reason))
            if cancellation_details.reason == speechsdk.CancellationReason.Error:
                print("Error details: {}".format(cancellation_details.error_details))
                print("Did you set the speech resource key and region values?")

    recognize_from_file()

Output:-

By default, only WAV files (16 kHz or 8 kHz, 16-bit, mono PCM) are supported. Compressed input needs GStreamer, which adds support for the formats below:-

MP3, OPUS/OGG, FLAC, ALAW in WAV container, MULAW in WAV container, ANY for MP4 container or unknown media format.
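To find out whether a file already matches the default WAV format before sending it to the service, you can inspect its header with Python's built-in wave module. This is a rough sketch: `is_default_wav_format` is a name I made up, and the check only covers channel count, sample width and sample rate.

```python
import wave


def is_default_wav_format(path):
    """Return True if the file is mono, 16-bit PCM WAV at 8 kHz or 16 kHz.

    Illustrative helper; not part of the Speech SDK.
    """
    try:
        with wave.open(path, "rb") as wav_file:
            return (
                wav_file.getnchannels() == 1            # mono
                and wav_file.getsampwidth() == 2        # 2 bytes = 16-bit samples
                and wav_file.getframerate() in (8000, 16000)
            )
    except (wave.Error, EOFError):
        # Not a PCM WAV file the wave module can parse (e.g. MP3 or ALAW).
        return False
```

If `is_default_wav_format("audio.wav")` returns False, the file likely needs converting or the GStreamer route from the second reference.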

Reference:-

Speech-to-text quickstart - Speech service - Azure Cognitive Services | Microsoft Learn

How to use compressed input audio - Speech service - Azure Cognitive Services | Microsoft Learn