What is an appropriate procedure for evaluating the efficiency, and identifying the limitations, of the FEC algorithm currently implemented in Unet audio?

I want to analyze how effective the current FEC algorithm in Unet audio is: specifically, how many bit errors it can correct and what its limitations are.

To do this, I tried using the bit error rate (BER) as a metric for FEC performance under various conditions, taking the number of errors in the received data from the reported BER value.
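A single test frame reports BER as a count of bit errors over the bits in the frame (for example 0/144), so pooling many frames gives a more stable estimate than reading one frame. Below is a minimal Python sketch. It assumes the BER values are copied from the shell as strings of the form `errors/bits`. The helpers `parse_ber` and `pooled_ber` are hypothetical and are not part of Unet.

```python
from typing import Iterable, Tuple


def parse_ber(ber: str) -> Tuple[int, int]:
    """Split a BER string such as '3/144' into (bit_errors, total_bits)."""
    errors_str, bits_str = ber.strip().split("/")
    errors, bits = int(errors_str), int(bits_str)
    if bits <= 0 or not 0 <= errors <= bits:
        raise ValueError(f"invalid BER string: {ber!r}")
    return errors, bits


def pooled_ber(samples: Iterable[str]) -> float:
    """Total bit errors divided by total bits, pooled over many test frames."""
    total_errors = total_bits = 0
    for sample in samples:
        errors, bits = parse_ber(sample)
        total_errors += errors
        total_bits += bits
    if total_bits == 0:
        raise ValueError("no samples given")
    return total_errors / total_bits
```

For example, `pooled_ber(["0/144", "3/144", "9/144"])` gives 12/432, or about 0.028. That figure is more meaningful than any one of the three frames on its own.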

I used two laptops for this experiment, because errors are very unlikely when a single laptop both transmits and receives. With `phy[].fec` set to zero, I could observe errors in the received data (via the BER), and when I turned FEC on, it corrected all of them (BER = 0/144). Next, I introduced ambient noise by playing music during transmission so that it would interfere with the signal, to simulate a more realistic underwater scenario. This caused many errors in the received data, even with FEC enabled.
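A single clean frame (0/144) shows only that the post-FEC BER is probably below a few percent. A binomial confidence interval makes this concrete and suggests how many frames each condition needs. Below is a sketch of the standard Wilson score interval in Python. The function name is illustrative. The calculation assumes independent bit errors, which is optimistic after FEC because residual errors are typically clustered.

```python
import math


def wilson_interval(errors: int, bits: int, z: float = 1.96):
    """Wilson score interval for a BER estimate (z = 1.96 gives ~95%).

    Assumes bit errors are independent, which is optimistic after FEC,
    where residual errors are typically clustered within failed frames.
    """
    if bits <= 0 or not 0 <= errors <= bits:
        raise ValueError("need bits > 0 and 0 <= errors <= bits")
    p = errors / bits
    z2 = z * z
    denom = 1 + z2 / bits
    centre = (p + z2 / (2 * bits)) / denom
    half = z * math.sqrt(p * (1 - p) / bits + z2 / (4 * bits * bits)) / denom
    return max(0.0, centre - half), min(1.0, centre + half)
```

For one frame at 0/144, `wilson_interval(0, 144)` gives an upper bound of roughly 0.026. This means a single clean frame is still consistent with a post-FEC BER of up to about 2.6%. One hundred clean frames (`wilson_interval(0, 14400)`) tighten the bound to roughly 0.00027.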

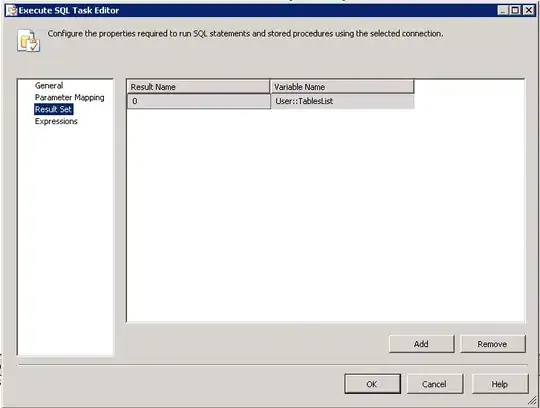

The screenshot above shows the two Unet audio shells used for the transmission. In this case, a standard test frame is transmitted so that the BER can be computed for the frame (`phy[].test` is set to `true`).

The first `TxFrameReq` is sent without any noise, and the second is sent in the presence of noise.

As the screenshots show, when noise is added during transmission, errors appear in the received frame and the data is corrupted even though FEC is enabled.
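To see where FEC stops coping, tabulate each condition separately (FEC on or off, with or without noise). Report the frame error rate alongside the pooled BER, because a frame with even one residual bit error is corrupted. Below is a Python sketch. The record layout `(fec_on, noisy, "errors/bits")` and the function `summarize` are hypothetical conventions for hand-logged results, not Unet output formats.

```python
from collections import defaultdict


def summarize(records):
    """Group hand-logged test-frame results by condition.

    records: iterable of (fec_on, noisy, "errors/bits") tuples.
    Returns {(fec_on, noisy): (frames, pooled_ber, frame_error_rate)}.
    """
    # Per condition: [frames, frames with errors, bit errors, total bits]
    stats = defaultdict(lambda: [0, 0, 0, 0])
    for fec_on, noisy, ber in records:
        errors_str, bits_str = ber.split("/")
        errors, bits = int(errors_str), int(bits_str)
        s = stats[(fec_on, noisy)]
        s[0] += 1
        s[1] += int(errors > 0)
        s[2] += errors
        s[3] += bits
    return {
        key: (frames, bit_errs / total_bits, bad / frames)
        for key, (frames, bad, bit_errs, total_bits) in stats.items()
    }
```

Reporting both numbers helps separate two effects. A few badly corrupted frames and many lightly corrupted frames can produce the same BER but very different frame error rates.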

Is this method an appropriate and justified way to analyze the efficiency and find the limitations of FEC in Unet audio? If not, I would appreciate suggestions for a better approach.
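One common way to characterize an FEC scheme is to increase the noise in controlled steps. This is a general suggestion, not a documented Unet procedure. The noise control could be, for example, music volume or distance between the laptops. At each step, measure the raw BER with `phy[].fec` set to 0 and the post-FEC BER with FEC on, then find the raw BER at which FEC first fails. Below is a Python sketch. The function `fec_threshold` and its inputs are hypothetical. Because the raw and post-FEC readings come from separate transmissions, the channel should be kept as constant as possible between them.

```python
def fec_threshold(levels, target=0.0):
    """Estimate the highest raw (uncoded) BER that FEC still handles.

    levels: iterable of (raw_ber, post_fec_ber) pairs, one per noise step,
            where raw_ber is measured with FEC off and post_fec_ber with
            FEC on under the same noise setting.
    target: post-FEC BER treated as acceptable (0.0 means error-free).
    Returns the largest raw BER before post-FEC BER first exceeds target,
    or None if FEC already fails at the lowest noise step.
    """
    best = None
    for raw, post in sorted(levels):
        if post > target:
            break  # first noise step where FEC no longer meets the target
        best = raw
    return best
```

For example, the hypothetical steps `[(0.01, 0.0), (0.03, 0.0), (0.06, 0.002), (0.04, 0.0)]` give a threshold of 0.04. Repeating each step over many frames, rather than just one, keeps the estimate from being dominated by chance.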