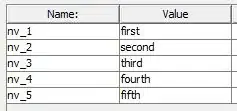

I'm trying to extract table from a pdf that had a lot of name of media sources. The desired output is a comprehensive csv file with a column with all the sources listed.

I'm trying to write a simple python script to extract table data from a pdf. The output I was able to reach is a CSV for every table that I try to combine. Then I use the concat function to merge all the files.

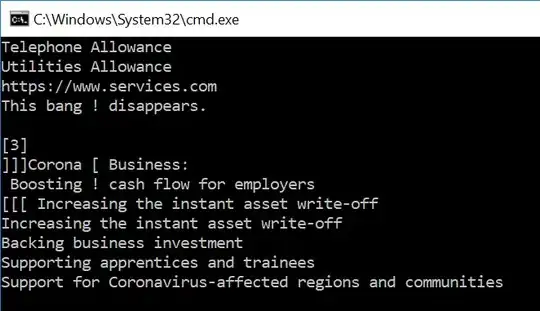

The result is messy, I have redundant punctuation and a lot of spaces in the file.

Can somebody help me reach a better result?

Code:

from camelot import read_pdf

import glob

import os

import pandas as pd

import numpy as np

extension = 'csv'

all_filenames = [i for i in glob.glob('*.{}'.format(extension))]

# Get all the tables within the file

all_tables = read_pdf("/Users/zago/code/pdftext/pdftextvenv/mimesiweb.pdf", pages = 'all')

# Show the total number of tables in the file

print("Total number of table: {}".format(all_tables.n))

# print all the tables in the file

for t in range(all_tables.n):

print("Table n°{}".format(t))

print((all_tables[t].df).head())

#convert to excel or csv

#all_tables.export('table.xlsx', f="excel")

all_tables.export('table.csv', f="csv")

extension = 'csv'

all_filenames = [i for i in glob.glob('*.{}'.format(extension))]

#combine all files in the list

combined_csv = pd.concat([pd.read_csv(f,encoding = 'utf-8', sep=',') for f in all_filenames ])

#export to csv

combined_csv.to_csv("combined_csv_tables.csv", index=False, encoding="utf-8")

Thanks