I have a simple Flink / Kinesis Data Analytics application made of two tasks: Source -> Transform, then Repartition -> Sink. The application runs on 32 KPUs with a parallelism per KPU of 1 (so 32 subtasks per task) and reads from a Kinesis stream with 60 shards. After the transformation stage I key by a random integer between 1 and 32, in an effort to spread the work evenly across the subtasks. However, I'm not seeing an even distribution of work across subtasks in either the source or the sink.
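Keying by 32 distinct values does not guarantee one value per subtask. Flink hashes each key into one of `maxParallelism` key groups and then maps contiguous ranges of key groups onto subtasks. The standalone Java sketch below re-implements that routing so the distribution can be inspected without a cluster. It assumes the murmur hash and the key-group-to-subtask formula used by Flink's `KeyGroupRangeAssignment`, plus a max parallelism of 128 (what I believe Flink picks by default for a parallelism of 32). Both should be checked against the Flink version Kinesis Data Analytics is running. `keysCoveringEverySubtask` is a hypothetical helper for one possible workaround: search for one key per subtask and key by those keys instead of by 1..32.

```java
import java.util.Arrays;

/**
 * Simulates how Flink routes a keyed record to a downstream subtask.
 * Assumption: mirrors MathUtils.murmurHash and KeyGroupRangeAssignment
 * from recent Flink releases; verify against your Flink version.
 */
public class KeyGroupDistribution {

    // Assumed re-implementation of Flink's MathUtils.murmurHash(int).
    static int murmurHash(int code) {
        code *= 0xcc9e2d51;
        code = Integer.rotateLeft(code, 15);
        code *= 0x1b873593;
        code = Integer.rotateLeft(code, 13);
        code = code * 5 + 0xe6546b64;
        code ^= 4;
        // Final avalanche step (Flink's bitMix).
        code ^= code >>> 16;
        code *= 0x85ebca6b;
        code ^= code >>> 13;
        code *= 0xc2b2ae35;
        code ^= code >>> 16;
        // Return a non-negative value.
        if (code >= 0) return code;
        if (code != Integer.MIN_VALUE) return -code;
        return 0;
    }

    // key -> key group -> subtask index.
    static int subtaskFor(int key, int maxParallelism, int parallelism) {
        int keyGroup = murmurHash(Integer.hashCode(key)) % maxParallelism;
        return keyGroup * parallelism / maxParallelism;
    }

    // Counts how many of the keys 1..numKeys are routed to each subtask.
    static int[] countKeysPerSubtask(int numKeys, int maxParallelism, int parallelism) {
        int[] counts = new int[parallelism];
        for (int key = 1; key <= numKeys; key++) {
            counts[subtaskFor(key, maxParallelism, parallelism)]++;
        }
        return counts;
    }

    // Hypothetical workaround: find, for each subtask, one key that routes to it.
    // Keying by keys[randomSubtask] then sends records to every subtask evenly.
    static int[] keysCoveringEverySubtask(int maxParallelism, int parallelism) {
        int[] keys = new int[parallelism];
        Arrays.fill(keys, -1);
        int found = 0;
        for (int candidate = 0; found < parallelism; candidate++) {
            int subtask = subtaskFor(candidate, maxParallelism, parallelism);
            if (keys[subtask] == -1) {
                keys[subtask] = candidate;
                found++;
            }
        }
        return keys;
    }

    public static void main(String[] args) {
        int parallelism = 32;
        int maxParallelism = 128; // assumed default for parallelism 32
        int[] counts = countKeysPerSubtask(32, maxParallelism, parallelism);
        System.out.println("keys per subtask: " + Arrays.toString(counts));
        long idle = Arrays.stream(counts).filter(c -> c == 0).count();
        System.out.println("subtasks with no key: " + idle);
        System.out.println("one key per subtask: "
                + Arrays.toString(keysCoveringEverySubtask(maxParallelism, parallelism)));
    }
}
```

Hashing only 32 keys into 32 buckets is very likely to leave some subtasks with several keys and others with none, even when the random key itself is perfectly uniform. Choosing keys that are known to land on distinct subtasks sidesteps that, at the cost of depending on Flink's internal routing formula.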

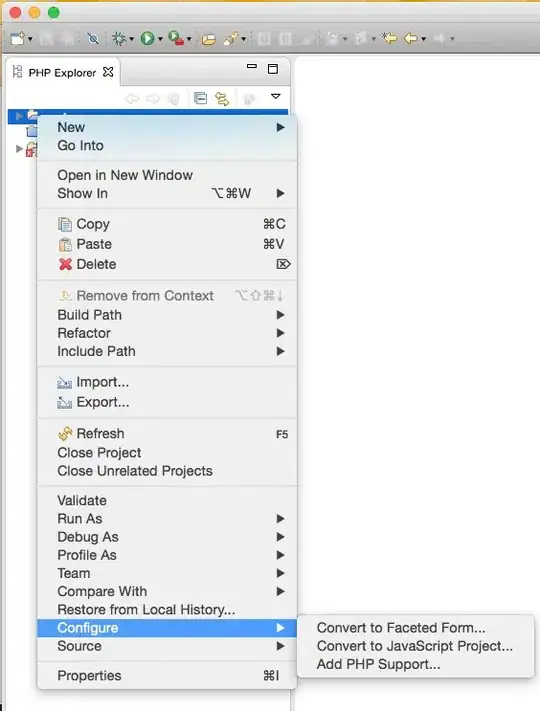

Here are the first ten of the 32 subtasks in the first task (before the repartition). Only 20 of the 32 subtasks are reading any data:

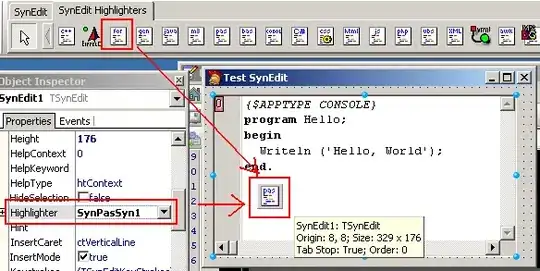

Here are the first ten subtasks in the second task (after the repartition):

I've checked that all 60 shards on Kinesis are producing data. So that's not the problem. My two questions are:

- How do I read data from the source evenly into all available subtasks?

- How do I force Flink to assign key groups evenly across subtasks?
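On the source side, which subtask reads which shard is decided by the Kinesis connector's shard assigner, not by the data itself. The standalone Java sketch below (no Flink dependency) simulates a round-robin assignment by shard number. This is the kind of logic one might put behind the connector's `KinesisShardAssigner` interface, which in the connector versions I'm familiar with is registered via `FlinkKinesisConsumer#setShardAssigner`; verify that for the version you're on. The sketch assumes shard IDs of the form `shardId-000000000042` and that shard numbers are dense, which stops being true after resharding. `ShardSpread` and its methods are hypothetical names.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

/**
 * Simulates spreading Kinesis shards round-robin over source subtasks.
 * Assumption: shard IDs look like "shardId-000000000042" and shard
 * numbers are contiguous (no resharding has happened).
 */
public class ShardSpread {

    // Parses the numeric suffix of an ID like "shardId-000000000042".
    static int shardNumber(String shardId) {
        return Integer.parseInt(shardId.substring(shardId.lastIndexOf('-') + 1));
    }

    // Round-robin: shard n goes to subtask n % parallelism.
    static int assign(String shardId, int parallelism) {
        return shardNumber(shardId) % parallelism;
    }

    // Counts how many shards each subtask would read.
    static int[] shardsPerSubtask(List<String> shardIds, int parallelism) {
        int[] counts = new int[parallelism];
        for (String id : shardIds) {
            counts[assign(id, parallelism)]++;
        }
        return counts;
    }

    // Builds IDs shardId-000000000000 .. shardId-(n-1), zero-padded to 12 digits.
    static List<String> shardIds(int n) {
        List<String> ids = new ArrayList<>();
        for (int i = 0; i < n; i++) {
            ids.add(String.format("shardId-%012d", i));
        }
        return ids;
    }

    public static void main(String[] args) {
        int[] counts = shardsPerSubtask(shardIds(60), 32);
        System.out.println("shards per subtask: " + Arrays.toString(counts));
    }
}
```

With 60 shards and 32 subtasks, this assignment gives 28 subtasks two shards and 4 subtasks one shard. So even a perfect assignment leaves some imbalance unless the shard count is a multiple of the parallelism.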