I have an Azure Function App on an Elastic Premium App Service plan. The Function App is inside a VNet, and it is a Durable Functions app with some functions triggered by non-HTTP triggers.

I noticed that the plan is not scaling out properly. The minimum number of instances is 4 and the maximum is 100:

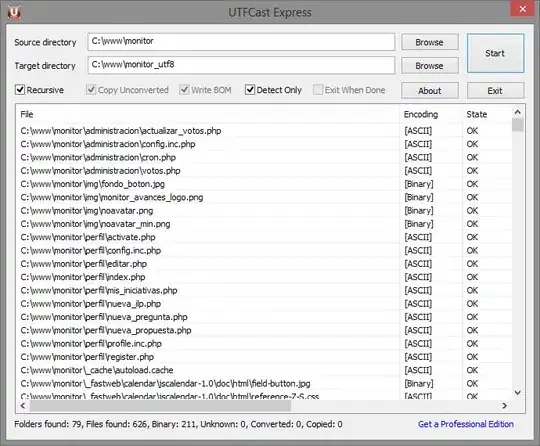

but checking the number of instances in the last 24 hours in:

"Function App -> Diagnose and solve problems -> Availability and performance -> HTTP Functions scaling"

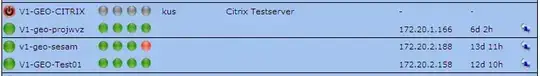

the number of instances never goes above 5 or 6:

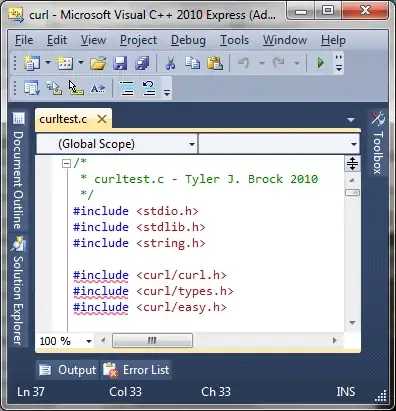

which is not good because if I check in:

"Function App -> Diagnose and solve problems -> Availability and performance -> Linux Load Average"

I have the following message:

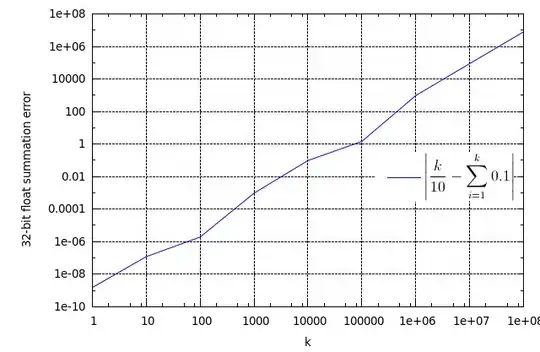

"High Load Average Detected. Shows Load Average information if system has Load Average > 4 times # of Cpu's in the timeframe. For more information see Additional Investigation notes below."

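To make that threshold concrete, here is a minimal sketch of how I read the message: the load is flagged as high when the load average exceeds 4 times the number of CPUs. The function name `is_high_load` and the `factor` parameter are my own names for illustration, and this is only my interpretation of the message, not how the Azure detector is actually implemented.

```python
import os


def is_high_load(load_average: float, cpu_count: int, factor: float = 4.0) -> bool:
    """Return True when the load average exceeds `factor` times the CPU count.

    `factor` defaults to 4 to mirror the wording of the portal message
    (an assumption about what the detector checks).
    """
    if cpu_count <= 0:
        raise ValueError("cpu_count must be positive")
    return load_average > factor * cpu_count


if __name__ == "__main__":
    # os.getloadavg() is only available on Unix-like systems.
    one_min, five_min, fifteen_min = os.getloadavg()
    cpus = os.cpu_count() or 1
    print(f"1-minute load average: {one_min:.2f} on {cpus} CPU(s)")
    print("High load detected" if is_high_load(one_min, cpus) else "Load looks normal")
```
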
Also, the CPU and memory usage metrics show some high spikes, so I think my App Service plan is not scaling out properly. One of my colleagues suggested checking this setting:

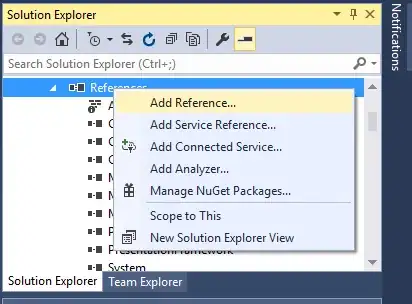

"Function App -> Configuration -> Function runtime settings -> Runtime Scale Monitoring"

because if it is set to "off", the VNet may be blocking Azure from inspecting our app, and as a result Azure is not spawning more instances because it cannot see the CPU load in real time. But I don't understand how this setting can help me scale out. Do you know what "Runtime Scale Monitoring" is used for and why it could help me scale out?

Also, do you think this is more of a scale-up problem than a scale-out problem?