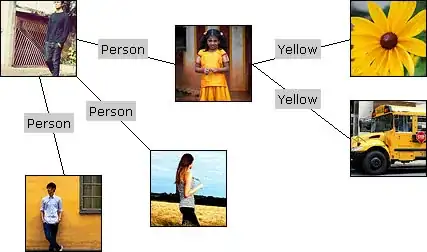

I am trying to train a bot for a game like Curve Fever. The agent is like a snake that moves with a precise turn radius (not 90° turns) and leaves random holes in its trail that it can pass through. As in a snake game, it dies if it leaves the map or hits itself. The difference is in the scoring: there is no food, and the snake simply has to survive as long as possible. The snake's tail grows by 1 at every step. It looks like the screenshot above.

I use a deep Q-learning algorithm with a CNN, inspired by the Flappy Bird deep Q-learning project, which is itself inspired by DeepMind's paper Playing Atari with Deep Reinforcement Learning.

My input images are thresholded frames like the one above, where every pixel is either black or white. At every step I give a reward of +0.1 for staying alive and -1 for dying, whether by hitting the border of the map or the snake's own tail. I trained my agent for hours, and after 4,000,000 iterations I end up with an agent that almost never leaves the map but crashes into itself very quickly. So it seems to have learned to avoid the border of the map but not its own tail. What could explain this?
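To make the reward scheme concrete, here is a minimal sketch of what is described above. The function name `step_reward` and the two collision flags are hypothetical names used for illustration, not the actual training code.

```python
def step_reward(hit_border: bool, hit_self: bool) -> tuple[float, bool]:
    """Return (reward, terminal) for a single environment step.

    Both kinds of death give the same -1 penalty, as described above;
    every surviving step gives +0.1.
    """
    if hit_border or hit_self:
        return -1.0, True   # episode ends on any collision
    return 0.1, False       # small bonus for staying alive
```

Note that the two kinds of death are indistinguishable in the reward signal, so any difference in how well they are learned has to come from the observations and the training data rather than from the reward itself.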

My hypotheses are:

- I used a replay memory size of 25,000 instead of 50,000 because of an out-of-memory (OOM) error. Is that enough?

- I did not train the agent long enough. But how can I tell?

- The border of the map never changes, so it is easy to learn, but the agent's tail is different in every game. Should I give a larger penalty for crashing into itself so that the agent takes it into account more?
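For a sense of scale on the first point, here is a rough estimate of the replay memory footprint. The frame size (80x80), the stack of 4 frames per state, and the per-pixel byte sizes are assumptions for illustration; the real numbers depend on the actual preprocessing and on how the buffer stores transitions.

```python
import math

def replay_bytes(capacity, frame_shape=(80, 80), stack=4, itemsize=4):
    """Approximate bytes needed to store `capacity` transitions.

    Assumes each transition keeps both the state and the next state,
    each made of `stack` frames of `frame_shape` pixels, with
    `itemsize` bytes per pixel (4 for float32, 1 for uint8).
    """
    per_state = math.prod(frame_shape) * stack * itemsize
    return capacity * 2 * per_state  # state + next state

for capacity in (25_000, 50_000):
    for name, itemsize in (("float32", 4), ("uint8", 1)):
        gb = replay_bytes(capacity, itemsize=itemsize) / 1e9
        print(f"{capacity} transitions as {name}: {gb:.2f} GB")
```

Under these assumptions, 50,000 transitions stored as float32 need about 10 GB, while the same buffer stored as uint8 needs about 2.5 GB. Since the frames are pure black and white, a one-byte-per-pixel layout would lose no information.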

I am asking for help because training my agent takes a lot of time and I am not sure what to try next.

Thanks in advance for any help.