I am building a web app for a client who has ~1M rows of data in their MySQL database. The data looks approximately like this:

Products

+---------+-----------+---------+-------------+---------+----------+

| Id | Name | Size | Colour | 4 Other attributes |

+---------+-----------+---------+-------------+---------+----------+

| 1 | product 1 | S,M,L | red, green | ... other options |

| 2 | product 2 | XL | blue, green | ... other options |

| ................................................................ |

On the Products page in my app, there is:

- Sidebar column that holds filters (6 filter groups from the attributes above with their respective options)

- Main area where users see the product results (50 at a time with paging)

When the user clicks on a filter option (e.g. colour=blue), I a query to update the results in the main area (e.g. all products that have colour=blue).

What query can I perform to fetch the remaining filter options for the other filter groups?

For example, if the user selects colour=blue, that will reduce the number of options in other filter groups such as size (if you look at the sample data above, the only option for size that will remain will be XL).

I can't get the filter options from all results of the colour=blue query, because there are 100k+ of these and I only fetch 50 at a time.

Step 1: All options available.

Sizes

[ ] S

[ ] M

[ ] L

[ ] XL

Colours

[ ] red

[ ] green

[ ] blue

Step 2: User selects "blue", sizes options are updated.

Sizes

[ ] XL

Colours

[ ] red

[ ] green

[x] blue

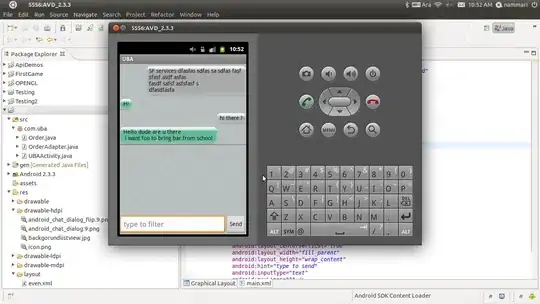

Here is an example from another site doing something similar. (This site also has millions of products listed.)

Step 1: Initial load, no filters selected.

Step 2: One of the filter options is selected. The results on the right update and the filter options in the other filter groups in the sidebar update too.