Unable to get object Pose and draw axis with 4 markers

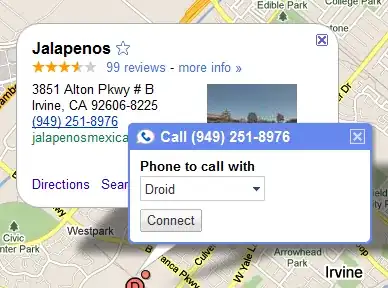

I am trying to estimate an object's pose by following this tutorial for pose estimation. In the video, the author uses a chessboard pattern (24, 17) and mentions in a comment that any object with detectable markers can be used to estimate the pose.

I am using this object, which has only 4 circular markers. I am able to detect the markers and get their (x, y) pixel coordinates (the image points), and I have the matching object points relative to an arbitrary reference. My camera is calibrated (camera matrix and distortion coefficients). Following the tutorial, I am unable to draw the object frame.

This is what I have so far:

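    import cv2
    import numpy as np

    # (x, y) pixel coordinates of the detected markers; another function
    # processes the image and returns these 4 points.
    Points = [(x1, y1), (x2, y2), (x3, y3), (x4, y4)]

    image_points = np.array(Points, dtype=np.float32)
    image_points = np.ascontiguousarray(image_points).reshape((4, 1, 2))

    # Marker positions in the object frame, with Z set to 0.
    object_points = np.array([
        (80, 56, 0),
        (80, 72, 0),
        (57, 72, 0),
        (57, 88, 0)],
        dtype=np.float32).reshape((4, 1, 3))

    # End points of the X, Y and Z axes to project into the image.
    axis = np.float32([[5, 0, 0], [0, 5, 0], [0, 0, -5]]).reshape(-1, 3)

    # mtx and dist come from the camera calibration. The computation of
    # rotation_vector and translation_vector is not shown in this snippet.
    imgpts, jac = cv2.projectPoints(axis, rotation_vector, translation_vector, mtx, dist)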

What am I missing?

This is what I am trying to achieve: Goal

This is the current result: Current

The camera's distance from the object is fixed. I need to track translation and rotation in x and y.

EDIT:

Pixel values of the markers, from top-left to bottom-right:

    Point_A = (1081, 544)
    Point_B = (1090, 782)
    Point_C = (824, 785)  # Preferred origin point
    Point_D = (826, 1050)

Camera parameters:

    mtx:
    [[2.34613584e+03 0.00000000e+00 1.24680613e+03]
     [0.00000000e+00 2.34637787e+03 1.11379469e+03]
     [0.00000000e+00 0.00000000e+00 1.00000000e+00]]

    dist:
    [[-0.05595266 0.07570472 0.00200983 0.00073797 -0.30768105]]