I am trying to produce local explanations for a model using a LIME chart. Before building the model, I encode some of the categorical variables.

Sample Data and code:

    import pandas as pd
    import numpy as np
    import matplotlib.pyplot as plt
    from sklearn.model_selection import train_test_split
    from sklearn.preprocessing import StandardScaler
    from sklearn.linear_model import LogisticRegression
    from sklearn.metrics import confusion_matrix

    df = pd.DataFrame({'customer_id' : np.arange(1,21),
                       'gender' : np.random.choice(['male','female'], 20),
                       'age' : np.random.randint(19,50, 20),
                       'salary' : np.random.randint(20000,95000, 20),
                       'purchased' : np.random.choice([0,1], 20, p = [.8,.2])})

Preprocessing:

    df['gender'] = df['gender'].map({'female' : 0, 'male' : 1})

    df['age'] = df['age'].map(lambda x : 'young' if x<=35 else 'middle aged')
    df['age'] = df['age'].map({'young' : 0, 'middle aged' : 1})

    bins = [0, df['salary'].quantile(q=.33),df['salary'].quantile(q=.66),df['salary'].quantile(q=1)+1]
    labels = ['low salary', 'medium salary', 'high salary']
    df['salary'] = pd.cut(df['salary'], bins = bins, labels=labels)

    from sklearn import preprocessing
    l_encoder={}
    label_encoder = preprocessing.LabelEncoder()
    df['salary']= label_encoder.fit_transform(df['salary'])

    df

        customer_id  gender  age  salary  purchased
    0             1       0    0       1          0
    1             2       0    0       0          0
    2             3       0    1       2          0
    3             4       1    0       0          0
    4             5       1    1       2          0
    5             6       0    1       1          0
    6             7       1    0       2          0
    7             8       1    1       0          0
    8             9       1    1       1          0
    9            10       1    0       0          0
    10           11       0    1       0          0
    11           12       0    0       1          0
    12           13       1    1       1          0
    13           14       1    1       1          0
    14           15       1    1       2          1
    15           16       1    1       0          0
    16           17       1    1       1          0
    17           18       0    0       0          0
    18           19       0    0       2          0
    19           20       0    0       2          0

    # input
    x = df.iloc[:, :-1]

    # output
    y = df.iloc[:, 4]

    from sklearn.model_selection import train_test_split
    X_train, X_test, y_train, y_test = train_test_split(x, y, test_size = 0.20, random_state = 0)

Separating the customer_id column:

    X_train_cust = X_train.pop('customer_id')
    X_test_cust = X_test.pop('customer_id')

Fitting a logistic regression model:

    from sklearn.linear_model import LogisticRegression
    classifier = LogisticRegression(random_state = 0)
    classifier.fit(X_train, y_train)

Building a lime chart:

    import lime
    import lime.lime_tabular

    explainer = lime.lime_tabular.LimeTabularExplainer(np.array(X_train),
                                                       feature_names=X_train.columns,
                                                       verbose=True, mode = 'classification')

    exp = explainer.explain_instance(X_test.iloc[0], classifier.predict_proba)
    exp.as_pyplot_figure()

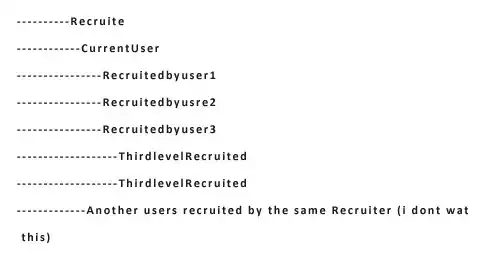

The LIME chart displays the encoded feature values, but I need the original values. For example, where the chart shows gender as 0, I need it to display female.

Could someone please let me know how to fix this?
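
One direction that may work, sketched under assumptions rather than verified against this exact data: `LimeTabularExplainer` accepts `categorical_features` (column indices) and `categorical_names` (a dict mapping each column index to a list of labels, where position `y` holds the label encoded as `y`). The sketch below assumes the column order after `customer_id` is popped is gender, age, salary, and that `LabelEncoder` assigned the salary codes in alphabetical order (so `high salary` is 0, `low salary` is 1, `medium salary` is 2). `decode_row` and `build_explainer` are hypothetical helper names introduced only for illustration.

```python
# Column order after customer_id is popped (assumed): gender, age, salary.
feature_names = ["gender", "age", "salary"]

# Position y in each list is the label that was encoded as integer y.
# The salary order assumes LabelEncoder's alphabetical sorting; in real code,
# list(label_encoder.classes_) is safer than a hand-written list.
categorical_names = {
    0: ["female", "male"],
    1: ["young", "middle aged"],
    2: ["high salary", "low salary", "medium salary"],
}


def decode_row(encoded_row):
    """Map one encoded row back to its original labels (hypothetical helper)."""
    return {
        feature_names[i]: categorical_names[i][int(code)]
        for i, code in enumerate(encoded_row)
    }


def build_explainer(X_train):
    """Create a LIME explainer that is told which columns are categorical."""
    import lime.lime_tabular  # imported lazily; requires the lime package

    return lime.lime_tabular.LimeTabularExplainer(
        X_train.values,
        feature_names=feature_names,
        categorical_features=list(categorical_names),
        categorical_names=categorical_names,
        mode="classification",
    )


# Sanity check of the mapping without needing lime installed.
print(decode_row([0, 1, 2]))
```

With an explainer built this way, `explain_instance(X_test.iloc[0].values, classifier.predict_proba)` should label categorical features by name (for example `gender=female`) instead of by integer code, provided the mapping matches the encoding actually used.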