I have a dataset that has an XML column. Using Code Workbook, I am trying to export each XML as an individual file, with the filename taken from another column.

I filtered the rows I want using the code below:

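```python
def prepare_input(xml_with_debug):
    from pyspark.sql import functions as F

    filter_column = "key"
    filter_value = "test_key"

    # Keep only the rows whose key matches the value of interest
    df_filtered = xml_with_debug.filter(F.col(filter_column) == filter_value)

    # Sample roughly this many rows; guard against an empty filter result
    # and keep the fraction within [0, 1] as sample() requires without replacement
    approx_number_of_rows = 1
    row_count = df_filtered.count()
    sample_fraction = min(1.0, float(approx_number_of_rows) / row_count) if row_count else 0.0
    df_sampled = df_filtered.sample(False, sample_fraction, seed=0)

    # Return only the key (the future filename) and the XML payload, both as strings
    important_columns = ["key", "xml"]
    return df_sampled.select([F.col(c).cast("string").alias(c) for c in important_columns])
```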

It works up to this point. For the last step I tried the following in a Python task, but it complained about the parameters (I have probably set it up wrongly). And even if it worked, I think it would produce a single file.

```python
from transforms.api import transform, Input, Output


@transform(
    output=Output("/path/to/python_csv"),
    my_input=Input("/path/to/input"),
)
def my_compute_function(output, my_input):
    # coalesce(1) forces a single partition, so the CSV is written as one file
    output.write_dataframe(my_input.dataframe().coalesce(1), output_format="csv", options={"header": "true"})
```
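Because `coalesce(1)` with `output_format="csv"` writes the whole dataframe into one CSV, one way to get one file per row is to loop over the rows instead. Below is a minimal sketch of that loop using only the Python standard library. It assumes the rows have already been brought to the driver (for example with PySpark's `DataFrame.collect()` or `toLocalIterator()`) and that a plain local directory is writable. `write_xml_files` and `safe_filename` are hypothetical helpers, and obtaining a writable location on a Foundry output dataset is not shown.

```python
import os
import re
from typing import Iterable, List, Optional, Tuple


def safe_filename(key: Optional[str]) -> str:
    """Hypothetical helper: map a key to a filesystem-safe base name."""
    text = "" if key is None else str(key)
    # Replace anything outside letters, digits, dot, underscore and hyphen
    cleaned = re.sub(r"[^A-Za-z0-9._-]", "_", text).strip("._")
    return cleaned or "unnamed"


def write_xml_files(rows: Iterable[Tuple[Optional[str], Optional[str]]], out_dir: str) -> List[str]:
    """Hypothetical helper: write each (key, xml) row to <out_dir>/<key>.xml."""
    os.makedirs(out_dir, exist_ok=True)
    used = set()
    written = []
    for key, xml in rows:
        base = safe_filename(key)
        # Append _1, _2, ... when two keys map to the same filename
        name, n = base, 1
        while name in used:
            name = f"{base}_{n}"
            n += 1
        used.add(name)
        path = os.path.join(out_dir, name + ".xml")
        with open(path, "w", encoding="utf-8") as fh:
            fh.write(xml or "")
        written.append(path)
    return written
```

PySpark `Row` objects are tuples, so `for key, xml in rows` also works when `rows` comes from `collect()` on a dataframe with exactly the `key` and `xml` columns.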

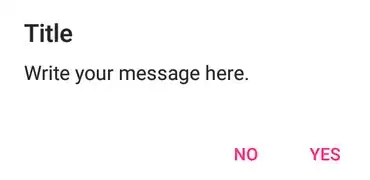

I am trying to set it up in GUI like below

My question, I guess, is: what code should go in the last Python task (write_file), after prepare_input, so that I can extract the individual XMLs (and, if possible, zip them into a single file for download)?
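For the zip part, here is a minimal sketch using only the Python standard library. Each `(key, xml)` row becomes an entry `<key>.xml` inside one archive, written to any binary file-like object. It assumes the selected rows fit in driver memory and are iterated with something like `toLocalIterator()` on the dataframe returned by prepare_input. `zip_xmls` and `safe_filename` are hypothetical helpers, and how the archive's file handle is opened on a Foundry output dataset is left out as an assumption.

```python
import io
import re
import zipfile
from typing import BinaryIO, Iterable, Optional, Tuple


def safe_filename(key: Optional[str]) -> str:
    """Hypothetical helper: map a key to a safe entry name inside the zip."""
    text = "" if key is None else str(key)
    cleaned = re.sub(r"[^A-Za-z0-9._-]", "_", text).strip("._")
    return cleaned or "unnamed"


def zip_xmls(rows: Iterable[Tuple[Optional[str], Optional[str]]], fileobj: BinaryIO) -> int:
    """Hypothetical helper: write every (key, xml) row as <key>.xml into one zip; return the entry count."""
    used = set()
    with zipfile.ZipFile(fileobj, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        for key, xml in rows:
            base = safe_filename(key)
            # Disambiguate duplicate keys so no entry is overwritten
            name, n = base, 1
            while name in used:
                name = f"{base}_{n}"
                n += 1
            used.add(name)
            zf.writestr(name + ".xml", xml or "")
    return len(used)


# Usage with in-memory rows; the BytesIO stays open after ZipFile closes
buf = io.BytesIO()
zip_xmls([("test_key", "<root/>")], buf)
archive_bytes = buf.getvalue()
```

Passing an `io.BytesIO()` keeps the archive in memory, which is convenient for testing; passing a file opened in binary write mode (`"wb"`) writes it straight to that file instead.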