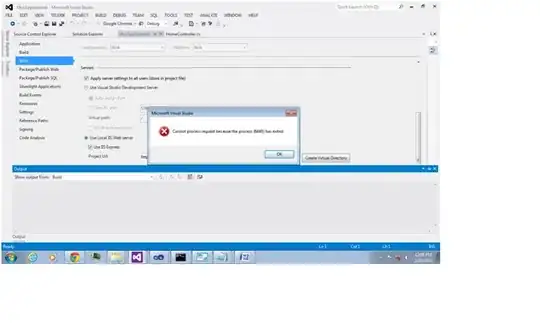

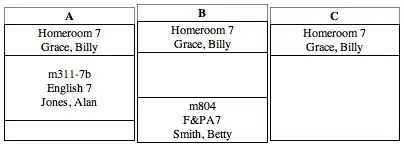

I have a very simple toy pipeline: a PySpark notebook that returns an exit value, a Set variable activity that stores that exit value, and a second notebook that is parameterized with the variable. It looks like the below.

I successfully set the variable, but I cannot seem to read this variable into the input_values notebook. The notebook looks like the below, also quite simple.
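In text form, the input_values notebook amounts to roughly the following sketch. The empty default for `msg` and the helper name `build_exit_value` are illustrative assumptions rather than a copy of the real notebook, and the exit call assumes the `mssparkutils` helper that ships with the Spark runtime; outside that runtime the sketch just prints the value.

```python
# Parameters cell: this cell is marked as the notebook's parameter cell.
# The default below is an assumption; it is used whenever nothing overrides msg.
msg = ""


def build_exit_value(message: str) -> str:
    """Compose the string the notebook hands back to the pipeline."""
    return f"hello {message}"


exit_value = build_exit_value(msg)

# The notebook utilities only exist inside the Spark notebook runtime,
# so fall back to printing when the import is unavailable.
try:
    from notebookutils import mssparkutils
except ImportError:
    mssparkutils = None

if mssparkutils is not None:
    # Ends the notebook run and exposes exit_value to the calling pipeline.
    mssparkutils.notebook.exit(exit_value)
else:
    print(exit_value)
```

If `msg` is never overridden, the default stays in place and the exit value contains nothing after "hello".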

My pipeline parameters then look like the below. However, my exit value just comes out as "hello ", with nothing after it.

I have also tried accessing the variable directly in the notebook (without parameterization) with `f"hello {@variables("my_message")}"`, but this resulted in an error. When I look for documentation on this type of behavior, it generally points to calling one notebook from another (caller/callee notebooks), such as the examples from this documentation. That seems backwards for creating a DAG, so I do not want to do it. What I want to do seems possible, and I saw an example of something similar here, but I can't get it to work.
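As far as I can tell, the direct attempt fails because `@variables(...)` is pipeline expression syntax, which the Python interpreter in the notebook does not understand. The check below is a minimal sketch of that; the helper name `is_valid_python` is made up for illustration, and the exact error text shown in the notebook may differ.

```python
# The attempted line, kept as source text so it can be compiled on demand.
attempted = '''f"hello {@variables("my_message")}"'''

# What the notebook can do instead: read a plain Python name that is
# filled in from outside through the parameter cell.
parameterized = 'f"hello {msg}"'


def is_valid_python(source: str) -> bool:
    """Return True if the source text compiles as a Python expression."""
    try:
        compile(source, "<notebook cell>", "eval")
        return True
    except SyntaxError:
        return False


print(is_valid_python(attempted))      # False
print(is_valid_python(parameterized))  # True
```

So the value has to reach the notebook as a parameter; the notebook code itself cannot evaluate a pipeline expression.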

Can anyone point me to documentation that would explain how to accomplish what I want or is there something in the above that I am missing?

Edit:

I tried to set the msg parameter to `@activity('exit_values').output.status.Output.result.exitValue`, which is the expression I successfully used to set the my_message variable, and that did not work either.

Success

I don't feel very smart, but I will leave this up for anyone else who might be in the same position. I was setting my msg parameter on the pipeline, not on the individual notebook. It ran successfully once I set the parameter on the notebook.