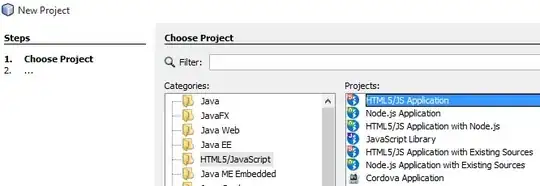

I'm running this in TensorFlow 2.7.2. I found this method for training an actor-critic algorithm on the CartPole task. I wanted to see if and how learning could occur after overfitting it on some data, so I want to train until the losses have not decreased for 10 episodes straight. However, I ran into problems because the losses seem to go to minus infinity.
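The stopping rule "train until the loss has not decreased for 10 episodes straight" can be written as a patience check over the recorded per-episode losses. Below is a minimal plain-Python sketch. The helper name `should_stop` is hypothetical, and it reads "not decreased" as "no new minimum within the last `patience` episodes", which is an assumption.

```python
from typing import List


def should_stop(losses: List[float], patience: int = 10) -> bool:
    """Return True when none of the last `patience` losses beat the best earlier loss.

    Hypothetical helper: "not decreased" is read as "no new minimum".
    """
    if len(losses) <= patience:
        return False  # not enough history yet
    best_before = min(losses[:-patience])
    return min(losses[-patience:]) >= best_before


# A loss that keeps falling never triggers the check; a plateau does.
print(should_stop([5.0, 4.0, 3.0, 2.0, 1.0, 0.0, -1.0, -2.0, -3.0, -4.0, -5.0, -6.0]))
print(should_stop([1.0] + [2.0] * 10))
```

If the loss heads towards minus infinity, this check never fires, because every episode sets a new minimum.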

I made a simpler version of the problem, which you can see here. This is also the exact code that produced these problems. I already tried making the learning rate smaller and even letting it decay exponentially. The problem is that the `compute_loss` function:

```python
num_actions = env.action_space.n  # 2
num_hidden_units = 128

model = ActorCritic(num_actions, num_hidden_units)

huber_loss = tf.keras.losses.Huber(reduction=tf.keras.losses.Reduction.SUM)


def compute_loss(
        action_probs: tf.Tensor,
        values: tf.Tensor,
        returns: tf.Tensor) -> tf.Tensor:
    """Computes the combined Actor-Critic loss."""
    # print(returns.shape, type(returns), values.shape, type(values))
    advantage = returns - values

    action_log_probs = tf.math.log(action_probs)
    actor_loss = -tf.math.reduce_sum(action_log_probs * advantage)

    critic_loss = huber_loss(values, returns)

    critic_losslist.append(round(critic_loss.numpy().item(), 4))
    actor_losseslist.append(round(actor_loss.numpy().item(), 4))
    advantageslist.append(round(tf.math.reduce_sum(advantage).numpy().item(), 4))
    valueslist.append(round(tf.math.reduce_sum(values).numpy().item(), 4))
    print(len(valueslist), len(advantageslist))

    with open("vals5.txt", "a") as f:
        f.write("\nvalues: " + str(values))
        f.write("\nsum values: " + str(tf.math.reduce_sum(values)))
        f.write("\nadvantage: " + str(advantage))
        f.write("\nsum advantage: " + str(tf.math.reduce_sum(advantage)))
        f.write("\nactor loss: " + str(actor_loss))
        f.write("\nreturns = " + str(returns))
        f.write("\ncritic loss = " + str(critic_loss))
        f.write("\ntotal: " + str(actor_loss + critic_loss))

    return actor_loss + critic_loss
```

returns a smaller loss on every new call: the actor losses keep getting smaller, towards negative infinity. You'd think that, since the values become smaller and smaller and are therefore no longer close to the returns, the critic loss would increase more than the actor loss decreases, which would push the total loss back towards 0. But that is not what happens.

As you can see, the losses keep decreasing because of the actor losses.

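To make the expectation above concrete, here is a small plain-Python sketch of one time step's contribution to each term. It assumes the Keras default Huber `delta` of 1.0 and an illustrative advantage held fixed at -3 (the critic value sits 3 above the return); both numbers are chosen only for illustration. Beyond `delta` the Huber loss grows only linearly in the error, while `-log(p) * advantage` has no lower bound as the probability `p` goes to 0.

```python
import math


def huber(error: float, delta: float = 1.0) -> float:
    """Huber loss for one error term: quadratic up to delta, linear beyond it."""
    a = abs(error)
    if a <= delta:
        return 0.5 * a * a
    return delta * (a - 0.5 * delta)


def actor_term(prob: float, advantage: float) -> float:
    """One step of the actor loss used in compute_loss: -log(p) * advantage."""
    return -math.log(prob) * advantage


advantage = -3.0  # returns - values (illustrative value)
for prob in (0.5, 1e-2, 1e-4, 1e-8):
    a_loss = actor_term(prob, advantage)
    c_loss = huber(advantage)
    print(f"p={prob:g}  actor={a_loss:.2f}  critic={c_loss:.2f}  total={a_loss + c_loss:.2f}")
```

With the critic error held fixed, the critic term stays at 2.5 while the actor term keeps falling as `p` shrinks.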

I added the rest of the code here in case someone cannot open the link to the code.

Imports:

```python
import collections
import gym
import numpy as np
import statistics
import tensorflow as tf
import tqdm

from tensorflow import keras
from tensorflow.keras import optimizers
from tensorflow.keras.optimizers import schedules
from matplotlib import pyplot as plt
from tensorflow.keras import layers
from typing import Any, List, Sequence, Tuple
from tensorflow.python.ops.numpy_ops import np_config

tf.config.run_functions_eagerly(True)

transitions = 0

# Create the environment
env = gym.make("CartPole-v1")

# Set seed for experiment reproducibility
seed = 42
tf.random.set_seed(seed)
np.random.seed(seed)

# Small epsilon value for stabilizing division operations
eps = np.finfo(np.float32).eps.item()
```

Inputs:

```python
values = [[2.0],
          [-1], [-1], [-1], [-1], [-1], [-1], [-1], [-1], [-1], [-1],
          [-1], [-1], [-1], [-1], [-1], [-1], [-1], [-1], [-1], [-1],
          [-1], [-1], [-1], [-1], [-1], [-1], [-1], [-1], [-1]]
values = tf.convert_to_tensor(values)

returns = [[2.0],
           [-1], [-1], [-1], [-1], [-1], [-1], [-1], [-1], [-1], [-1],
           [-1], [-1], [-1], [-1], [-1], [-1], [-1], [-1], [-1], [-1],
           [-1], [-1], [-1], [-1], [-1], [-1], [-1], [-1], [-1]]
returns = tf.convert_to_tensor(returns)
old_returns = returns

states = [[-0.022757165133953094, 0.040714748203754425, 0.013217215426266193, -0.028028316795825958],
          [-0.021942870691418648, 0.23564468324184418, 0.012656649574637413, -0.31651192903518677],
          [-0.017229976132512093, 0.04034476354718208, 0.006326410919427872, -0.019864562898874283],
          [-0.01642308197915554, 0.23537541925907135, 0.005929119419306517, -0.3105447292327881],
          [-0.011715573258697987, 0.040169499814510345, -0.0002817753120325506, -0.015997853130102158],
          [-0.010912182740867138, -0.1549484133720398, -0.0006017323466949165, 0.27659615874290466],
          [-0.014011151157319546, 0.040182121098041534, 0.004930190742015839, -0.016276495531201363],
          [-0.013207509182393551, -0.1550101935863495, 0.004604660905897617, 0.27795788645744324],
          [-0.016307713463902473, 0.04004577174782753, 0.01016381848603487, -0.013269192539155483],
          [-0.015506797470152378, -0.15522046387195587, 0.009898434393107891, 0.2826031446456909],
          [-0.018611205741763115, -0.35048219561576843, 0.015550496987998486, 0.5783914923667908],
          [-0.025620849803090096, -0.15558159351348877, 0.027118327096104622, 0.29064759612083435],
          [-0.028732482343912125, 0.0391433946788311, 0.03293127939105034, 0.006639310624450445],
          [-0.027949614450335503, 0.2337779700756073, 0.033064063638448715, -0.2754742205142975],
          [-0.023274054750800133, 0.4284129738807678, 0.027554580941796303, -0.5575480461120605],
          [-0.014705795794725418, 0.2329152524471283, 0.016403619199991226, -0.2563127875328064],
          [-0.01004749070852995, 0.42779919505119324, 0.011277362704277039, -0.5437769293785095],
          [-0.001491506234742701, 0.6227608919143677, 0.0004018239851575345, -0.8328853845596313],
          [0.010963710956275463, 0.4276334345340729, -0.016255883499979973, -0.5400761365890503],
          [0.01951638050377369, 0.6229800581932068, -0.027057405561208725, -0.8378363847732544],
          [0.03197598084807396, 0.4282378852367401, -0.04381413385272026, -0.5537838935852051],
          [0.04054073989391327, 0.6239467859268188, -0.05488981306552887, -0.8599427938461304],
          [0.053019676357507706, 0.8197716474533081, -0.07208866626024246, -1.1693671941757202],
          [0.06941510736942291, 0.6256576180458069, -0.09547601640224457, -0.9001280665397644],
          [0.08192826062440872, 0.8219346404075623, -0.11347857117652893, -1.2212300300598145],
          [0.09836695343255997, 0.6284429430961609, -0.13790316879749298, -0.9661504626274109],
          [0.11093581467866898, 0.43541547656059265, -0.15722618997097015, -0.7197731733322144],
          [0.11964412033557892, 0.24277785420417786, -0.1716216504573822, -0.4804151654243469],
          [0.12449967861175537, 0.4398540258407593, -0.18122994899749756, -0.8218960165977478],
          [0.13329675793647766, 0.636931300163269, -0.19766786694526672, -1.1656609773635864]]

actions = [1, 0, 1, 0, 0, 1, 0, 1, 0, 0, 1, 1, 1, 1, 0, 1, 1, 0, 1, 0, 1, 1, 0, 1, 0, 0, 0, 1, 1, 0]

print(len(returns))
```

The other functions:

```python
class ActorCritic(tf.keras.Model):
    """Combined actor-critic network."""

    def __init__(
            self,
            num_actions: int,
            num_hidden_units: int):
        """Initialize."""
        super().__init__()

        self.common = layers.Dense(num_hidden_units, activation="relu")
        self.actor = layers.Dense(num_actions)
        self.critic = layers.Dense(1)

    def call(self, inputs: tf.Tensor) -> Tuple[tf.Tensor, tf.Tensor]:
        x = self.common(inputs)
        return self.actor(x), self.critic(x)


num_actions = env.action_space.n  # 2
num_hidden_units = 128

model = ActorCritic(num_actions, num_hidden_units)

huber_loss = tf.keras.losses.Huber(reduction=tf.keras.losses.Reduction.SUM)


def run_episode_overfit_use_actions(
        model: tf.keras.Model,
        max_steps: int,
        actions: list,
        states: list
) -> Tuple[tf.Tensor, tf.Tensor]:
    """Runs a single episode to collect training data."""

    action_probs = tf.TensorArray(dtype=tf.float32, size=0, dynamic_size=True)
    values = tf.TensorArray(dtype=tf.float32, size=0, dynamic_size=True)

    # Get the initial state.
    initial_state_shape = np.array(states[0]).shape
    state = states[0]
    count = 0

    for t in tf.range(max_steps):
        state = states[t]

        # Convert state into a batched tensor (batch size = 1)
        state = tf.expand_dims(state, 0)

        # Run the model to get action probabilities and critic value
        action_logits_t, value = model(state)

        # Sample next action from the action probability distribution
        # action = tf.random.categorical(action_logits_t, 1)[0, 0]
        action_probs_t = tf.nn.softmax(action_logits_t)

        # Store critic values
        values = values.write(t, tf.squeeze(value))

        # Store the probability of the recorded action (the log is taken in compute_loss)
        action = tf.constant(actions[t], dtype=tf.int64, shape=())
        action_probs = action_probs.write(t, action_probs_t[0, action])

        # Apply action to the environment to get next state and reward
        # note we aren't setting the

        # if the episode is done next time, then break
        if count >= len(actions) - 1:
            break
        count += 1

    action_probs = action_probs.stack()
    values = values.stack()

    return action_probs, values
```
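Because the sampling line is commented out, the loop replays the recorded `actions` and stores, for each step, the softmax probability the model assigns to that action. A plain-Python sketch of the same bookkeeping follows. The helper names `softmax` and `chosen_action_probs` are hypothetical, and the made-up logits stand in for the model output.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax over one row of logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def chosen_action_probs(logits_per_step: Sequence[Sequence[float]],
                        actions: Sequence[int]) -> List[float]:
    """Probability of the recorded action at each step, like action_probs_t[0, action]."""
    return [softmax(logits)[a] for logits, a in zip(logits_per_step, actions)]


# Made-up logits for three steps of a two-action policy.
probs = chosen_action_probs([[0.0, 0.0], [2.0, 0.0], [0.0, 3.0]], [1, 0, 0])
log_probs = [math.log(p) for p in probs]  # the quantity compute_loss multiplies by the advantage
print(probs, log_probs)
```

Every probability is at most 1, so each log-probability is at most 0, and it goes to minus infinity as the probability of a recorded action approaches 0.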

And the main loop that makes most of the function calls:

```python
advantageslist = []
actor_losseslist = []
critic_losslist = []
losseslist = []
valueslist = []

lr_schedule = keras.optimizers.schedules.ExponentialDecay(
    initial_learning_rate=0.0005,
    decay_steps=100,
    decay_rate=0.8)

optimizer = tf.keras.optimizers.Adam(learning_rate=0.001)

loss2 = 0
while loss2 > -300.0:
    with tf.GradientTape() as tape:
        action_probs, values = run_episode_overfit_use_actions(
            model, 1000, actions, states)
        returns = tf.reshape(old_returns, (old_returns.shape[0], 1))
        action_probs, values = [
            tf.expand_dims(x, 1) for x in [action_probs, values]]
        loss = compute_loss(action_probs, values, returns)
        loss2 = round(loss.numpy().item(), 4)  # convert the loss into a normal Python float
        losseslist.append(loss2)

    grads = tape.gradient(loss, model.trainable_variables)
    optimizer.apply_gradients(zip(grads, model.trainable_variables))

import matplotlib.pyplot as plt

ls = list(range(len(losseslist)))

plt.plot(ls, valueslist)
plt.title("values")
plt.show()

plt.plot(ls, losseslist)
plt.title("losses")
plt.show()

plt.plot(ls, advantageslist)
plt.title("advantages")
plt.show()

plt.plot(ls, actor_losseslist)
plt.title("actor losses")
plt.show()

plt.plot(ls, critic_losslist)
plt.title("critic losses")
plt.show()
```
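For reference, Keras documents `ExponentialDecay` as computing `initial_learning_rate * decay_rate ** (step / decay_steps)`, with integer division in the exponent when `staircase=True`. The plain-Python sketch below evaluates that formula with the parameters used for `lr_schedule` in the loop. The function name `exponential_decay` is hypothetical. In the loop, the optimizer is built with the fixed `learning_rate=0.001`, so the schedule only takes effect if it is passed in, e.g. `tf.keras.optimizers.Adam(learning_rate=lr_schedule)`.

```python
def exponential_decay(step: int,
                      initial_learning_rate: float = 0.0005,
                      decay_steps: int = 100,
                      decay_rate: float = 0.8,
                      staircase: bool = False) -> float:
    """Learning rate at `step`, following the formula documented for ExponentialDecay."""
    exponent = step // decay_steps if staircase else step / decay_steps
    return initial_learning_rate * decay_rate ** exponent


# The learning rate shrinks by a factor of 0.8 every 100 optimizer steps.
for step in (0, 100, 500, 1000):
    print(step, exponential_decay(step))
```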

This is very strange and not at all what I expected. I hope someone knows what exactly is happening here. Thanks in advance!