With the announcement of change data capture (CDC) in ADF come various questions. I tried it hands-on and came across several scenarios.

- I implemented CDC for multiple tables from source to target, where the source was an on-premises SQL Server and the sink was Azure SQL Database.

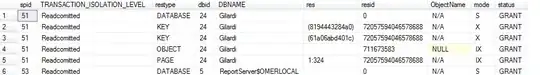

- In the Monitor tab, I tried to interpret the "changes read" and "changes written" counts, but I don't understand how they are counted for INSERT, UPDATE, and DELETE operations.

- If I insert a single row into the source table, the Monitor tab shows 4 changes read.

- When I perform a DELETE operation, that change is neither read nor written.

Overall, I'm having difficulty understanding how the change counts are calculated. Can anybody explain how these counts are computed?

Please find the screenshot below: https://i.stack.imgur.com/iLtT5.png