I am currently using an Azure Durable Function (Orchestrator) to orchestrate a process that involves creating a job in Azure Databricks. The Durable Function creates the job using the REST API of Azure Databricks and provides a callback URL. Once the job is created, the orchestrator waits indefinitely for the external event (callback) to be triggered, indicating the completion of the job (callback pattern). The job in Azure Databricks is wrapped in a try:except block to ensure that a status (success/failure) is reported back to the orchestrator no matter the outcome.
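To make the callback pattern concrete, here is a minimal sketch of what the wrapper inside the Databricks job could look like. The names `run_with_callback` and `post_json`, and the payload shape, are assumptions for illustration and not my exact code.

```python
import json
import urllib.request


def post_json(url, payload):
    """Send the payload as a JSON POST request; raises on HTTP errors."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return response.status


def run_with_callback(job, callback_url, post=post_json):
    """Run `job` and report its outcome to `callback_url` either way.

    `job` is any zero-argument callable; the payload keys are hypothetical.
    """
    try:
        job()
        payload = {"status": "success"}
    except Exception as exc:  # any error raised by the job code itself
        payload = {"status": "failure", "error": str(exc)}
    # Reached on success and on failure, but only if this process is still alive.
    post(callback_url, payload)
    return payload
```

This only covers failures that surface as Python exceptions inside the job. If the run itself dies before or while this code executes, nothing is posted.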

However, I am concerned about the scenario where the job status turns to Internal Error and the try:except code that reports the status is never executed, leaving the orchestrator waiting indefinitely. To ensure reliability, I am considering several solutions:

- Setting a timeout on the orchestrator

- Polling: Checking the status of the job every x minutes

- Using an event-driven architecture by writing an event to a topic (e.g. Azure Event Grid) and having a separate service subscribe to it
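The first two options could be combined roughly as follows. This is a sketch under assumptions: `fetch_run` is a hypothetical callable that returns the JSON body of a Jobs 2.1 `runs/get` call as a dict, and the interval and timeout values are placeholders.

```python
import time

# Life cycle states after which a Jobs 2.1 run no longer changes.
TERMINAL_STATES = {"TERMINATED", "SKIPPED", "INTERNAL_ERROR"}


def is_finished(run):
    """Return True once the run has reached a terminal life cycle state."""
    return run.get("state", {}).get("life_cycle_state") in TERMINAL_STATES


def wait_for_run(fetch_run, interval_s=60.0, timeout_s=3600.0,
                 sleep=time.sleep, clock=time.monotonic):
    """Poll `fetch_run` every `interval_s` seconds until the run finishes.

    Raises TimeoutError if the run is still unfinished after `timeout_s`.
    """
    deadline = clock() + timeout_s
    while True:
        run = fetch_run()
        if is_finished(run):
            return run
        if clock() >= deadline:
            raise TimeoutError("run did not finish within the timeout")
        sleep(interval_s)
```

Inside a Durable Functions orchestrator the wait would have to be a durable timer rather than `time.sleep`, so this loop shows the logic only, not orchestrator-safe code.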

My question: can I send events to a topic (Azure Event Grid) when the Databricks job completes (succeeds, fails, errors, every possible outcome) so that the orchestrator is notified and can take appropriate action? According to the REST API Jobs 2.1 docs, I can get notified via email or specify a webhook on start, success and failure (Preview Feature). Can I enter the topic URL of Event Grid here so that Databricks writes events to it? The docs on managing notification destinations do not make this clear to me. Is there another way in Azure to achieve the same result?

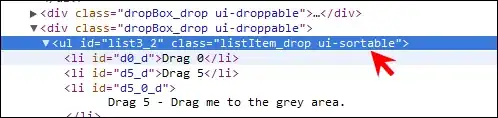

Edit: I've looked into the documentation to find how to manage notification destinations and created a new system notification:

However, when testing the connection:

401: Request must contain one of the following authorization signature: aeg-sas-token, aeg-sas-key. Report '12345678-7678-4ab9-b90f-37aabf1b10b8:7:1/23/2023 6:17:09 PM (UTC)' to our forums for assistance or raise a support ticket.

The same would happen if there was a POST request from another client (e.g. Postman). Now the question is: How can I provide a token so that Databricks can write events to a topic?
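For comparison, this is roughly what a client that does hold the topic key would send. It is a sketch assuming a custom topic that accepts the Event Grid event schema; the event type, subject, and `data` fields are made up for illustration, and the function only builds the request without sending it.

```python
import json
import uuid
import urllib.request
from datetime import datetime, timezone


def build_event_grid_request(topic_endpoint, access_key, run_id, status):
    """Build a POST request carrying one event plus the aeg-sas-key header."""
    event = {
        "id": str(uuid.uuid4()),
        "eventType": "Databricks.JobRun.Completed",  # hypothetical event type
        "subject": f"databricks/runs/{run_id}",      # hypothetical subject
        "eventTime": datetime.now(timezone.utc).isoformat(),
        "data": {"runId": run_id, "status": status},  # hypothetical payload
        "dataVersion": "1.0",
    }
    return urllib.request.Request(
        topic_endpoint,
        data=json.dumps([event]).encode("utf-8"),  # the body is a JSON array of events
        headers={
            "Content-Type": "application/json",
            "aeg-sas-key": access_key,  # one of the two headers named in the 401 message
        },
        method="POST",
    )
```

Passing the result to `urllib.request.urlopen` would send it. The open point is that the Databricks webhook form gives me no obvious place to set such a header.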

I've also posted this question here: Webhook Security (Bearer Auth)