We are having occasional issues with a legacy Java application consuming too much memory (and no, there is no memory leak involved here). The application runs on a VM that has been assigned 16 GB of memory, 14 GB of which are assigned to the application.
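
For reference, here is a small sketch of the budget arithmetic behind these numbers. It assumes that the 14 GB are the maximum heap (`-Xmx14g`), which leaves only about 2 GB for the OS and for everything the JVM allocates outside the heap. `MemoryBudget` and `headroomBytes` are just illustrative names.

```java
// Illustrative only: how much of the VM's RAM is left once the
// maximum heap is accounted for (assumes -Xmx14g on a 16 GB VM).
public class MemoryBudget {

    static final long GIB = 1024L * 1024 * 1024;

    // RAM not covered by the maximum heap size: this must hold the OS,
    // metaspace, thread stacks, code cache, GC data structures, etc.
    static long headroomBytes(long vmRamBytes, long maxHeapBytes) {
        return vmRamBytes - maxHeapBytes;
    }

    public static void main(String[] args) {
        long vmRam = 16 * GIB;   // assumed: RAM assigned to the VM
        long maxHeap = 14 * GIB; // assumed: the application's -Xmx14g
        System.out.println("Headroom: " + headroomBytes(vmRam, maxHeap) / GIB + " GiB");

        // Inside the application, Runtime.maxMemory() reports the effective
        // heap ceiling (it can be slightly below the -Xmx value).
        System.out.println("This JVM's max heap: "
                + Runtime.getRuntime().maxMemory() / (1024 * 1024) + " MiB");
    }
}
```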

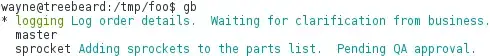

Using the "Melody" monitor we observe e.g. this memory (heap) usage behavior:

The low part on the left (at around 2 GB) is a lazy Sunday, when the application essentially idled. The right-hand side is Monday morning, when some activity started and memory consumption reached about 11 GB, which looks fairly normal and uncritical for our application.

However, the physical memory consumption view looks like this:

Here the machine/VM (which runs only our application) "idles" at almost 12 GB. When consumption rises and the machine hits the 16 GB limit, it starts to swap (visible in another view not shown here), and after about 15 minutes at >100% memory consumption the system's out-of-memory killer strikes and terminates the process. The blank section that follows shows the period during which the application was down and we were analyzing a couple of things (including taking these screenshots) before we restarted it.
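
To relate the two charts, the JVM's own view of heap and non-heap memory can be printed with the standard `java.lang.management` API. This is a minimal sketch that assumes the heap chart plots roughly the *used* heap, while the physical footprint follows the *committed* heap plus non-heap memory. That is our assumption, not something the monitor confirms. `HeapFootprint` and `describe` are hypothetical names.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.MemoryMXBean;
import java.lang.management.MemoryUsage;

public class HeapFootprint {

    private static final long MIB = 1024L * 1024;

    // Formats one MemoryUsage snapshot; getMax() returns -1 when undefined.
    static String describe(String label, MemoryUsage u) {
        return String.format("%s: used=%d MiB, committed=%d MiB, max=%s",
                label, u.getUsed() / MIB, u.getCommitted() / MIB,
                u.getMax() < 0 ? "undefined" : (u.getMax() / MIB) + " MiB");
    }

    public static void main(String[] args) {
        MemoryMXBean memory = ManagementFactory.getMemoryMXBean();
        // "used" is what is currently occupied; "committed" is what the JVM
        // has claimed from the OS and may keep even after "used" drops again.
        System.out.println(describe("heap", memory.getHeapMemoryUsage()));
        // Metaspace, code cache, etc. also count toward the process footprint.
        System.out.println(describe("non-heap", memory.getNonHeapMemoryUsage()));
    }
}
```

If, during an idle phase like the Sunday above, "committed" stays near the maximum while "used" is around 2 GB, that gap would be consistent with the physical memory view.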

My question is: can one tweak the Java VM (v1.8.0_121 in our case) to return (part of) the physical memory that it is apparently hogging?

My gut feeling is that if the VM had returned at least part of the RAM it no longer needs (at least judging from the first diagram), the system would have had more margin for the following period of high load.

In the example shown, if physical memory consumption had dropped from ~12 GB to, say, 6 GB or less during the day-long idle phase, the peak on the right would probably not have exceeded the 16 GB limit and the application would probably not have crashed.

Any ideas or suggestions? Which JVM options are suitable to force the return of unused memory, at least after a longer period of low memory usage like the one we see here?

We are currently using these VM options related to memory tuning:

    -server -XX:+UseParallelGC -XX:GCTimeRatio=40
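
To check which of these settings (and a few related ones) are actually in effect in the running JVM, here is a sketch using the HotSpot-specific `com.sun.management.HotSpotDiagnosticMXBean`. `MinHeapFreeRatio`/`MaxHeapFreeRatio` are included because they are HotSpot's documented thresholds for growing and shrinking the heap after a GC. We have not verified whether the parallel collector in 8u121 honors them with our settings. `GcFlags` and `lookup` are illustrative names.

```java
import com.sun.management.HotSpotDiagnosticMXBean;
import com.sun.management.VMOption;
import java.lang.management.ManagementFactory;
import java.util.Arrays;
import java.util.List;

public class GcFlags {

    // Flags from our command line plus the heap-free ratios that HotSpot
    // uses to decide when to expand or shrink the committed heap.
    static final List<String> FLAGS = Arrays.asList(
            "UseParallelGC", "GCTimeRatio", "MaxHeapSize",
            "MinHeapFreeRatio", "MaxHeapFreeRatio");

    // Looks up one flag; getVMOption throws IllegalArgumentException
    // if this JVM does not know a flag of that name.
    static String lookup(HotSpotDiagnosticMXBean hs, String name) {
        try {
            VMOption o = hs.getVMOption(name);
            return name + " = " + o.getValue() + " (origin: " + o.getOrigin()
                    + ", writeable at runtime: " + o.isWriteable() + ")";
        } catch (IllegalArgumentException e) {
            return name + " is not known to this JVM";
        }
    }

    public static void main(String[] args) {
        HotSpotDiagnosticMXBean hs =
                ManagementFactory.getPlatformMXBean(HotSpotDiagnosticMXBean.class);
        for (String name : FLAGS) {
            System.out.println(lookup(hs, name));
        }
    }
}
```

`isWriteable()` reports whether a flag is manageable, i.e. whether it could be changed on the running JVM via `setVMOption` without a restart.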