I intend to create a data pipeline in Airflow that reads data from a source, performs multiple operations on the data in sequence, and outputs a file.

Example 1 payload to the REST API:

{ 'inputFileLocation': '[input s3 key]' , 'outputFileLocation': '[output s3 key]',

'args': { 1: {'operation': 'add', 'column': 'A', 'param': 5},

2: {'operation': 'log', 'column': 'B', 'param': 5, 'base': 10},

3: {'operation': 'factorial', 'column': 'C'}

}

}

Example 2 payload to the REST API:

{ 'inputFileLocation': '[input s3 key]' , 'outputFileLocation': '[output s3 key]',

'args': { 1: {'operation': 'factorial', 'column': 'A'},

2: {'operation': 'log', 'column': 'B', 'param': 5, 'base': 10}

}

}
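To make the intended semantics concrete, here is a minimal sketch of how the steps in 'args' could be applied in key order. The handler functions and `apply_steps` are hypothetical names, a single dict stands in for the file contents, and the meaning of 'param' for the 'log' operation is not defined by the payload, so the sketch ignores it (an assumption).

```python
import math

# Hypothetical handlers, one per 'operation' value seen in the payloads.
def op_add(value, step):
    return value + step["param"]

def op_log(value, step):
    # Assumption: 'base' selects the logarithm base; 'param' is ignored here
    # because the payload does not say what it means for 'log'.
    return math.log(value, step.get("base", math.e))

def op_factorial(value, step):
    return math.factorial(int(value))

OPERATIONS = {"add": op_add, "log": op_log, "factorial": op_factorial}

def apply_steps(row, args):
    """Apply each step to a copy of `row`, in ascending order of its key."""
    result = dict(row)
    for key in sorted(args):
        step = args[key]
        handler = OPERATIONS[step["operation"]]
        result[step["column"]] = handler(result[step["column"]], step)
    return result

# 'args' from Example 1; the input row is made-up sample data.
payload_args = {
    1: {"operation": "add", "column": "A", "param": 5},
    2: {"operation": "log", "column": "B", "param": 5, "base": 10},
    3: {"operation": "factorial", "column": "C"},
}
print(apply_steps({"A": 1, "B": 100.0, "C": 4}, payload_args))
```

The number and order of steps come entirely from the payload, which is why a fixed DAG structure is hard to define up front.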

Probable Solutions:

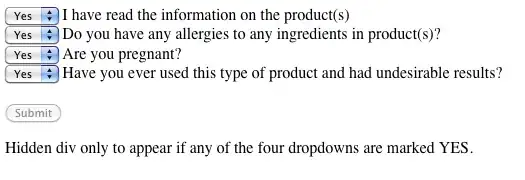

Dynamic tasks created based on the size of 'args': the limitation of this design is that old DAG runs get updated to show the most recent DAG structure. This makes it complicated to debug historical runs when something has failed.
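For reference, the dynamic-task design could look roughly like the sketch below. It assumes Airflow 2.4 or later (for the `schedule` argument) and that 'args' is available when the DAG file is parsed; `build_task_specs`, `run_step`, and `build_dag` are hypothetical names, and `run_step` is only a placeholder for the real read/transform/write logic.

```python
from datetime import datetime

def build_task_specs(args):
    """Return (task_id, step) pairs in execution order (ascending key)."""
    return [
        (f"step_{key}_{args[key]['operation']}", args[key])
        for key in sorted(args)
    ]

def run_step(step, **_):
    # Placeholder: real code would load the data, apply the step, and save it.
    print(f"applying {step['operation']} to column {step['column']}")

def build_dag(dag_id, args):
    # Imported lazily so build_task_specs can be used without Airflow installed.
    from airflow import DAG
    from airflow.operators.python import PythonOperator

    with DAG(
        dag_id=dag_id,
        start_date=datetime(2024, 1, 1),
        schedule=None,  # triggered externally, e.g. through the REST API
        catchup=False,
    ) as dag:
        previous = None
        for task_id, step in build_task_specs(args):
            task = PythonOperator(
                task_id=task_id,
                python_callable=run_step,
                op_kwargs={"step": step},
            )
            if previous is not None:
                previous >> task  # chain the tasks sequentially
            previous = task
    return dag
```

Because the task list is derived from whatever 'args' currently contains, every run of the same DAG is displayed with the latest structure, which is the debugging problem described above.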

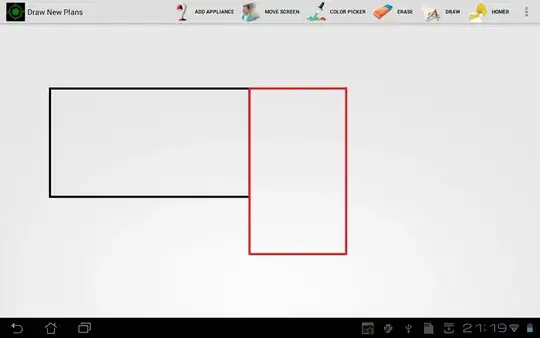

Static tasks defined once and executed in a loop through branching: the limitation of this design is that it loses the graphical representation of a sequential pipeline, and the loop means the graph is no longer "acyclic". The accompanying diagram shows roughly what this looks like.

Since I need both a graphical representation of which operations were performed in which sequence and a correct state for old DAG runs, I am stuck on this use case.

I am not sure whether, or how, task groups or splitting the code across multiple DAGs would help here.

Please help with design options.