I am using Python in Databricks to read a JSON file with the code below:

```python
import json

my_json = ''

with open('/dbfs/mnt/my_mount/my_json.json', 'r') as fp:
    my_json = json.load(fp)
```

But I get the following error:

```
FileNotFoundError: [Errno 2] No such file or directory: '/dbfs/mnt/my_mount/my_json.json'
```
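To check whether the problem is path visibility rather than the JSON content, a minimal diagnostic sketch like the following could be run on the driver. It uses only the Python standard library. The helper name `load_json_checked` is hypothetical and is not a Databricks API. It only reports the deepest part of the path that Python's local file API can see; it does not fix the mount.

```python
import json
import os


def load_json_checked(path):
    """Hypothetical helper: open a JSON file with the local file API and,
    if it is missing, report the deepest path prefix that does exist."""
    if not os.path.exists(path):
        # Walk up the directory tree until we hit a prefix the driver can see.
        visible = path
        while visible and not os.path.exists(visible):
            parent = os.path.dirname(visible)
            if parent == visible:  # reached the filesystem root
                break
            visible = parent
        raise FileNotFoundError(
            f"{path!r} not found by the local file API; "
            f"deepest visible prefix: {visible!r}"
        )
    with open(path, 'r') as fp:
        return json.load(fp)


# Example usage with the path from this question.
try:
    my_json = load_json_checked('/dbfs/mnt/my_mount/my_json.json')
except FileNotFoundError as err:
    print(err)
```

If the reported prefix is `'/'`, then the `/dbfs` path itself is not visible to local file APIs on that cluster.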

However, if I read the file with a Spark DataFrame, I can read the contents of the JSON file. I verified this with the DataFrame's `.show()` method.

```python
df = spark.read.text('/dbfs/mnt/my_mount/my_json.json', wholetext=True)
```

How can I read the file using `with open`?

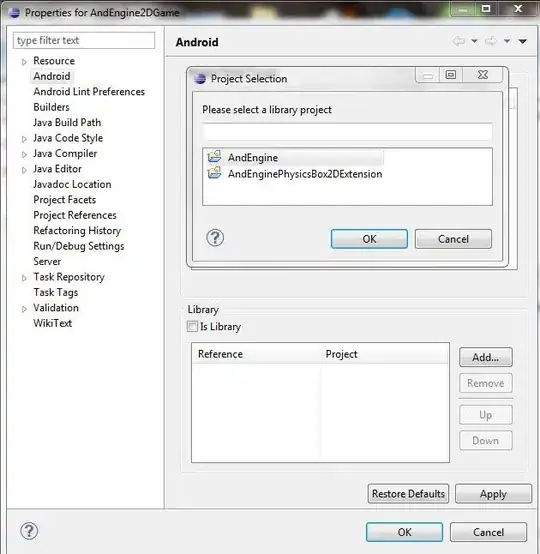

Below is the Databricks Runtime I am using.

Please let me know if you need any further details.