Since `cmn` is a NumPy array, seaborn doesn't know the names of the rows and columns, so it falls back to the default tick labels 0, 1, 2, .... It also helps to make sure that both arrays, `y_pred` and `y_true`, have the same integer type, e.g. `y_true = y_true.astype(int)`.

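Scikit-learn provides the function `unique_labels` (in `sklearn.utils.multiclass`) to fetch the labels it used. They are returned in sorted order, which is also the order of the rows and columns of the confusion matrix.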
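As a quick illustration, here is a minimal sketch of what `unique_labels` returns for two small lists. The values are chosen only for illustration; note that labels appearing in just one of the two inputs are included as well.

```python
from sklearn.utils.multiclass import unique_labels

# Small illustrative inputs; 7 and 2 only occur among the predictions
y_true = [3, 3, 3, 3, 1, 1]
y_pred = [7, 3, 3, 1, 2, 1]

# Sorted union of all labels found in either input
labels = unique_labels(y_true, y_pred)
print(labels)  # [1 2 3 7]
```

These are the values you would pass as `xticklabels` and `yticklabels` to `sns.heatmap`.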

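When a label never occurs in `y_true`, its row in the confusion matrix sums to zero, so normalizing that row computes 0/0. This yields NaN and triggers a runtime warning. You can temporarily suppress that warning via `with np.errstate(invalid='ignore'):`.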
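The effect can be sketched on a tiny hand-made count matrix (the array `counts` is made up for this illustration). The second row is all zeros, so dividing it by its row sum gives NaN, and the `errstate` context keeps NumPy quiet about it.

```python
import numpy as np

# Hypothetical 2x2 count matrix; the second row has no samples
counts = np.array([[1, 1],
                   [0, 0]])
row_sums = counts.sum(axis=1, keepdims=True)

# 0/0 is an "invalid" operation in NumPy's terms, hence invalid='ignore'
with np.errstate(invalid='ignore'):
    normalized = counts / row_sums

print(normalized)
```

The first row becomes `[0.5, 0.5]`, while the empty row is filled with NaN, which seaborn leaves blank in the heatmap.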

For testing, you could create some simple arrays that are easy to count manually, and investigate how `confusion_matrix(y_true, y_pred)` works in that case.

from sklearn.metrics import confusion_matrix

from sklearn.utils.multiclass import unique_labels

from matplotlib import pyplot as plt

import seaborn as sns

import numpy as np

y_true = [3, 3, 3, 3, 1, 1]

y_pred = [7, 3, 3, 1, 2, 1]

# make sure both arrays are of the same type

y_true = np.array(y_true).astype(int)

y_pred = np.array(y_pred).astype(int)

cf_matrix = confusion_matrix(y_true, y_pred)

with np.errstate(invalid='ignore'):

cmn = cf_matrix.astype('float') / cf_matrix.sum(axis=1, keepdims=True)

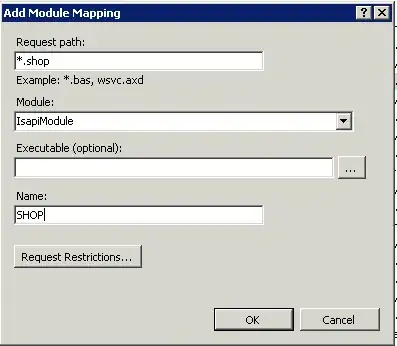

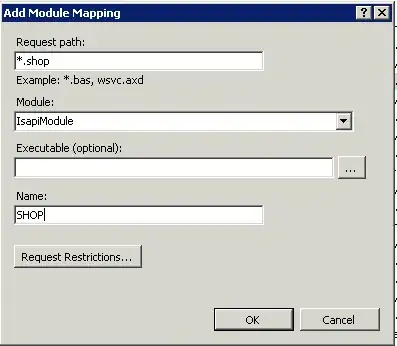

fig, (ax1, ax2) = plt.subplots(ncols=2, figsize=(12, 6))

sns.set()

sns.heatmap(cmn, annot=True, fmt='.1f', annot_kws={"fontsize": 14},

linewidths=2, linecolor='black', clip_on=False, ax=ax1)

ax1.set_title('using default labels', fontsize=16)

labels = unique_labels(y_true, y_pred)

sns.heatmap(cmn, xticklabels=labels, yticklabels=labels,

annot=True, fmt='.1f', annot_kws={"fontsize": 14},

linewidths=2, linecolor='black', clip_on=False, ax=ax2)

ax2.set_title('using the same labels as sk-learn', fontsize=16)

for ax in (ax1, ax2):

ax.tick_params(labelsize=20, rotation=0)

ax.set_xlabel('Predicted value', fontsize=18)

ax.set_ylabel('True value', fontsize=18)

plt.tight_layout()

plt.show()
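To check the counts by hand, a minimal sketch like the following tallies each (true, predicted) pair with a plain loop and compares the result to scikit-learn's output. The names `index` and `manual` are introduced here only for illustration.

```python
import numpy as np
from sklearn.metrics import confusion_matrix
from sklearn.utils.multiclass import unique_labels

y_true = np.array([3, 3, 3, 3, 1, 1])
y_pred = np.array([7, 3, 3, 1, 2, 1])

# Map each label to its row/column position in the matrix
labels = unique_labels(y_true, y_pred)
index = {label: i for i, label in enumerate(labels)}

# Rows are true labels, columns are predicted labels
manual = np.zeros((len(labels), len(labels)), dtype=int)
for t, p in zip(y_true, y_pred):
    manual[index[t], index[p]] += 1

print(manual)
print(np.array_equal(manual, confusion_matrix(y_true, y_pred)))  # True
```

The rows for the labels 2 and 7 stay empty because those values only occur among the predictions, which is exactly where the NaN rows in the normalized matrix come from.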