For built-in algorithms

For built-in algorithms, refer to the official "Define Metrics" guide (section "Using a built-in algorithm for training").

For custom algorithms

The problem can be solved in two steps:

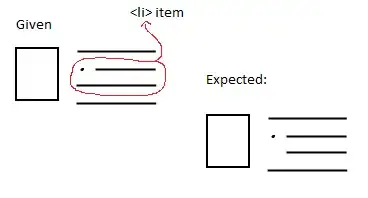

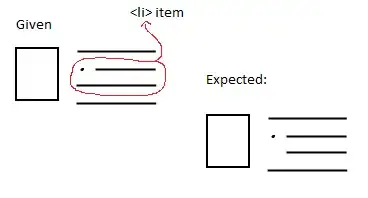

First, within your script (e.g., the training script), log the metric you want to capture.

A simple print or log statement is enough:

```python
# your_metric holds the value computed by your training code
print(f"New best val_loss score: {your_metric}")
```
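As a minimal sketch, assuming a training loop that tracks the best validation loss so far (the report_if_best helper and the loss values are hypothetical), the line can be printed only when the metric improves. Formatting the value as fixed-point (:.6f) is a design choice: it keeps very small losses from printing in scientific notation (e.g. 1e-05), which a digits-and-dots regex would not capture correctly. flush=True is there so the line is not held back in Python's output buffer.

```python
def report_if_best(val_loss: float, best_so_far: float) -> float:
    """Hypothetical helper: print the metric line when val_loss improves
    and return the updated best value."""
    if val_loss < best_so_far:
        # Fixed-point formatting avoids scientific notation in the log line
        print(f"New best val_loss score: {val_loss:.6f}", flush=True)
        return val_loss
    return best_so_far


best = float("inf")
for epoch_loss in [0.91, 0.73, 0.78, 0.65]:  # made-up per-epoch losses
    best = report_if_best(epoch_loss, best)
```

Only the printed line format matters here, because the metric is later extracted from the job logs by a regular expression.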

Then, in the definition of your pipeline component, set the metric_definitions parameter.

For example, the Estimator documentation describes it as follows:

> metric_definitions (list[dict[str, str] or list[dict[str, PipelineVariable]]) – A list of dictionaries that defines the metric(s) used to evaluate the training jobs. Each dictionary contains two keys: ‘Name’ for the name of the metric, and ‘Regex’ for the regular expression used to extract the metric from the logs. This should be defined only for jobs that don’t use an Amazon algorithm.
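The dictionary structure described above can be checked locally before launching a job. The helper below is hypothetical (it is not part of the SageMaker SDK) and assumes plain string values, not PipelineVariable objects. It verifies that each entry has exactly the keys 'Name' and 'Regex' and that the regex compiles with at least one capture group; the capture-group check is an assumption based on the usual pattern of capturing the metric value in a group.

```python
import re


def validate_metric_definitions(metric_definitions):
    """Hypothetical helper: check the documented shape of metric_definitions.

    Assumes every value is a plain string (PipelineVariable is not handled).
    """
    for i, definition in enumerate(metric_definitions):
        if set(definition) != {"Name", "Regex"}:
            raise ValueError(
                f"entry {i}: expected keys 'Name' and 'Regex', got {sorted(definition)}"
            )
        pattern = re.compile(definition["Regex"])  # raises re.error if invalid
        if pattern.groups < 1:
            raise ValueError(f"entry {i}: regex has no capture group for the value")
    return True
```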

For the print example above, it is then enough to define:

```python
metric_definitions=[
    # Raw string so "\." reaches the regex without an invalid-escape warning
    {'Name': 'val_loss', 'Regex': r'New best val_loss score: ([0-9\.]+)'}
]
```
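To see what the regex actually captures, the sketch below approximates the extraction locally on a few made-up log lines. It is an assumption about the behavior, not SageMaker's own parser: each matching line contributes the value of its first capture group, and the last match is the most recent value. The extract_metrics name is hypothetical.

```python
import re

metric_definitions = [
    {"Name": "val_loss", "Regex": r"New best val_loss score: ([0-9\.]+)"},
]


def extract_metrics(log_lines, metric_definitions):
    """Hypothetical local approximation: collect the first capture group of
    every match, per metric name, in log order."""
    series = {d["Name"]: [] for d in metric_definitions}
    for line in log_lines:
        for d in metric_definitions:
            match = re.search(d["Regex"], line)
            if match:
                series[d["Name"]].append(float(match.group(1)))
    return series


# Made-up log lines, as the training script would print them
logs = [
    "epoch 1 done",
    "New best val_loss score: 0.91",
    "New best val_loss score: 0.73",
    "New best val_loss score: 0.65",
]
series = extract_metrics(logs, metric_definitions)
print(series["val_loss"])      # → [0.91, 0.73, 0.65]
print(series["val_loss"][-1])  # → 0.65 (most recent captured value)
```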

P.S.: Remember that "estimators" also includes derived classes such as SKLearn, PyTorch, etc.

Once this is in place, the step where you defined the metrics will show, in SageMaker Studio, a key-value pair with the last captured value of each metric, and CloudWatch Metrics will show a graph you can use to monitor progress, even while training is still running.