I am trying to have events in a DynamoDB table trigger a Lambda function that moves the events into Kinesis Data Firehose. Firehose then batches the records and sends them to an S3 bucket. The Lambda function I am using as the trigger fails.

This is the Lambda code for the trigger:

```
import json

import boto3

# Created once per execution environment and reused across invocations.
firehose_client = boto3.client('firehose')


def lambda_handler(event, context):
    # Build one newline-delimited JSON string from all stream records.
    resultString = ""
    for record in event['Records']:
        parsedRecord = parseRawRecord(record['dynamodb'])
        resultString = resultString + json.dumps(parsedRecord) + "\n"

    print(resultString)

    # Send the whole batch to the delivery stream as a single Firehose record.
    response = firehose_client.put_record(
        DeliveryStreamName="OrdersAuditFirehose",
        Record={
            'Data': resultString
        }
    )


def parseRawRecord(record):
    # Pull the audited attributes out of the DynamoDB-typed NewImage.
    result = {}
    result["orderId"] = record['NewImage']['orderId']['S']
    result["state"] = record['NewImage']['state']['S']
    result["lastUpdatedDate"] = record['NewImage']['lastUpdatedDate']['N']
    return result
```
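For reference, here is a minimal defensive sketch of the same handler. It rests on an assumption that the logs would need to confirm: that the failure is a `KeyError` on `NewImage`. DynamoDB stream records for `REMOVE` events carry no `NewImage`, and neither do records from a stream whose view type excludes new images. The names `parse_raw_record`, `build_payload`, and the optional `firehose_client` argument are hypothetical and not part of the code above. If the failure turns out to be something else, such as missing IAM permissions, this sketch would not fix it.

```python
import json


def parse_raw_record(dynamodb_record):
    """Return the audited fields, or None when the record has no NewImage."""
    new_image = dynamodb_record.get("NewImage")
    if new_image is None:
        # e.g. a REMOVE event, or a stream view type without new images
        return None
    return {
        "orderId": new_image["orderId"]["S"],
        "state": new_image["state"]["S"],
        "lastUpdatedDate": new_image["lastUpdatedDate"]["N"],
    }


def build_payload(event):
    """Join all parseable records into newline-delimited JSON bytes."""
    lines = []
    for record in event.get("Records", []):
        parsed = parse_raw_record(record["dynamodb"])
        if parsed is not None:
            lines.append(json.dumps(parsed))
    return "".join(line + "\n" for line in lines).encode("utf-8")


def lambda_handler(event, context, firehose_client=None):
    payload = build_payload(event)
    if not payload:
        # Nothing to send; skip the Firehose call entirely.
        return None
    if firehose_client is None:
        # Imported lazily so the parsing helpers can be tested without boto3.
        import boto3
        firehose_client = boto3.client("firehose")
    return firehose_client.put_record(
        DeliveryStreamName="OrdersAuditFirehose",
        Record={"Data": payload},
    )
```

Passing a stub client as the third argument lets the handler be exercised locally with a hand-built event; Lambda itself only ever supplies `event` and `context`.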

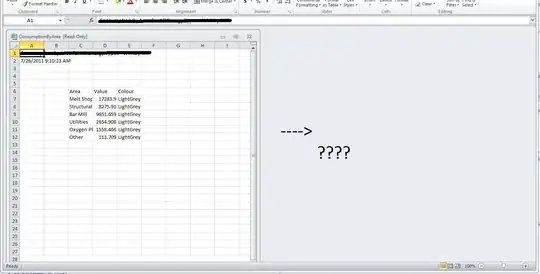

Edit: Cloudwatch Log

The goal is for events in DynamoDB to trigger the Lambda function, which then moves those events into Kinesis Data Firehose.

Edit2: Cloudwatch