I need a linked list that lets me add items at both ends. The list will hold data to be shown in a trend viewer. Because there is a huge amount of data, I need to show some of it before it has been completely read. What I want to do is read a block of data that I know has already been written, while two threads keep filling the two ends of the collection:

I thought of using LinkedList, but the documentation says it does not support this scenario. Is there anything in the Framework that can help me, or will I have to write my own custom list from scratch?

Thanks in advance.

EDIT: The main idea is to do this without locking anything, because the piece of the list I'm reading will not change while the writers write elsewhere. The reader thread only reads one chunk (from A to B) that has already been written. When it finishes, and other chunks have been completely written, the reader reads those chunks while the writers keep adding new data.
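A minimal sketch of that idea, written in Java rather than C# and resting on assumptions that are not in the question: exactly one thread adds at the front, exactly one other thread adds at the back, and the total capacity is known up front (no resizing). `TwoSidedBuffer` and its methods are hypothetical names, not a Framework type. Each writer fills its slot first and then publishes the new bound with a volatile write. A reader that reads the volatile bounds is guaranteed to see every slot inside them, and those slots are never written again, so the reader needs no lock.

```java
import java.util.Arrays;

/**
 * Hypothetical fixed-capacity buffer that grows in both directions from its middle.
 * Assumptions: one thread calls addFirst, one other thread calls addLast, and the
 * capacity is fixed. Readers never lock: they only read slots in the published
 * range [head, tail), which no writer touches again.
 */
final class TwoSidedBuffer {
    private final double[] items;
    // Published bounds: every slot in [head, tail) has been completely written.
    private volatile int head;
    private volatile int tail;

    TwoSidedBuffer(int capacity) {
        items = new double[capacity];
        head = capacity / 2;
        tail = capacity / 2;
    }

    /** Front writer only: write the slot first, then publish it with a volatile write. */
    void addFirst(double value) {
        int h = head;
        if (h == 0) throw new IllegalStateException("front side is full");
        items[h - 1] = value;
        head = h - 1;
    }

    /** Back writer only: same pattern, growing towards the end of the array. */
    void addLast(double value) {
        int t = tail;
        if (t == items.length) throw new IllegalStateException("back side is full");
        items[t] = value;
        tail = t + 1;
    }

    int head() { return head; }
    int tail() { return tail; }

    /** Copies slots [from, to); only allowed if that chunk is already published. */
    double[] readRange(int from, int to) {
        // Reading both volatile bounds makes every slot between them visible here.
        int h = head;
        int t = tail;
        if (from < h || to > t || from > to) {
            throw new IndexOutOfBoundsException("[" + from + ", " + to + ") is not published yet");
        }
        return Arrays.copyOfRange(items, from, to);
    }

    public static void main(String[] args) throws InterruptedException {
        TwoSidedBuffer buffer = new TwoSidedBuffer(2_000);
        // Older samples are prepended, newer samples appended, each by its own thread.
        Thread older = new Thread(() -> { for (int i = 1; i <= 1_000; i++) buffer.addFirst(-i); });
        Thread newer = new Thread(() -> { for (int i = 0; i < 1_000; i++) buffer.addLast(i); });
        older.start();
        newer.start();

        // The reader takes whatever chunk (A to B) is complete right now, without locking.
        int a = buffer.head();
        int b = buffer.tail();
        System.out.println("read " + buffer.readRange(a, b).length + " samples while writing");

        older.join();
        newer.join();
        double[] all = buffer.readRange(buffer.head(), buffer.tail());
        System.out.println(all.length + " samples, first " + all[0] + ", last " + all[all.length - 1]);
    }
}
```

The "no locking" property depends on having a single writer per side; if two threads could add at the same end, that end would need a compare-and-swap or a lock. The fixed capacity is the main simplification: a growable version would have to allocate new chunks instead of a single array. In C#, the analogous publication step would presumably use `Volatile.Write` and `Volatile.Read` on the two bounds, but that translation is not shown here.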

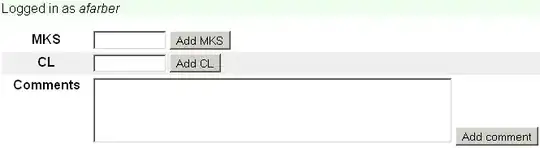

See the updated diagram: