If your Blob trigger is in Data Factory, Logic Apps, or Synapse, you can filter on the `.csv` suffix so that only CSV files fire the trigger, as suggested by @Joel Charan in the comments.

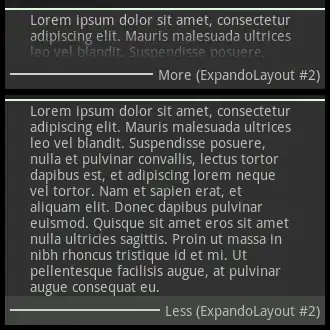

Example in a Data Factory blob trigger:

Spark creates the `_SUCCESS`, `_committed`, and `_started` files by default when it writes output.
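The suffix filter is essentially an "ends with" check on the blob name. The following Python sketch is hypothetical: the function name and the sample blob names are made up for illustration, and it does not call any Azure API. It shows which files in a typical Spark output folder would pass a `.csv` suffix filter:

```python
def matches_suffix(blob_name: str, suffix: str = ".csv") -> bool:
    """Return True if the blob name passes an 'ends with' suffix filter (case-sensitive)."""
    return blob_name.endswith(suffix)


# Hypothetical listing of a Spark output folder (names are illustrative only)
blobs = [
    "folder1/_SUCCESS",
    "folder1/_committed_1234",
    "folder1/_started_1234",
    "folder1/part-00000-example.csv",
]

# Only the CSV part file would fire a trigger filtered on ".csv"
triggering = [b for b in blobs if matches_suffix(b)]
print(triggering)  # ['folder1/part-00000-example.csv']
```

The marker files have no `.csv` extension, so they are skipped and the trigger fires only for the data file.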

If you want to avoid these files and store only a single CSV file, another option is to convert the PySpark DataFrame into a pandas DataFrame and write it as a single CSV file to the mounted storage path.

The following code generates a dynamic CSV file name from the current date and time. The code for getting the current date as a string is taken from this answer by stack0114106.

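```python
# Assumes a Databricks notebook where `spark` (the SparkSession) and `df`
# (the PySpark DataFrame to export) already exist, and the storage
# container is mounted under /mnt/data.

# Timestamp format for the file name, e.g. 20230415_0930
dateFormat = "%Y%m%d_%H%M"

# Ask Spark for the current timestamp; collect() returns it as a Python datetime
ts = spark.sql("select current_timestamp() as ctime").collect()[0]["ctime"]
sub_fname = ts.strftime(dateFormat)

# /dbfs/... is the driver's local-file view of DBFS, so pandas can write to it
filename = "/dbfs/mnt/data/folder1/part-" + sub_fname + ".csv"
print(filename)

# toPandas() collects every row to the driver, so the data must fit in its memory
pandas_converted = df.toPandas()

# index=False stops pandas from writing its row index as an extra column
pandas_converted.to_csv(filename, index=False)
```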
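If you would rather not run a Spark query just to get the timestamp, the same file name can be built with Python's standard `datetime` module. This is a minimal sketch: `build_csv_path` and its parameters are hypothetical (not from the original answer), and `/dbfs/mnt/data/folder1` is only the example mount path used above.

```python
from datetime import datetime
from pathlib import PurePosixPath


def build_csv_path(base_dir: str, ts: datetime, date_format: str = "%Y%m%d_%H%M") -> str:
    """Build a timestamped path like <base_dir>/part-YYYYMMDD_HHMM.csv (hypothetical helper)."""
    return str(PurePosixPath(base_dir) / f"part-{ts.strftime(date_format)}.csv")


# A fixed timestamp keeps the result reproducible; in practice pass datetime.now()
path = build_csv_path("/dbfs/mnt/data/folder1", datetime(2023, 4, 15, 9, 30))
print(path)  # /dbfs/mnt/data/folder1/part-20230415_0930.csv
```

Joining with `PurePosixPath` avoids a doubled or missing slash when `base_dir` does or does not end with `/`.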

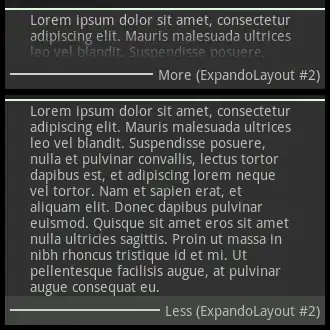

Single CSV file in Blob storage: