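Running the following code

```python
from torchvision import models

# torchvision >= 0.13 prefers weights=models.DenseNet121_Weights.DEFAULT over pretrained=True
dnet121 = models.densenet121(pretrained=True)

print(dnet121)
```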

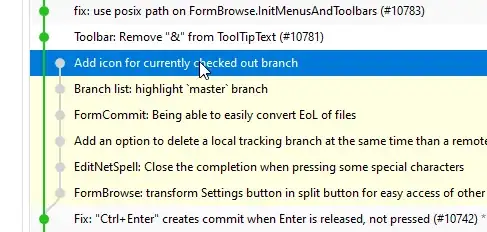

yields a DenseNet121 description which ends as follows :

Based on this, I would appreciate your help with the following:

According to the PyTorch BatchNorm2d documentation (https://pytorch.org/docs/stable/generated/torch.nn.BatchNorm2d.html), the BatchNorm2d layer accepts a 4D tensor (here with C = 1024 channels) and outputs a 4D tensor of the same shape. As the image above shows, the next layer is a Linear layer with 1024 `in_features`. How is the 4D output converted into that 1024-dimensional input? Is it due to a Global Average Pooling layer?

According to the official DenseNet paper (https://arxiv.org/pdf/1608.06993.pdf), the classifier does start with a Global Average Pooling (GAP) layer. Why can't we see it here?

For a particular task, I would like to replace the GAP layer with an `nn.Flatten` layer. How can I access the GAP layer in code, though?