I am trying to understand the Spark UI and the HDFS UI while using PySpark. These are the properties of the session I am running:

pyspark --master yarn --num-executors 4 --executor-memory 6G --executor-cores 3 --conf spark.dynamicAllocation.enabled=false --conf spark.exector.memoryOverhead=2G --conf spark.memory.offHeap.size=2G --conf spark.pyspark.memory=2G
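As a rough reference for reading the UI numbers, the heap available for caching under these settings can be estimated as below. This is only a sketch. It assumes Spark's defaults of 300 MiB reserved heap, `spark.memory.fraction = 0.6` and `spark.memory.storageFraction = 0.5`, none of which the command overrides. It also ignores off-heap memory, because `spark.memory.offHeap.enabled` is not set in the command. The helper names are made up for illustration.

```python
# Rough cache-budget arithmetic for the session above.
# Assumed Spark defaults (not set in the command): 300 MiB reserved heap,
# spark.memory.fraction = 0.6, spark.memory.storageFraction = 0.5.
RESERVED_MIB = 300
MEMORY_FRACTION = 0.6
STORAGE_FRACTION = 0.5


def unified_memory_mib(executor_heap_mib: int) -> float:
    """Heap shared by execution and storage on one executor (upper bound)."""
    return (executor_heap_mib - RESERVED_MIB) * MEMORY_FRACTION


def protected_storage_mib(executor_heap_mib: int) -> float:
    """Share of unified memory where cached blocks are safe from eviction by execution."""
    return unified_memory_mib(executor_heap_mib) * STORAGE_FRACTION


num_executors = 4             # --num-executors 4
executor_heap_mib = 6 * 1024  # --executor-memory 6G

per_executor = unified_memory_mib(executor_heap_mib)
cluster_total = per_executor * num_executors

print(f"unified memory per executor:    {per_executor:.0f} MiB")
print(f"unified memory, all executors:  {cluster_total / 1024:.1f} GiB")
print(f"protected storage per executor: {protected_storage_mib(executor_heap_mib):.0f} MiB")
```

The figure shown in the Executors tab is usually somewhat lower than this estimate, since the JVM reports slightly less usable heap than the configured 6G.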

I ran some simple code that reads the same file (~9 GB on disk) twice, joins the two DataFrames, persists the result, and runs a count action.

#Reading the same file twice

df_sales = spark.read.format("parquet").load("gs://monsoon-credittech.appspot.com/spark_datasets/sales_parquet")

df_sales_copy = spark.read.format("parquet").load("gs://monsoon-credittech.appspot.com/spark_datasets/sales_parquet")

#caching one

from pyspark import StorageLevel

df_sales = df_sales.persist(StorageLevel.MEMORY_AND_DISK)

#merging the two read files

df_merged = df_sales.join(df_sales_copy,df_sales.order_id==df_sales_copy.order_id,'inner')

df_merged = df_merged.persist(StorageLevel.MEMORY_AND_DISK)

#calling an action to trigger the transformations

df_merged.count()

I expect:

- The data to be persisted in memory first and then spilled to disk

- HDFS capacity to be used at least to the extent that the persisted data spilled to disk

Neither expectation is met in the monitoring output that follows:

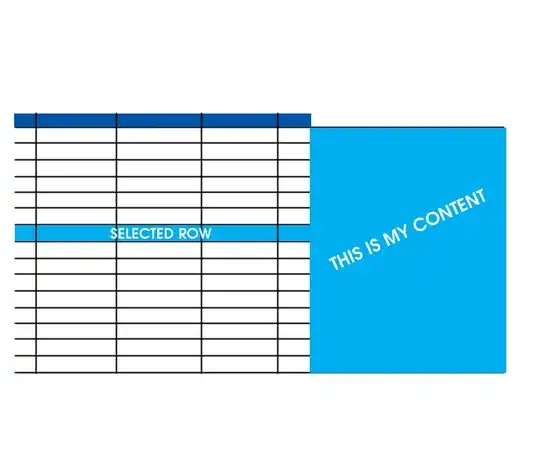

Expectation 1: Failed. The data appears to be persisted on disk first and then, maybe, in memory. I'm not sure. The image should help. It is definitely not in memory first, unless I'm missing something.

Expectation 2: Failed. HDFS capacity is barely used at all (only 1.97 GB).

Can you please help me reconcile my understanding? Where am I wrong in expecting this behaviour, and what am I actually looking at in those images?