> Is it right to have the query API (read) service & the event-complete-handler (write) operate on the same database with both dependent on the DB schema? Or is it better to have the query-api read from the replica DB?

"Right" is a loaded term. The idea behind CQRS is to separate commands from queries so that your system can be distributed and scaled out. In an SOA/microservice architecture, the command side and the query side would typically use different databases: one service processes the command and publishes an event to the service bus, and query handlers listen for that event and update their own data for querying.

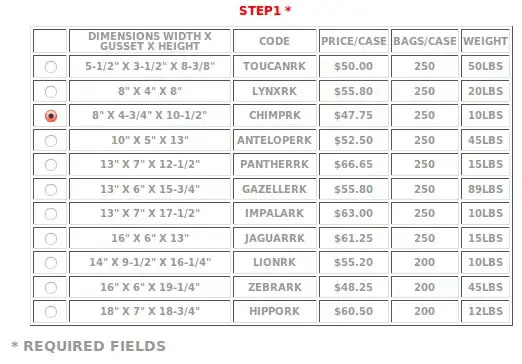

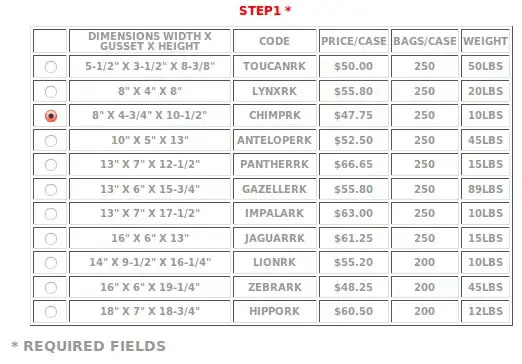

For example:

A service that processes the CreateWidgetCommand would publish an event onto the bus containing the properties of the command.

Any query services interested in widgets for building their data views would subscribe to this event type.

When the event is published, the subscribed query handlers consume it and update their respective databases.

When a query is invoked, the query service interrogates its own database.

This means you could, in theory, make the command handler as simple as throwing the event onto the bus.
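The widget example above can be sketched in a few lines. This is a minimal, in-process illustration under stated assumptions: `InMemoryBus` is a hypothetical stand-in for a real service bus such as Kafka, the `WidgetCreated` event and both view classes are invented for illustration, and plain dicts stand in for each query service's own database. It shows the command handler doing nothing but publishing, and two query services building differently shaped views from the same event.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Any, Callable, DefaultDict, Dict, List


@dataclass(frozen=True)
class CreateWidgetCommand:
    widget_id: str
    name: str
    color: str


@dataclass(frozen=True)
class WidgetCreated:
    # Hypothetical event: simply carries the properties of the command.
    widget_id: str
    name: str
    color: str


class InMemoryBus:
    """Hypothetical stand-in for a real service bus such as Kafka."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[type, List[Callable[[Any], None]]] = defaultdict(list)

    def subscribe(self, event_type: type, handler: Callable[[Any], None]) -> None:
        self._subscribers[event_type].append(handler)

    def publish(self, event: Any) -> None:
        # Synchronous delivery for the sketch; a real bus delivers asynchronously.
        for handler in self._subscribers[type(event)]:
            handler(event)


def handle_create_widget(bus: InMemoryBus, cmd: CreateWidgetCommand) -> None:
    # Command side: no database access, just put the event on the bus.
    bus.publish(WidgetCreated(cmd.widget_id, cmd.name, cmd.color))


class WidgetNamesView:
    """Query service 1: owns its store (a dict standing in for its database)."""

    def __init__(self, bus: InMemoryBus) -> None:
        self._db: Dict[str, str] = {}
        bus.subscribe(WidgetCreated, self._on_created)

    def _on_created(self, event: WidgetCreated) -> None:
        self._db[event.widget_id] = event.name

    def name_of(self, widget_id: str) -> str:
        return self._db[widget_id]


class WidgetsByColorView:
    """Query service 2: a differently shaped view built from the same event."""

    def __init__(self, bus: InMemoryBus) -> None:
        self._db: DefaultDict[str, List[str]] = defaultdict(list)
        bus.subscribe(WidgetCreated, self._on_created)

    def _on_created(self, event: WidgetCreated) -> None:
        self._db[event.color].append(event.widget_id)

    def ids_with_color(self, color: str) -> List[str]:
        return list(self._db[color])


bus = InMemoryBus()
names = WidgetNamesView(bus)
by_color = WidgetsByColorView(bus)
handle_create_widget(bus, CreateWidgetCommand("w1", "Sprocket", "red"))
print(names.name_of("w1"))             # Sprocket
print(by_color.ids_with_color("red"))  # ['w1']
```

Neither view reads the other's store or any command-side table; each depends only on the shape of the `WidgetCreated` event.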

> The core-business-logic, at the end of computation, writes only to database and not to db+Kafka in a single transaction. Persisting to the database is handled by the event-complete-handler. Is this approach better?

No. If your question is about transactionality in distributed systems, you cannot rely on traditional transactions, since a single command may affect any number of distributed data stores. Distributed systems often handle this with compensating transactions: you write explicit steps that reverse the mutations made when the bus messages were consumed.
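A compensating transaction can be sketched as a list of steps, each paired with an action that undoes it; if a later step fails, the completed steps are undone in reverse order. This is an in-process sketch under assumptions: the `Step` type, the dict/list "stores", and the simulated payment failure are all hypothetical. In a real distributed system each action and compensation would typically be triggered by consuming or publishing bus messages, and compensations would need to be idempotent because messages can be redelivered.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Step:
    name: str
    action: Callable[[], None]
    compensate: Callable[[], None]  # reverses the mutation made by `action`


def run_with_compensation(steps: List[Step]) -> bool:
    """Run steps in order; on failure, undo completed steps in reverse order."""
    completed: List[Step] = []
    for step in steps:
        try:
            step.action()
        except Exception:
            for done in reversed(completed):
                done.compensate()
            return False
        completed.append(step)
    return True


# Hypothetical, independent data stores that would belong to different services.
inventory = {"widget": 5}
orders: List[str] = []
log: List[str] = []


def reserve_stock() -> None:
    inventory["widget"] -= 1
    log.append("reserved")


def release_stock() -> None:
    inventory["widget"] += 1
    log.append("released")


def record_order() -> None:
    orders.append("order-1")
    log.append("ordered")


def delete_order() -> None:
    orders.remove("order-1")
    log.append("order deleted")


def charge_card() -> None:
    raise RuntimeError("payment service declined")  # simulated failure


def refund_card() -> None:
    log.append("refunded")


ok = run_with_compensation([
    Step("reserve", reserve_stock, release_stock),
    Step("order", record_order, delete_order),
    Step("charge", charge_card, refund_card),
])
print(ok, inventory, orders, log)
# False {'widget': 5} [] ['reserved', 'ordered', 'order deleted', 'released']
```

The failed charge never completed, so its own compensation (`refund_card`) is not run; only the two earlier steps are reversed.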

> Say in the future, if the core-business-logic needs to query the database to do the computation on every event, can it directly read from the database? Again, does it not create DB schema dependency between the services?

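If you follow the advice in the first answer, the approach here should be clear. Each distinct query is served from its own database, which is kept "eventually consistent" by consuming events from the bus. The core-business-logic would likewise keep its own copy of the data it needs instead of reading another service's database, so the services share an event contract rather than a DB schema.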
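As a sketch of what this might look like for the core-business-logic, assuming a hypothetical `PriceChanged` event published by some other service, the service keeps a private projection of only the fields it needs. A `deque` stands in for its bus subscription and a dict for its own database. The example also shows the "eventually consistent" part: between an event being delivered and being consumed, the computation sees the old value.

```python
from collections import deque
from dataclasses import dataclass
from typing import Deque, Dict


@dataclass(frozen=True)
class PriceChanged:
    # Hypothetical event published by another service.
    sku: str
    price_cents: int


class CoreBusinessLogic:
    """Keeps a private copy of only the data it needs.

    It never reads the other service's tables, so it depends on the event
    contract rather than on that service's DB schema.
    """

    def __init__(self) -> None:
        self._inbox: Deque[PriceChanged] = deque()  # stands in for a bus subscription
        self._prices: Dict[str, int] = {}           # stands in for this service's own DB

    def deliver(self, event: PriceChanged) -> None:
        self._inbox.append(event)

    def consume_pending(self) -> None:
        while self._inbox:
            event = self._inbox.popleft()
            self._prices[event.sku] = event.price_cents

    def order_total(self, sku: str, qty: int) -> int:
        # The computation reads only the local projection.
        return self._prices[sku] * qty


svc = CoreBusinessLogic()
svc.deliver(PriceChanged("abc", 250))
svc.consume_pending()
before = svc.order_total("abc", 2)  # 500
svc.deliver(PriceChanged("abc", 300))
stale = svc.order_total("abc", 2)   # still 500: delivered but not yet consumed
svc.consume_pending()
after = svc.order_total("abc", 2)   # 600
```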

These architectures typically carry significant complexity, especially if you are concerned with consistency and transactionality.

People don't generally implement this type of architecture unless there is a specific need.

You can, however, design your code around CQRS and DDD so that transitioning to this type of architecture in the future is relatively painless.
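One way this might look, sketched under assumptions (all class names here are hypothetical): commands and queries live in separate classes even inside a single process, and the command handler depends only on an `EventPublisher` protocol. Today an in-process publisher calls the query side directly; later it could be replaced by one that writes to a real bus without touching the handler or the queries.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Protocol


@dataclass(frozen=True)
class RenameWidget:   # a command: expresses intent, returns nothing
    widget_id: str
    new_name: str


@dataclass(frozen=True)
class WidgetRenamed:  # an event: records what happened
    widget_id: str
    new_name: str


class EventPublisher(Protocol):
    def publish(self, event: object) -> None: ...


class InProcessPublisher:
    """Today: call subscribers directly. Later: swap for a bus-backed publisher."""

    def __init__(self) -> None:
        self._handlers: List[Callable[[object], None]] = []

    def subscribe(self, handler: Callable[[object], None]) -> None:
        self._handlers.append(handler)

    def publish(self, event: object) -> None:
        for handler in self._handlers:
            handler(event)


class RenameWidgetHandler:
    """Command side: touches only the write model and the publisher."""

    def __init__(self, write_store: Dict[str, str], publisher: EventPublisher) -> None:
        self._store = write_store
        self._publisher = publisher

    def handle(self, cmd: RenameWidget) -> None:
        if cmd.widget_id not in self._store:
            raise KeyError(cmd.widget_id)
        self._store[cmd.widget_id] = cmd.new_name
        self._publisher.publish(WidgetRenamed(cmd.widget_id, cmd.new_name))


class WidgetQueries:
    """Query side: reads only its own read model, updated from events."""

    def __init__(self) -> None:
        self._read_model: Dict[str, str] = {}

    def on_event(self, event: object) -> None:
        if isinstance(event, WidgetRenamed):
            self._read_model[event.widget_id] = event.new_name

    def display_name(self, widget_id: str) -> str:
        return self._read_model.get(widget_id, "<unknown>")


write_store = {"w1": "Sprocket"}
publisher = InProcessPublisher()
queries = WidgetQueries()
publisher.subscribe(queries.on_event)
handler = RenameWidgetHandler(write_store, publisher)
handler.handle(RenameWidget("w1", "Cog"))
print(queries.display_name("w1"))  # Cog
```

Because the only coupling between the two sides is the `WidgetRenamed` event, moving `WidgetQueries` into its own service later is mostly a matter of changing how events are delivered.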

The topic of DDD is too dense for this answer. I encourage you to do some independent learning.