There are many techniques for improving results besides changing the model:

- Increase the amount of data, so the model sees more varied and representative inputs.

- Use feature-extraction functions and data preprocessing, such as MFCCs or Fourier transforms, and transform the input data (blurring, rotation, flipping, padding, zooming, or random noise).

- Create multiple data sets and train with K-fold cross-validation.

- Select data randomly, save and reload the model between runs, and use callbacks to adjust training parameters based on performance.

- Compare different models, or concatenate models.
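Several of these techniques can be tried without touching the model at all. Below is a minimal NumPy sketch of data augmentation (flip, rotation, padding with a random shift, additive noise) and of random K-fold splitting. It assumes (H, W, C) uint8 images like the 32x32 RGB inputs in the sample. The helpers `augment` and `k_fold_indices`, the 4-pixel padding, and the noise level are hypothetical choices for illustration and are not part of the original template. In a real Keras pipeline you would more likely use the built-in preprocessing layers, or scikit-learn's `KFold`.

```python
import numpy as np


def augment(image, rng):
    """Return a randomly transformed copy of an (H, W, C) uint8 image."""
    out = image.astype(np.float32)
    if rng.random() < 0.5:
        out = out[:, ::-1, :]  # horizontal flip
    # Any multiple of 90 degrees keeps a square image's shape; otherwise only 0 or 180
    if image.shape[0] == image.shape[1]:
        k = int(rng.integers(0, 4))
    else:
        k = 2 * int(rng.integers(0, 2))
    out = np.rot90(out, k=k)
    # Reflect-pad by `pad` pixels, then crop back to H x W at a random offset (a small shift)
    pad = 4
    out = np.pad(out, ((pad, pad), (pad, pad), (0, 0)), mode="reflect")
    top, left = (int(v) for v in rng.integers(0, 2 * pad + 1, size=2))
    out = out[top:top + image.shape[0], left:left + image.shape[1], :]
    out = out + rng.normal(0.0, 5.0, size=out.shape)  # additive Gaussian noise
    return np.clip(out, 0, 255).astype(np.uint8)


def k_fold_indices(n_samples, k, rng):
    """Yield (train_idx, val_idx) index arrays for K-fold cross-validation."""
    order = rng.permutation(n_samples)  # random data selection
    folds = np.array_split(order, k)
    for i in range(k):
        train_idx = np.concatenate([folds[j] for j in range(k) if j != i])
        yield train_idx, folds[i]


rng = np.random.default_rng(0)  # seeded so runs are repeatable
# Random stand-in for the 54 resized 32x32 RGB images of the sample
images = rng.integers(0, 256, size=(54, 32, 32, 3)).astype(np.uint8)
augmented = np.stack([augment(img, rng) for img in images])
print(augmented.shape)  # (54, 32, 32, 3)

for fold, (train_idx, val_idx) in enumerate(k_fold_indices(len(images), 5, rng)):
    # Train a fresh model on images[train_idx] and evaluate it on images[val_idx] here
    print(fold, len(train_idx), len(val_idx))
```

Each fold holds out a different, non-overlapping slice of the shuffled indices, so every sample is used for validation exactly once.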

Sample: my image-recognition template, useful as a starting point when trying out functions and data conversions for a solution.

import os
from os.path import exists

import tensorflow as tf
import pandas as pd
import matplotlib.pyplot as plt

# -------------------------------------------------------
# Printed output of the GPU check below:
# [PhysicalDevice(name='/physical_device:GPU:0', device_type='GPU')]
# None
# -------------------------------------------------------
physical_devices = tf.config.experimental.list_physical_devices('GPU')
assert len(physical_devices) > 0, "Not enough GPU hardware devices available"
# set_memory_growth() returns None, which is the "None" printed above
config = tf.config.experimental.set_memory_growth(physical_devices[0], True)
print(physical_devices)
print(config)

# -------------------------------------------------------
# : Variables
# -------------------------------------------------------

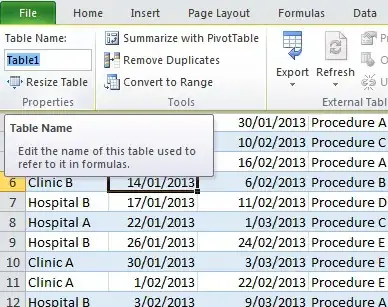

variables = pd.read_excel('F:\\temp\\Python\\excel\\Book 13 (2) (3).xlsx', index_col=None, header=[0])

list_label = []
list_Image = []
list_file_actual = []
# Names for the 10 output units: units 0-4 -> 'Candidt Kibt', units 5-9 -> 'Pikaploy'
list_label_actual = ['Candidt Kibt', 'Candidt Kibt', 'Candidt Kibt', 'Candidt Kibt', 'Candidt Kibt',
                     'Pikaploy', 'Pikaploy', 'Pikaploy', 'Pikaploy', 'Pikaploy']

# Each row of the sheet: index, image file path, label
for Index, Image, Label in variables.values:
    print(Label)
    # list_label.append(Label)
    image = tf.io.read_file(Image)
    # channels=3 and expand_animations=False always give a 3-D (H, W, 3) tensor
    image = tf.io.decode_image(image, channels=3, expand_animations=False)
    list_file_actual.append(image)  # full-size image, kept for the plot
    image = tf.image.resize(image, [32, 32], method='nearest')
    list_Image.append(image)

    # Label 0 -> output unit 0, any other label -> output unit 9
    if Label == 0:
        list_label.append(0)
    else:
        list_label.append(9)

    # if Label == 0:
    #     list_label_actual.append('Candidt Kibt')
    # else:
    #     list_label_actual.append('Pikaploy')

# One image per dataset element: labels (N, 1, 1), images (N, 1, 32, 32, 3); N = 54 in my sheet
list_label = tf.reshape(tf.cast(list_label, dtype=tf.int32), (-1, 1, 1))
list_Image = tf.reshape(tf.cast(list_Image, dtype=tf.float32), (-1, 1, 32, 32, 3))

# print(list_label_actual)
# print(list_label)

# Checkpoint and log files are stored in F:\models\checkpoint\<script name>
script_name = os.path.basename(__file__).split('.')[0]
checkpoint_path = "F:\\models\\checkpoint\\" + script_name + "\\TF_DataSets_01.h5"
checkpoint_dir = os.path.dirname(checkpoint_path)
loggings = "F:\\models\\checkpoint\\" + script_name + "\\loggings.log"

if not exists(checkpoint_dir):
    # makedirs also creates missing parent folders, where os.mkdir would fail
    os.makedirs(checkpoint_dir)
    print("Create directory: " + checkpoint_dir)

log_dir = checkpoint_dir

# -------------------------------------------------------
# : DataSet
# -------------------------------------------------------
dataset = tf.data.Dataset.from_tensor_slices((list_Image, list_label))

# Drop the extra batch axis, giving (N, 32, 32, 3), for the prediction loop at the end
list_Image = tf.reshape(list_Image, (-1, 32, 32, 3)).numpy()

print("===========================================")
print("type of variables: ")
print(type(variables))
print(variables)
print("variables.values: ")
print(variables.values)

# -------------------------------------------------------
# : Model Initialize
# -------------------------------------------------------

model = tf.keras.models.Sequential([
    tf.keras.layers.InputLayer(input_shape=(32, 32, 3)),
    # Two Normalization layers with fixed mean/variance values (not adapted to the data)
    tf.keras.layers.Normalization(mean=3., variance=2.),
    tf.keras.layers.Normalization(mean=4., variance=6.),
    tf.keras.layers.Conv2D(32, (3, 3), activation='relu'),   # -> (30, 30, 32)
    tf.keras.layers.MaxPooling2D((2, 2)),                     # -> (15, 15, 32)
    tf.keras.layers.Dense(512, activation='relu'),            # -> (15, 15, 512)
    tf.keras.layers.Dropout(0.3),
    tf.keras.layers.Reshape((512, 225)),                      # 15 * 15 * 512 = 512 * 225 values
    tf.keras.layers.Bidirectional(tf.keras.layers.LSTM(96, return_sequences=True, return_state=False)),  # -> (512, 192)
    tf.keras.layers.Bidirectional(tf.keras.layers.LSTM(96)),  # -> (192,)
    tf.keras.layers.Flatten(),
    tf.keras.layers.Dense(192, activation='relu'),
    tf.keras.layers.Dense(10),  # no activation: the 10 outputs are raw logits
])

# -------------------------------------------------------
# : FileWriter
# -------------------------------------------------------

if exists(checkpoint_path):
    model.load_weights(checkpoint_path)
    print("model load: " + checkpoint_path)
    input("Press Any Key!")

# -------------------------------------------------------
# : Callback
# -------------------------------------------------------

class CustomCallback(tf.keras.callbacks.Callback):
    # Stop training once the training accuracy reaches 97 %
    def on_epoch_end(self, epoch, logs=None):
        logs = logs or {}
        if logs.get('accuracy', 0) >= 0.97:
            self.model.stop_training = True

custom_callback = CustomCallback()

# -------------------------------------------------------
# : Optimizer
# -------------------------------------------------------

optimizer = tf.keras.optimizers.Nadam(
    learning_rate=0.000001, beta_1=0.9, beta_2=0.999, epsilon=1e-07,
    name='Nadam'
)

# -------------------------------------------------------
# : Loss Fn
# -------------------------------------------------------

# The last Dense(10) layer has no softmax, so the model outputs logits: from_logits must be True
lossfn = tf.keras.losses.SparseCategoricalCrossentropy(
    from_logits=True,
    reduction=tf.keras.losses.Reduction.AUTO,
    name='sparse_categorical_crossentropy'
)

# -------------------------------------------------------
# : Model Summary
# -------------------------------------------------------

model.compile(optimizer=optimizer, loss=lossfn, metrics=['accuracy'])
model.summary()

# -------------------------------------------------------
# : Training
# -------------------------------------------------------
# Each dataset element is already a batch of one image, so batch_size is not passed to fit()
history = model.fit(dataset, epochs=10000, callbacks=[custom_callback])

# Save after training; saving before fit() only stores the untrained (or just-loaded) weights
model.save_weights(checkpoint_path)

plt.figure(figsize=(6, 6))
plt.suptitle("Actor recognition")

for i in range(30):
    # Same pixel range as the training data, plus a batch axis: (1, 32, 32, 3)
    img_array = tf.expand_dims(tf.cast(list_Image[i], tf.float32), 0)
    predictions = model.predict(img_array, verbose=0)
    score = tf.nn.softmax(predictions[0])  # logits -> probabilities
    best = int(tf.math.argmax(score))

    plt.subplot(6, 6, i + 1)
    plt.xticks([])
    plt.yticks([])
    plt.grid(False)
    plt.imshow(list_file_actual[i])  # original, full-size image
    plt.xlabel(str(round(float(score[best]), 2)) + ":" + list_label_actual[best])

plt.show()
input('...')

Output: the people in the images are Thai actors; the images were collected from broadcasts on the Internet.
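The `Reshape((512, 225))` layer in the template only works because it keeps the number of values produced by the convolutional part. Below is a quick pure-Python check of that shape arithmetic. It assumes the Keras defaults of stride 1 and `'valid'` padding for `Conv2D`, a stride equal to the pool size for `MaxPooling2D`, and concatenation as the `Bidirectional` merge mode. `conv2d_valid` and `max_pool` are hypothetical helpers, not Keras functions.

```python
def conv2d_valid(size, kernel):
    """Output side length of a stride-1 convolution with 'valid' padding."""
    return size - kernel + 1


def max_pool(size, pool):
    """Output side length of max pooling with stride equal to the pool size."""
    return size // pool


side = conv2d_valid(32, 3)        # Conv2D(32, (3, 3)): 32 -> 30
side = max_pool(side, 2)          # MaxPooling2D((2, 2)): 30 -> 15
dense_units = 512                 # Dense(512) acts on the last axis: (15, 15, 512)
elements = side * side * dense_units

timesteps, features = 512, 225    # Reshape((512, 225))
assert elements == timesteps * features, "Reshape target must keep the element count"

lstm_units = 96
bilstm_out = 2 * lstm_units       # forward and backward outputs are concatenated

print(side, elements, bilstm_out)  # 15 115200 192
```

The reshape reinterprets the flattened (15, 15, 512) map as 512 steps of 225 values each, so a single LSTM step sees a slice that mixes spatial positions and channels.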

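The end of the script converts the ten raw outputs of `Dense(10)` into a confidence and a name using `tf.nn.softmax` and `argmax`. The sketch below does the same step in NumPy with made-up logits for a single image. It also computes the cross-entropy that a sparse categorical loss derives from raw logits: softmax first, then the negative log-probability of the true class. `softmax` and `sparse_ce_from_logits` are hypothetical helpers for illustration.

```python
import numpy as np

list_label_actual = ['Candidt Kibt'] * 5 + ['Pikaploy'] * 5  # same 10 names as in the sample


def softmax(logits):
    """Numerically stable softmax over the last axis."""
    shifted = logits - logits.max(axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)


def sparse_ce_from_logits(logits, label):
    """Sparse categorical cross-entropy for one sample, computed from raw logits."""
    return float(-np.log(softmax(logits)[label]))


# Hypothetical raw outputs of the final Dense(10) layer for one image
logits = np.array([2.0, 0.1, -1.0, 0.0, 0.3, -0.5, 0.2, 0.0, -2.0, 1.5])
probs = softmax(logits)
best = int(np.argmax(probs))
print(str(round(float(probs[best]), 2)) + ":" + list_label_actual[best])

# Loss is lower for the class the logits already favour (unit 0) than for unit 9
print(sparse_ce_from_logits(logits, 0), sparse_ce_from_logits(logits, 9))
```

Because label 0 maps to unit 0 and every other label to unit 9, the ten-entry `list_label_actual` list lets `argmax` index a name directly, even though only two of the ten units are ever used as training targets.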