I'm trying to locate various UI elements in screenshots of a video game using OpenCV template matching. These UI elements often contain varying graphics, text, and icons, so matching a fixed, generic template does not work. My plan was therefore to use templates with transparency: I make the non-static parts of the UI transparent so that they are ignored during template matching. My script works in most cases, except when there are many black pixels on screen.

I've already looked at both "How do I find an image on screen ignoring transparent pixels" and "How to template match a simple 2D shape in OpenCV?".

Here is a sample screenshot of the game view.

Here are some of the transparent templates I'm using to identify regions of UI elements:

All of these templates work with the following script, except when there is a large number of black pixels on screen:

    ''' mostly based on: https://stackoverflow.com/questions/71302061/how-do-i-find-an-image-on-screen-ignoring-transparent-pixels/71302306#71302306'''

    import cv2
    import time
    import math

    # read game image
    img = cv2.imread('base.png')

    # read image template
    template = cv2.imread('minimap.png', cv2.IMREAD_UNCHANGED)
    hh, ww = template.shape[:2]

    # extract base image and alpha channel and make alpha 3 channels
    base = template[:,:,0:3]
    alpha = template[:,:,3]
    alpha = cv2.merge([alpha,alpha,alpha])

    # do masked template matching and save correlation image
    correlation = cv2.matchTemplate(img, base, cv2.TM_CCORR_NORMED, mask=alpha)

    # set threshold and get all matches
    threshold = 0.90

    ''' from: https://stackoverflow.com/questions/61779288/how-to-template-match-a-simple-2d-shape-in-opencv/61780200#61780200 '''

    # search for max score
    result = img.copy()
    max_val = 1
    rad = int(math.sqrt(hh*hh+ww*ww)/4)

    # find max value of correlation image
    min_val, max_val, min_loc, max_loc = cv2.minMaxLoc(correlation)
    print(max_val, max_loc)

    if max_val > threshold:
        # draw match on copy of input
        cv2.rectangle(result, max_loc, (max_loc[0]+ww, max_loc[1]+hh), (0,0,255), 1)

        # save results
        cv2.imwrite('match.jpg', result)
        cv2.imshow('result', result)
        cv2.waitKey(0)
    else:
        print("No match found")
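One check that might help narrow this down is whether the correlation image contains non-finite scores (`inf` or `NaN`), since those could win the max search without being real matches. I have not confirmed that this is what happens here. The sketch below uses only NumPy; `best_finite_match` and the small `demo` array are hypothetical names for illustration, and the array stands in for the output of `cv2.matchTemplate`.

```python
import numpy as np

def best_finite_match(correlation, threshold=0.90):
    """Return (score, (x, y)) for the best finite score, or None if no score passes."""
    scores = np.asarray(correlation, dtype=np.float64)
    finite = np.isfinite(scores)
    if not finite.any():
        return None
    # Replace inf/NaN with -inf so they can never win the argmax.
    cleaned = np.where(finite, scores, -np.inf)
    row, col = np.unravel_index(np.argmax(cleaned), cleaned.shape)
    score = float(cleaned[row, col])
    if score <= threshold:
        return None
    # OpenCV reports locations as (x, y), i.e. (column, row).
    return score, (int(col), int(row))

# Hypothetical 3x3 score map standing in for a real correlation image.
demo = np.array([[0.10, np.inf, 0.20],
                 [0.30, 0.95, np.nan],
                 [0.40, 0.50, 0.60]], dtype=np.float32)
print(best_finite_match(demo))
```

In the script above, this would be used in place of the `cv2.minMaxLoc` call and the threshold comparison, passing `correlation` and `threshold`.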

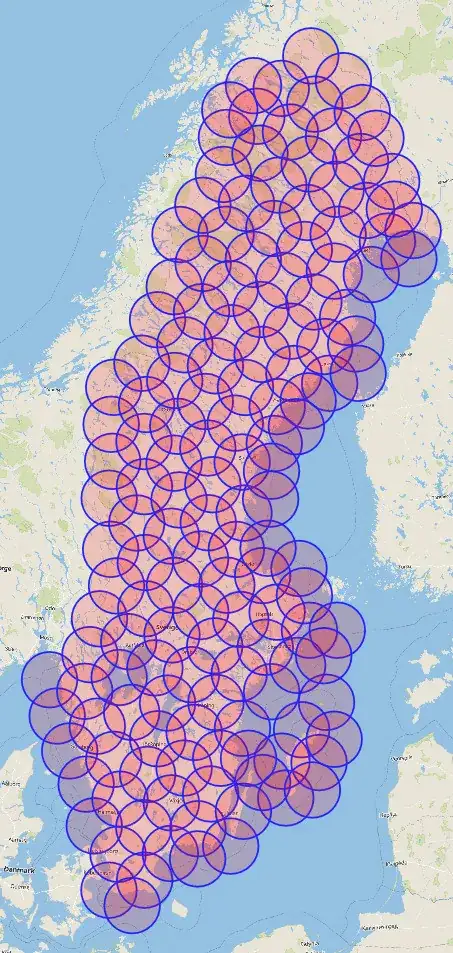

Here is the result of searching for the minimap when black pixels are present:

Here is the result when there are fewer black pixels on screen:

Admittedly, I don't quite understand how the template matching algorithm is treating the alpha layer, so I'm not sure why the black pixels are interfering with the search. Can anyone explain?
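For reference, this is my current reading of how the masked `TM_CCORR_NORMED` score is defined in the OpenCV documentation, written as a NumPy sketch for a single window position. The helper name `masked_ccorr_normed` and the sample arrays are hypothetical, and I am assuming an 8-bit mask acts as a binary mask. I have not verified that OpenCV computes the score exactly this way internally.

```python
import numpy as np

def masked_ccorr_normed(window, template, mask):
    """Score one window with sum(T*I*M^2) / sqrt(sum((T*M)^2) * sum((I*M)^2))."""
    w = window.astype(np.float64)
    t = template.astype(np.float64)
    # Assumption: any nonzero 8-bit mask value counts as weight 1.
    m = (mask > 0).astype(np.float64)
    numerator = np.sum(t * w * m * m)
    denominator = np.sqrt(np.sum((t * m) ** 2) * np.sum((w * m) ** 2))
    # Suppress the NumPy warning so a zero denominator simply yields NaN or inf.
    with np.errstate(divide="ignore", invalid="ignore"):
        return float(numerator / denominator)

# Tiny grayscale template with one "transparent" (masked-out) pixel.
template = np.array([[200, 50], [50, 200]], dtype=np.uint8)
mask = np.array([[255, 0], [255, 255]], dtype=np.uint8)

# Window that matches everywhere except the masked-out pixel.
matching_window = template.copy()
matching_window[0, 1] = 123

# Window that is entirely black.
black_window = np.zeros_like(template)

print(masked_ccorr_normed(matching_window, template, mask))
print(masked_ccorr_normed(black_window, template, mask))
```

If this reading is right, the masked-out pixel has no effect on the first score, while a fully black window makes the denominator zero, so the second score is not a finite number. That may be related to what I am seeing, but I am not sure.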