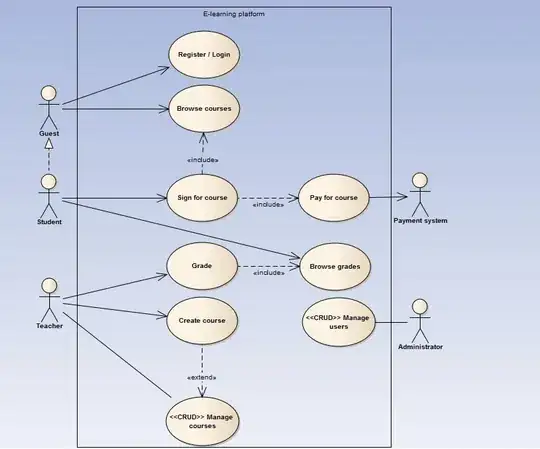

I'm working on a project to detect gaze direction from a webcam feed in Python. Using dlib, OpenCV, and some masking techniques, I can get a 60 fps stream of cropped eye images like the one shown above.

I'm trying to find the coordinates of the iris center in each image as it arrives and return them to the main method.

My current approach divides each frame into eight equal squares, calculates the ratio of black to white pixels inside each one, and multiplies the vector (direction) from the center of the frame to that square by that ratio.
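To make the approach concrete, here is a minimal NumPy sketch of it. It assumes the masked eye image has already been thresholded to a binary array (0 = dark, 255 = bright) and splits it into a 2 × 4 grid of eight cells; the grid layout, the function name `grid_vector_estimate`, and the step that sums the weighted vectors and adds them to the frame center are all illustrative assumptions, since the post does not say exactly how the vectors are combined.

```python
import numpy as np


def grid_vector_estimate(binary_eye, rows=2, cols=4):
    """Hypothetical sketch of the grid-weighting estimate.

    Assumes `binary_eye` is a 2-D uint8 array with 0 = dark, 255 = bright.
    Pixels left over when the size is not divisible by rows/cols are ignored.
    Returns the estimated (x, y) center in pixel coordinates.
    """
    h, w = binary_eye.shape
    cell_h, cell_w = h // rows, w // cols
    center = np.array([w / 2.0, h / 2.0])  # (x, y) of the frame center
    offset = np.zeros(2)
    for r in range(rows):
        for c in range(cols):
            cell = binary_eye[r * cell_h:(r + 1) * cell_h,
                              c * cell_w:(c + 1) * cell_w]
            black = np.count_nonzero(cell == 0)
            white = cell.size - black
            ratio = black / max(white, 1)  # guard against all-black cells
            cell_center = np.array([(c + 0.5) * cell_w, (r + 0.5) * cell_h])
            # Assumption: the weighted direction vectors are summed.
            offset += ratio * (cell_center - center)
    return float(center[0] + offset[0]), float(center[1] + offset[1])


if __name__ == "__main__":
    frame = np.full((40, 80), 255, dtype=np.uint8)
    frame[10:30, 60:80] = 0  # dark blob against the right edge
    print(grid_vector_estimate(frame))  # (100.0, 20.0)
```

In this sketch, a dark blob centered near x ≈ 70 in an 80-pixel-wide frame produces an estimate of x = 100, outside the frame: two cells each contribute a full vector toward the right edge, and those contributions add up. That is one plausible source of the overshoot, though the real implementation may differ.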

Unfortunately, unless the pupil is roughly centered in the frame, this approach tends to overshoot significantly. I'm thinking of adding an oval mask around the frame to remove the shadows, but I wanted to see whether the community has other ideas for detecting the center of the iris.
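The oval-mask idea could look something like the following sketch, again assuming a binary image with 0 = dark and 255 = bright. It builds an ellipse inscribed in the frame with plain NumPy and forces everything outside it to white, so dark corner shadows stop counting as iris pixels. The function name `apply_oval_mask` and the `scale` parameter are hypothetical; with OpenCV, a filled ellipse drawn via `cv2.ellipse(..., thickness=-1)` on a blank mask would serve the same purpose.

```python
import numpy as np


def apply_oval_mask(binary_eye, scale=1.0):
    """Hypothetical sketch: whiten pixels outside an ellipse inscribed in the frame.

    Assumes `binary_eye` is a 2-D uint8 array with 0 = dark, 255 = bright.
    `scale` < 1.0 shrinks the ellipse to trim more of the corners.
    """
    h, w = binary_eye.shape
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0      # ellipse center (pixel coords)
    ay, ax = scale * h / 2.0, scale * w / 2.0  # vertical / horizontal semi-axes
    ys, xs = np.mgrid[0:h, 0:w]                # row and column index grids
    inside = ((xs - cx) / ax) ** 2 + ((ys - cy) / ay) ** 2 <= 1.0
    # Keep original values inside the ellipse; force the outside to white.
    return np.where(inside, binary_eye, 255).astype(np.uint8)
```

Whether this removes the overshoot depends on where the shadows actually fall; shadows that overlap the ellipse would still be counted.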

Here's what I would like the output to be:

And here's what the current algorithm is outputting:

Any ideas are greatly appreciated!