I have a pod with a cron job container and a never-ending container.

Is there a way to mark the pod as successful when the cron job ends?

The problem is that, with the never-ending container in the same pod as the cron job, the pod always remains in active status, and I'd like it to terminate with success. There was a failure on a node, and after restarting it the cron job started twice on the same day; I want to avoid this as well.

I found a solution using activeDeadlineSeconds, but with this property the pod goes into failed status, as described in the docs.

    apiVersion: batch/v1
    kind: CronJob
    metadata:
      name: hello
    spec:
      schedule: "*/1 * * * *"
      concurrencyPolicy: Replace
      jobTemplate:
        spec:
          ttlSecondsAfterFinished: 30
          activeDeadlineSeconds: 10
          template:
            spec:
              hostAliases:
                - ip: "192.168.59.1"
                  hostnames:
                    - "host.minikube.internal"
              containers:
                - name: webapp1
                  image: katacoda/docker-http-server:latest
                  ports:
                    - containerPort: 80
                - name: hello
                  image: quarkus-test
                  imagePullPolicy: Never
                  command: ["./application", "-Dquarkus.http.host=0.0.0.0"]
              restartPolicy: OnFailure

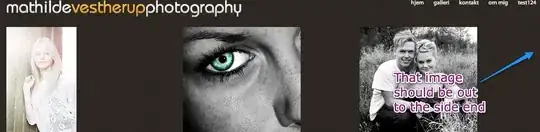

As you can see in this image, the pod starts; after 10 seconds, activeDeadlineSeconds puts the pod in failed status, and after another 30 seconds, ttlSecondsAfterFinished deletes the pod.

As you can see here, the cron job goes from active 1 to active 0.

If I don't use activeDeadlineSeconds the cron job remains always active.

I've read about a solution that shares a volume between the two containers and writes a file when the cron job ends, but I cannot touch the never-ending container: its code is not under my control.
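For reference, the shared-volume approach I read about looks roughly like the sketch below. It is only an illustration of the idea, not something I have running: the volume name `done-signal`, the marker file `/signal/done`, and `/path/to/original-server` are placeholders I made up, and it assumes both images ship `/bin/sh`. It also requires overriding the command of the never-ending container, which is exactly the part I cannot change.

```yaml
# Sketch only: pod template fragment (goes under jobTemplate.spec) for the
# shared-volume approach. All names and paths marked "placeholder" are hypothetical.
template:
  spec:
    volumes:
      - name: done-signal            # placeholder name
        emptyDir: {}
    containers:
      - name: webapp1
        image: katacoda/docker-http-server:latest
        # Assumes the image contains /bin/sh. Runs the server in the
        # background and exits 0 once the marker file appears.
        command: ["/bin/sh", "-c"]
        args:
          - |
            /path/to/original-server &   # placeholder for the image's real entrypoint
            while [ ! -f /signal/done ]; do sleep 1; done
            exit 0
        volumeMounts:
          - name: done-signal
            mountPath: /signal
      - name: hello
        image: quarkus-test
        imagePullPolicy: Never
        # Writes the marker file only if the application exits successfully.
        command: ["/bin/sh", "-c"]
        args:
          - ./application -Dquarkus.http.host=0.0.0.0 && touch /signal/done
        volumeMounts:
          - name: done-signal
            mountPath: /signal
    restartPolicy: OnFailure
```

With something like this, both containers would exit with code 0 after the job finishes, so the pod could complete successfully without activeDeadlineSeconds.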