I am trying to fit a model to my data, which has a dependent variable that can be 0 or 1.

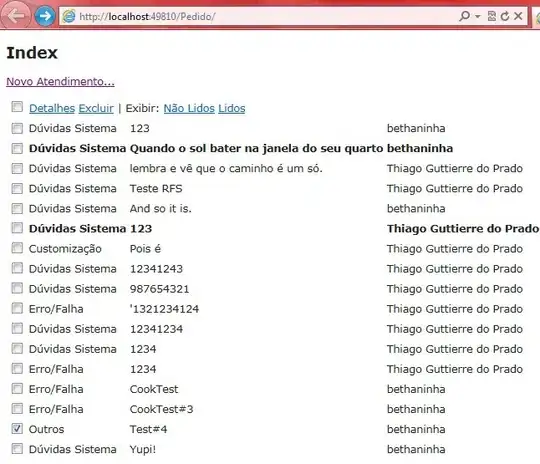

I tried to fit a binomial `glmer` to the data, but as the figure above shows (colored lines are my data, black lines are the fit), the fit is pretty poor. This puzzles me: the data look clearly sigmoid, so I expected this kind of model to fit them well. Am I using the wrong model?
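If I understand the model correctly, the formula `VD ~ as.factor(VI2)*VI1 + (1|ID)` with a logit link says that, for observation $i$ of participant $j$ in VI2 level $k$, the probability that VD = 1 is a logistic (sigmoid) function of VI1. The notation here is my own; I assume R's default treatment contrasts, so the first level of VI2 is the reference ($\beta_{0k} = \beta_{1k} = 0$ for that level):

$$
\Pr(\text{VD}_{ij} = 1) = \operatorname{logit}^{-1}\!\big(\beta_0 + \beta_{0k} + (\beta_1 + \beta_{1k})\,\text{VI1}_{ij} + u_j\big),
\qquad u_j \sim \mathcal{N}(0, \sigma_u^2),
$$

where $\operatorname{logit}^{-1}(\eta) = 1/(1 + e^{-\eta})$. Each VI2 level gets its own intercept and slope, and the random intercept $u_j$ shifts each participant's curve on the logit scale. Each such curve is bounded by 0 and 1, which is why I expected it to match a sigmoid-shaped pattern.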

Here is the code I used in R:

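```r
library(lme4)
library(ggplot2)

exdata <- read.csv("https://raw.githubusercontent.com/FlorianLeprevost/dummydata/main/exdata.csv")

# Binomial GLMM: VD (0/1) ~ VI2 (as a factor) interacting with VI1,
# with a random intercept for each participant (ID)
model <- glmer(VD ~ as.factor(VI2) * VI1 + (1 | ID), data = exdata,
               family = binomial(link = "logit"),
               control = glmerControl(optimizer = "bobyqa",
                                      optCtrl = list(maxfun = 2e5)))
summary(model)

# Fitted probabilities (by default these include the random intercepts)
exdata$fit <- predict(model, type = "response")

# Colored lines: mean observed VD at each VI1 value, per VI2 level
# Black lines: mean fitted probability at each VI1 value, per VI2 level
ggplot(exdata, aes(VI1, VD, color = as.factor(VI2), group = as.factor(VI2))) +
  stat_summary(fun = mean, geom = "line", linewidth = 0.8) +
  stat_summary(aes(y = fit), fun = mean, geom = "line",
               linewidth = 0.8, color = "black") +
  theme_bw()
```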

I also tried fitting without the random effect to see whether that would change anything, but it did not:

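```r
# Same plot, but the black lines are plain (fixed-effects only) logistic
# regressions, fitted separately for each VI2 level by stat_smooth()
ggplot(exdata, aes(x = VI1, y = VD, color = as.factor(VI2),
                   group = as.factor(VI2))) +
  stat_summary(fun.data = mean_se, geom = "line", linewidth = 1) +
  stat_smooth(method = "glm", se = FALSE,
              method.args = list(family = binomial), color = "black")
```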

Here is the data: https://github.com/FlorianLeprevost/dummydata/blob/main/exdata.csv