Your thresholding result looks fine to me. `findContours()` plus `boundingRect()` should fill in the black parts inside each camera view, and `contourArea()` can be used to keep small white regions from being treated as camera views of their own.

So, for example, here's how to run findContours():

```python
import cv2
import matplotlib.pyplot as plt

# This is your post-thresholding image
img_orig = cv2.imread('test183_image.png')
img_gray = cv2.cvtColor(img_orig, cv2.COLOR_BGR2GRAY)

# Find the contours
contours, hierarchy = cv2.findContours(img_gray, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)

# Draw them for debug purposes
img = img_orig.copy()
cv2.drawContours(img, contours, -1, (0, 255, 0), 10)
# OpenCV loads images as BGR; matplotlib expects RGB
plt.imshow(cv2.cvtColor(img, cv2.COLOR_BGR2RGB))
```

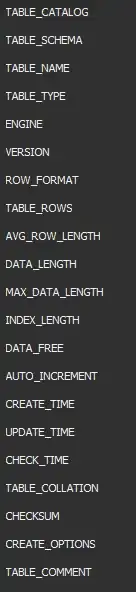

Output:

There's a seventh contour here, in the upper-left corner of the image. It can be filtered out by area like this:

```python
# Reject any contour smaller than min_area
min_area = 20000  # in square pixels
contours = [contour for contour in contours if cv2.contourArea(contour) >= min_area]
```

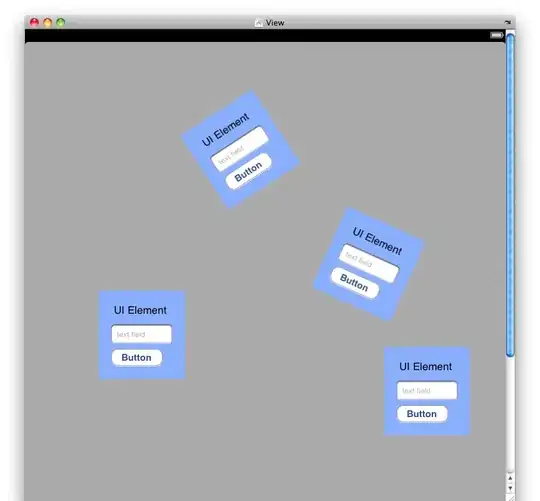

Output:

The next step is to find the minimal upright (axis-aligned) bounding rectangle of each camera view using `boundingRect()`:

```python
# Get bounding rectangle for each contour
bounding_rects = [cv2.boundingRect(contour) for contour in contours]

# Display each rectangle
img = img_orig.copy()
for rect in bounding_rects:
    x, y, w, h = rect
    cv2.rectangle(img, (x, y), (x + w, y + h), (0, 255, 0), 10)
plt.imshow(cv2.cvtColor(img, cv2.COLOR_BGR2RGB))
```

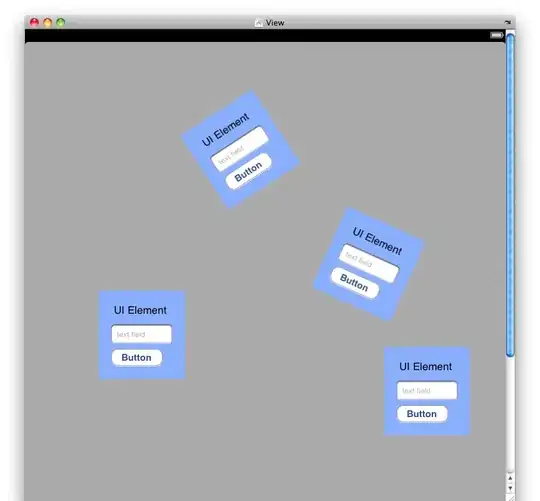

Output:

The `bounding_rects` list now holds the x, y, width, and height of every camera view.
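If you need the cameras in a consistent order, I wouldn't rely on whatever order `findContours()` happens to return them in. Here's a minimal sketch that sorts the rectangles top-to-bottom, then left-to-right. `sort_reading_order` and its `row_tol` tolerance are hypothetical names, and the example rectangles are made up:

```python
def sort_reading_order(rects, row_tol=20):
    """Sort (x, y, w, h) rects top-to-bottom, then left-to-right.

    row_tol (hypothetical, in pixels): rects whose top edge is within
    row_tol of the first rect in the current row join that row.
    """
    rows = []
    for rect in sorted(rects, key=lambda r: r[1]):
        if rows and rect[1] - rows[-1][0][1] < row_tol:
            rows[-1].append(rect)
        else:
            rows.append([rect])
    # Within each row, order by the left edge
    return [rect for row in rows for rect in sorted(row, key=lambda r: r[0])]


rects = [(320, 12, 250, 180), (10, 10, 250, 180), (320, 205, 250, 180), (10, 210, 250, 180)]
ordered = sort_reading_order(rects)
print(ordered)
# [(10, 10, 250, 180), (320, 12, 250, 180), (10, 210, 250, 180), (320, 205, 250, 180)]
```

The tolerance matters because views that look aligned can have top edges a few pixels apart; a plain sort by `(y, x)` would then mix up the columns.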