I am currently using a micro services architecture and under high load (around 1000 requests per second), the services become drastically slow and most of the components that use database keep restarting due to the error

javax.persistence.PersistenceException: org.hibernate.exception.GenericJDBCException: Unable to acquire JDBC Connection

Hikari Logs

DEBUG HikariPool:411 - HikariPool-3 - Pool stats (total=20, active=20, idle=0, waiting=234)

DEBUG HikariPool:411 - HikariPool-3 - Pool stats (total=20, active=20, idle=0, waiting=240)

Symptoms

- Database CPU maxes out

- Slowness of services

- Service downtime due to restarts

- Number of waiting on hikari pool increases

Technologies

- Kubernetes

- Spring cloud

- Hikari for spring boot jdbc pool

- Hibernate

- Postgres 13

- Pgpool to manage postgres database connection pooling

System Configurations

- Database server has 1 master and 2 read replicas (Database server is SSD and has 40Cores dedicated on each node). Server specification ->

PowerEdge R740 with 192GB RAM Processor 2*(Intel(R) Xeon(R) Gold 6154 CPU @ 3.00GHz Core Enabled:18 Thread Count: 36) - 3 pgpool instances: manages db connection for all instances of the micro services.

- 7 microservices. Each can scale to a maximum of 10 pods.

- 7 databases. Average size of 5gb. Each micro service has a separate database.

Async configurations for each micro service (manages threads spawning for spring-boot rest endpoints)

@Override

@Bean(name="taskExecutor")

public AsyncTaskExecutor getAsyncExecutor() {

ThreadPoolTaskExecutor threadPoolTaskExecutor = new ThreadPoolTaskExecutor();

threadPoolTaskExecutor.setThreadNamePrefix("Async-");

threadPoolTaskExecutor.setCorePoolSize(3);

threadPoolTaskExecutor.setMaxPoolSize(3);

threadPoolTaskExecutor.setQueueCapacity(600);

threadPoolTaskExecutor.afterPropertiesSet();

return threadPoolTaskExecutor;

}

Hikari Configurations for each micro service

spring.datasource.hikari.maxLifetime : 18000

spring.datasource.hikari.maxPoolSize : 20

spring.datasource.hikari.idleTimeut : 600

spring.datasource.hikari.minimumIdle : 20

spring.datasource.hikari.connectionTimeout : 30000

Pg-pool configurations

PGPOOL_NUM_INIT_CHILDREN: 128

PGPOOL_MAX_POOL: 20

PGPOOL_CHILD_LIFE_TIME:300

PGPOOL_CLIENT_IDLE_LIMIT:600

PGPOOL_CONNECTION_LIFE_TIME:600

PGPOOL_CHILD_MAX_CONNECTIONS:2

PGPOOL_SR_CHECK_PERIOD: 21600

Query Plan

Planning Time: 0.223 ms

Execution Time: 13.816 ms

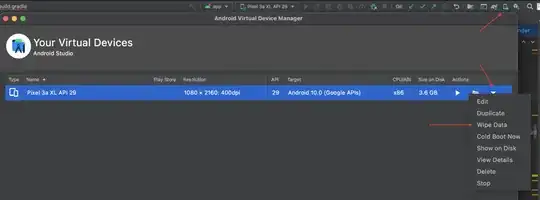

Attached is a screenshot of the new relic image and system architecture

What I have tried

Increased database CPU from 30 cores to 40 cores (however, no matter which value I increase it to, the CPU usage maxes out under high load)

I have tried to update hikari configurations to the default values, but still the issue persists.

spring.datasource.hikari.maxLifetime : 1800000

spring.datasource.hikari.maxPoolSize : 10

spring.datasource.hikari.idleTimeut : 60000

spring.datasource.hikari.minimumIdle : 10

spring.datasource.hikari.connectionTimeout : 30000

I have optimized the queries to only fetch required fields

I have added indexes on the database tables to make queries faster

My Expectations

- Best configurations for hikari and pgpool to eliminate the long time taken to get connection from DB and the "unable to get jdbc exception".

- Suggestions on best springboot configurations in a distributed environment.

- Improvement suggestions on scaling of spring boot microservices