In below example, I am joining two identical tables on two columns:

create table a (id int,txt text);

create table b (id int,txt text);

insert into a select *,* from generate_series(1,40000);

insert into b select *,* from generate_series(1,40000);

analyze a;

analyze b;

explain analyze

select * from a inner join b on a.id = b.id and a.txt = b.txt;

In the explain plan you can see that it underestimates the number of rows that come out of the join by ~40.000. It thinks 1 row comes out instead of 40.000. In my real world example, on which this theoretical example is based on, this is a problem as this gross incorrect estimation on number of rows causes bad execution plans of bigger queries where this join is part of:

Hash Join (... rows=1 ...) (actual ... rows=40000 ...)

So clearly the planner does not know that for each row in table a it will find a row in table b. Clear, how should it? Two fixes come to mind:

(A) Left Join

Using a left join we can correct the estimates:

explain analyze

select * from a LEFT join b on a.id = b.id and a.txt = b.txt;

We can see the estimates are correct now:

Hash Left Join (... rows=40000 ...) (actual ... rows=40000 ...)

(B) Foreign Key

Using a foreign key we can correct the estimates as well:

CREATE UNIQUE INDEX unq_b ON b USING btree (id,txt);

alter table a add constraint fk_a foreign key (id,txt) references b (id,txt);

explain analyze

select * from a inner join b on a.id = b.id and a.txt = b.txt;

We can see the estimates are correct now:

Hash Join (... rows=40000 ...) (actual ... rows=40000 ...)

I neither want to make the join a left join, as I can not guarantee that the query results will then 100% be the same as before in all edge cases. Nor do I want to introduce the FK, as the program does inserts into the tables in a variety of orders and I would have to change the application.

Can you think of other ways to tell the planner about this special relation of these two tables? Maybe a particular way of writing the query? Or some kind of statistics object? Any ideas?

TYVM!

This was tested on two versions:

PostgreSQL 12.9 on x86_64-pc-linux-gnu, compiled by gcc (GCC) 7.3.1 20180712 (Red Hat 7.3.1-12), 64-bit

PostgreSQL 14.6, compiled by Visual C++ build 1914, 64-bit

UPDATE - Example why this misestimation is a problem:

In my real world example it is problematic that postgres thinks that 1 row comes out of the join when in reality 40.000 rows come out. This is because it then decides to do a nested loop of the 1 row (which in reality is 40.000 rows) with a FTS on a big table - so 40.000 FTS on a big table:

create table c (id int,txt text);

insert into c select *,* from generate_series(1,40000000);

analyze c;

SET max_parallel_workers_per_gather = 0;

set join_collapse_limit = 1;

explain

with a_b as (

select a.id a_id,b.id b_id,a.txt a_txt,b.txt b_txt

from a inner join b

on a.id = b.id and a.txt = b.txt

)

select * from a_b inner join c

on a_b.a_id = c.id and a_b.b_txt = c.txt and a_b.b_id = c.id and a_b.a_txt = c.id::text;

Which is 40.000 FTS of table c:

QUERY PLAN |

-----------------------------------------------------------------------+

Nested Loop (cost=1216.00..921352.51 rows=1 width=30) |

Join Filter: ((a.id = c.id) AND (a.txt = c.txt)) |

-> Hash Join (cost=1216.00..2132.01 rows=1 width=18) |

Hash Cond: ((a.id = b.id) AND (a.txt = b.txt)) |

-> Seq Scan on a (cost=0.00..616.00 rows=40000 width=9) |

-> Hash (cost=616.00..616.00 rows=40000 width=9) |

-> Seq Scan on b (cost=0.00..616.00 rows=40000 width=9)|

-> Seq Scan on c (cost=0.00..916220.48 rows=200001 width=12) |

Filter: (txt = (id)::text) |

Interestingly the left join trick does not even work here, only the FK corrects the estimations and therefore the plan:

/* left join trick not working*/

explain

with a_b as (

select a.id a_id,b.id b_id,a.txt a_txt,b.txt b_txt

from a LEFT join b

on a.id = b.id and a.txt = b.txt

)

select * from a_b inner join c

on a_b.a_id = c.id and a_b.b_txt = c.txt and a_b.b_id = c.id and a_b.a_txt = c.id::text;

/*QUERY PLAN |

-----------------------------------------------------------------------+

Nested Loop (cost=1216.00..921352.51 rows=1 width=30) |

Join Filter: ((a.id = c.id) AND (a.txt = c.txt)) |

-> Hash Join (cost=1216.00..2132.01 rows=1 width=18) |

Hash Cond: ((a.id = b.id) AND (a.txt = b.txt)) |

-> Seq Scan on a (cost=0.00..616.00 rows=40000 width=9) |

-> Hash (cost=616.00..616.00 rows=40000 width=9) |

-> Seq Scan on b (cost=0.00..616.00 rows=40000 width=9)|

-> Seq Scan on c (cost=0.00..916220.48 rows=200001 width=12) |

Filter: (txt = (id)::text) |*/

/* with the FK the plan is correct */

CREATE UNIQUE INDEX unq_b ON b USING btree (id,txt);

alter table a add constraint fk_a foreign key (id,txt) references b (id,txt);

explain

with a_b as (

select a.id a_id,b.id b_id,a.txt a_txt,b.txt b_txt

from a join b

on a.id = b.id and a.txt = b.txt

)

select * from a_b inner join c

on a_b.a_id = c.id and a_b.b_txt = c.txt and a_b.b_id = c.id and a_b.a_txt = c.id::text;

/*QUERY PLAN |

-----------------------------------------------------------------------------+

Hash Join (cost=2642.00..920362.50 rows=1 width=30) |

Hash Cond: ((c.id = a.id) AND (c.txt = a.txt)) |

-> Seq Scan on c (cost=0.00..916220.48 rows=200001 width=12) |

Filter: (txt = (id)::text) |

-> Hash (cost=2042.00..2042.00 rows=40000 width=18) |

-> Hash Join (cost=1216.00..2042.00 rows=40000 width=18) |

Hash Cond: ((a.id = b.id) AND (a.txt = b.txt)) |

-> Seq Scan on a (cost=0.00..616.00 rows=40000 width=9) |

-> Hash (cost=616.00..616.00 rows=40000 width=9) |

-> Seq Scan on b (cost=0.00..616.00 rows=40000 width=9)|*/

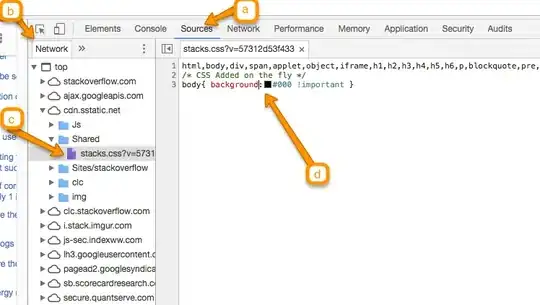

Screenshot of the execution plan of the real world example that this sample is based on (green arrows show the problem). Note that the real world example has the 1:1 problem 2 times in a row (2 FKs would solve it here):