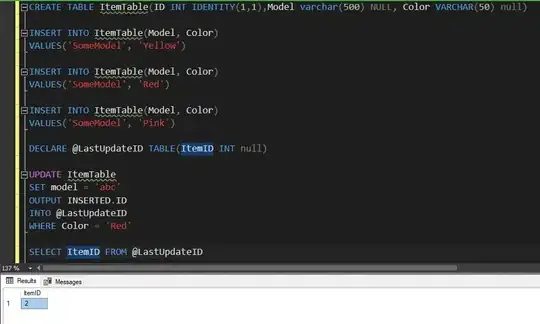

We have an Azure Data Factory data flow that sinks into Delta. The sink has the Overwrite and Allow insert options set, and Vacuum = 1. When we run the pipeline repeatedly with no change in the table structure, it succeeds. But when the structure of the data being written changes (for example, column data types change), the pipeline fails with the error below.

```
Error code: DFExecutorUserError
Failure type: User configuration issue
Details: Job failed due to reason: at Sink 'ConvertToDelta': Job aborted.
```

We tried setting Vacuum to 0 and back, enabling Merge schema, and switching from Overwrite to Truncate and back again, but the pipeline still fails.
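To illustrate why Merge schema alone may not help here, below is a minimal, simplified Python model of Delta Lake's schema-enforcement rules. It assumes (not confirmed for ADF specifically) that the ADF Delta sink ultimately applies the standard Delta Lake behavior: merging a schema can add new columns, but changing an existing column's data type is rejected, apart from a few documented safe upcasts (NullType to any type, and ByteType to ShortType to IntegerType). The function name `schema_conflicts` and the type strings are hypothetical and for illustration only; this is not Delta's or ADF's actual code.

```python
from typing import Dict, List

# Simplified assumption based on Delta Lake docs: with mergeSchema enabled,
# only these widenings of an existing column's type are tolerated
# (plus NullType -> any type, handled separately below).
_WIDENING = {
    ("byte", "short"),
    ("byte", "int"),
    ("short", "int"),
}


def schema_conflicts(table: Dict[str, str], incoming: Dict[str, str],
                     merge_schema: bool) -> List[str]:
    """Hypothetical check: return reasons a write would be rejected (empty = accepted)."""
    problems = []
    for col, new_type in incoming.items():
        old_type = table.get(col)
        if old_type is None:
            # A column the table does not have yet: only allowed when merging schemas.
            if not merge_schema:
                problems.append(f"new column '{col}' requires mergeSchema")
        elif old_type != new_type:
            # A changed data type: only a few safe widenings pass, and only with mergeSchema.
            allowed = merge_schema and (
                old_type == "null" or (old_type, new_type) in _WIDENING)
            if not allowed:
                problems.append(f"column '{col}': {old_type} -> {new_type} not allowed")
    return problems


if __name__ == "__main__":
    table = {"id": "int", "amount": "string"}
    incoming = {"id": "int", "amount": "decimal(18,2)", "note": "string"}
    # The new column 'note' passes with mergeSchema; the type change on 'amount' does not.
    for reason in schema_conflicts(table, incoming, merge_schema=True):
        print(reason)
```

In plain Delta Lake (outside ADF), a type change like this is normally handled by an overwrite with the `overwriteSchema` option rather than `mergeSchema`; whether and how the ADF Delta sink exposes an equivalent setting is not confirmed here.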