I'm trying to recognize LEGO bricks from a video camera using OpenCV. It performs far worse than simply running detect.py from YOLOv5. So I ran some experiments on a single still image, and found that going through OpenCV still gives dramatically worse results. Is there any clue as to why? Here are the experiments I did.

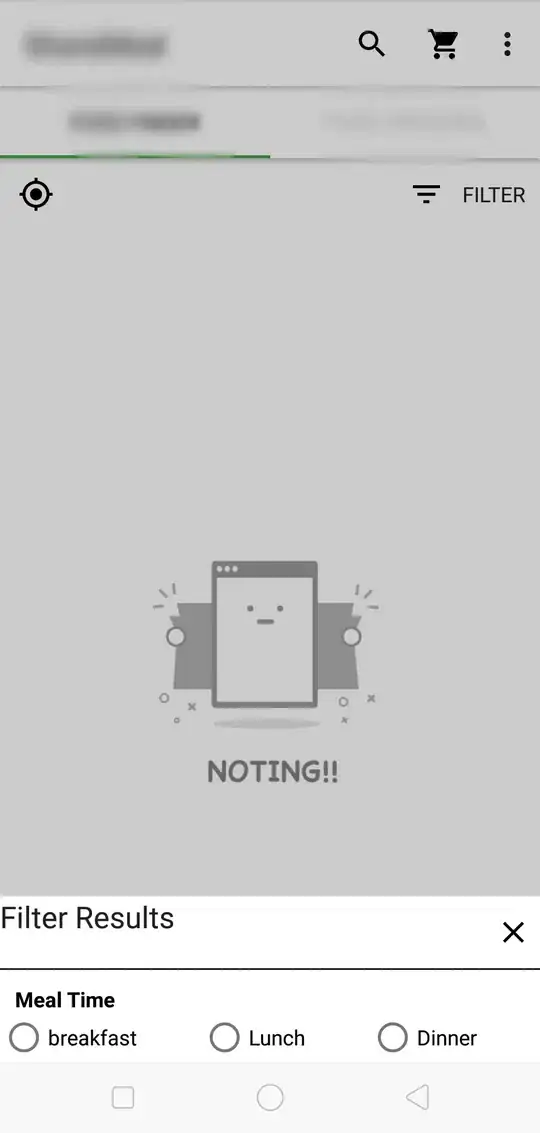

This is the result from detect.py, run with:

    python detect.py --weights runs/train/yolo/weights/best.pt --source legos.jpg

This is the result when going through OpenCV, using this script:

    import torch
    import cv2
    import numpy as np

    model = torch.hub.load('.', 'custom', path='runs/train/yolo/weights/last.pt', source='local')
    cap = cv2.VideoCapture('legos.jpg')

    while cap.isOpened():
        ret, frame = cap.read()

        # Make detections
        results = model(frame)
        cv2.imshow('YOLO', np.squeeze(results.render()))

        if cv2.waitKey(0) & 0xFF == ord('q'):
            break

    cap.release()
    cv2.destroyAllWindows()

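If I skip OpenCV and pass the file path straight to the model, the result is pretty good:

    import torch

    # `model` is loaded with torch.hub.load exactly as in the script above
    results = model('legos.jpg')
    results.show()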
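One difference between the two paths that may be worth ruling out is channel order. OpenCV returns frames as BGR, while the YOLOv5 hub model treats a numpy array as RGB, so the frame passed in the OpenCV script may reach the model with red and blue swapped. (The OpenCV script also loads `last.pt` where the detect.py command uses `best.pt`, which is a separate thing to check.) Below is a minimal sketch of the channel swap on a synthetic one-pixel frame; `bgr_to_rgb` is a hypothetical helper name, not part of OpenCV or YOLOv5.

```python
import numpy as np


def bgr_to_rgb(frame: np.ndarray) -> np.ndarray:
    """Reverse the last (channel) axis of an HxWx3 image.

    Hypothetical helper: the same reversal maps BGR to RGB and RGB to BGR.
    """
    # Slicing with ::-1 yields a view with negative strides; copy it into a
    # contiguous array so downstream libraries accept it without complaint.
    return np.ascontiguousarray(frame[..., ::-1])


# Synthetic 1x1 "frame" holding one pure-blue pixel in OpenCV's BGR order.
frame_bgr = np.zeros((1, 1, 3), dtype=np.uint8)
frame_bgr[0, 0] = (255, 0, 0)  # B=255, G=0, R=0

frame_rgb = bgr_to_rgb(frame_bgr)
print(frame_rgb[0, 0].tolist())  # [0, 0, 255]
```

If this is the cause, the loop would call `model(bgr_to_rgb(frame))`, and the rendered image would need the same swap back before `cv2.imshow`, since `imshow` expects BGR.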

Any genius ideas?