I would like to use a decision tree as proposed by Yan et al. (2004), "Adaptive Testing With Regression Trees in the Presence of Multidimensionality" (https://journals.sagepub.com/doi/epdf/10.3102/10769986029003293), which produces trees like this:

It also seems that a similar (or the same?) idea was proposed more recently under the name "decision stream": https://arxiv.org/pdf/1704.07657.pdf

The goal is to perform the usual splitting at each node, as in CART or a similar decision tree, but then merge nodes at the same level whose target values (e.g. the node means) differ by less than some prespecified threshold.
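The merge step described above could look roughly like the following. This is only a sketch under assumptions: `merge_close_nodes` and its arguments are hypothetical names, and the greedy pass over nodes sorted by mean is just one possible reading of "difference smaller than a prespecified value", not necessarily the rule used by Yan et al. or by decision stream.

```r
# Greedy merge of same-level nodes whose mean outcomes are close (sketch).
# node_means: mean outcome per node; node_sizes: number of observations per node.
# Returns one integer group label per node; nodes sharing a label are merged.
merge_close_nodes <- function(node_means, node_sizes, threshold) {
  ord <- order(node_means)            # visit nodes from lowest to highest mean
  group <- integer(length(node_means))
  g <- 0L                             # current group label
  g_sum <- 0                          # weighted sum of outcomes in current group
  g_n <- 0                            # observations in current group
  for (i in ord) {
    g_mean <- if (g_n > 0) g_sum / g_n else NA
    # Start a new group if there is none yet or the node is too far away
    if (g == 0L || abs(node_means[i] - g_mean) >= threshold) {
      g <- g + 1L
      g_sum <- 0
      g_n <- 0
    }
    group[i] <- g
    g_sum <- g_sum + node_means[i] * node_sizes[i]
    g_n <- g_n + node_sizes[i]
  }
  group
}

# Four nodes at one level; the two middle ones differ by 1 and are merged.
merge_close_nodes(c(15, 34, 35, 55), c(25, 25, 25, 25), threshold = 5)
# groups: 1 2 2 3
```

Comparing each node against the running (size-weighted) mean of the current group, rather than against its immediate neighbour, keeps a chain of small differences from merging nodes that are far apart overall.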

I didn't find a package that can do this (I think SAS might be able to, and there is the decision stream implementation in Clojure). I looked into the partykit package, hoping I could modify it to produce this behavior, but there are two main issues. First, the tree is constructed recursively, so nodes don't know about other nodes at the same level. Second, the tree representation does not allow pointing to nodes that are already in the tree, so a node can only have one parent, whereas I would need more. I also thought about repeatedly fitting tree stumps, merging nodes, and repeating, but I don't know how I would make predictions with something like that.
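One way the stump-and-merge idea might still yield predictions is to store the result as a flat node table instead of a nested tree, so that several parents can point to the same child. Below is a minimal sketch, assuming binary 0/1 items. The table `dag`, its column names, the node means, and `predict_dag` are all hypothetical and hand-written for illustration; none of this comes from partykit or rpart.

```r
# Hypothetical node table for a merged tree (a DAG). Row i describes node i.
# `left` / `right` give the child id for item == 0 / item == 1 (NA for leaves).
# Node 5 is a child of both node 2 and node 3, i.e. it has two parents.
dag <- data.frame(
  id    = 1:6,
  var   = c("V1", "V2", "V2", NA, NA, NA),
  left  = c(2, 4, 5, NA, NA, NA),
  right = c(3, 5, 6, NA, NA, NA),
  mean  = c(35, 25, 45, 15, 35, 55),
  stringsAsFactors = FALSE
)

# Predict by walking from the root (node 1) until a leaf is reached.
# Relies on node ids being equal to row indices of `dag`.
predict_dag <- function(dag, newdata) {
  vapply(seq_len(nrow(newdata)), function(i) {
    node <- 1
    while (!is.na(dag$var[node])) {
      go_right <- newdata[i, dag$var[node]] == 1
      node <- if (go_right) dag$right[node] else dag$left[node]
    }
    dag$mean[node]
  }, numeric(1))
}

new_subjects <- data.frame(V1 = c(0, 0, 1, 1), V2 = c(0, 1, 0, 1))
predict_dag(dag, new_subjects)
# predictions: 15 35 35 55
```

Because prediction only follows child ids, it does not matter how many parents a node has; the second and third subjects take different paths but end in the same merged node.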

Edit: some example code

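    set.seed(1)
    n_subjects <- 100
    n_items <- 4

    # Balanced 0/1 responses, shuffled independently within each item (column)
    responses <- matrix(rep(c(0, 1), times = (n_subjects / 2) * n_items),
                        ncol = n_items)
    responses <- as.data.frame(apply(responses, 2, function(x) sample(x)))

    weights <- c(20, 20, 20, 10)
    # Note: `*` recycles `weights` down the rows of the data frame, so the
    # weight varies by subject rather than by item. For per-item weights one
    # could use as.vector(as.matrix(responses[, 1:n_items]) %*% weights).
    responses$outcome <- rowSums(responses[, 1:n_items] * weights)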

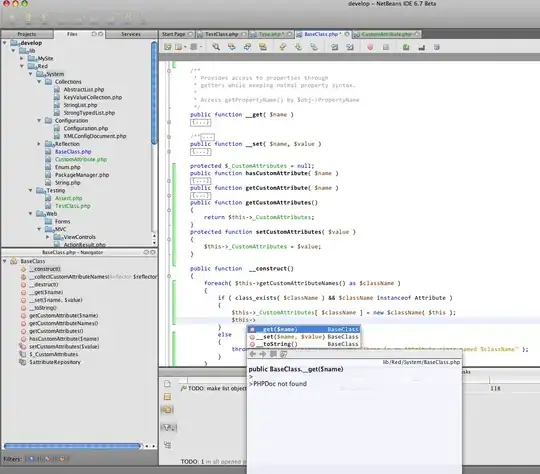

    library(rpart)   # provides rpart()
    library(rattle)  # provides fancyRpartPlot()

    tree <- rpart(outcome ~ ., data = responses)
    fancyRpartPlot(tree, tweak = 1.2, sub = '')

I would expect nodes 5 and 6 to be merged, because their outcome values are basically the same (34 and 35), and splitting to then continue from the merged node.