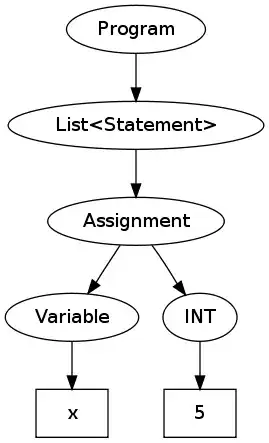

I've set up a Data Factory v2 pipeline with one Databricks notebook activity. The Databricks notebook creates an SQL cursor, mounts the storage account, and rejects or stages files (blobs) that arrive in the configured storage account path. The infrastructure of the project is shown below:

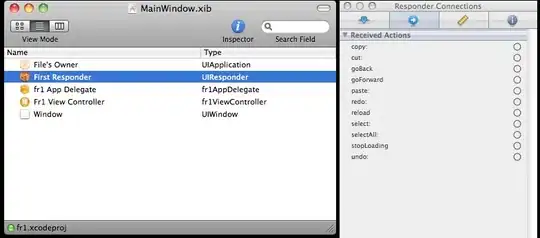

I initially set up a trigger so that once a file is uploaded to Blob Storage, the pipeline (which runs the Databricks notebook) is triggered. However, the pipeline never triggered. I then tried debugging the Databricks activity in ADF with no default value defined for the pipeline parameter, but it failed with an error. When I specified a default value for the pipeline parameter (Product.csv), the pipeline ran perfectly.

I initially defined the pipeline parameters fileName and folderPath, leaving the default value empty for both, but the pipeline fails with an error.
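A minimal sketch of how the parameters could be wired from the pipeline into the notebook activity, written as a Python dict that serializes to the pipeline JSON. It assumes the pipeline parameters are forwarded to the notebook through the activity's `baseParameters`; the pipeline name, linked service name and notebook path are hypothetical placeholders. Note that a manual debug run involves no trigger, so ADF asks for parameter values in the debug pane; leaving them blank would pass an empty `fileName` to the notebook, which would be consistent with the error described above.

```python
import json

# Hypothetical names: pipeline, linked service and notebook path are
# placeholders, not taken from the original setup.
PIPELINE_NAME = "StageFilesPipeline"

pipeline_definition = {
    "name": PIPELINE_NAME,
    "properties": {
        # No defaultValue: a trigger (or the debug pane) must supply values.
        "parameters": {
            "fileName": {"type": "string"},
            "folderPath": {"type": "string"},
        },
        "activities": [
            {
                "name": "RunStagingNotebook",
                "type": "DatabricksNotebook",
                "linkedServiceName": {
                    "referenceName": "AzureDatabricksLinkedService",
                    "type": "LinkedServiceReference",
                },
                "typeProperties": {
                    "notebookPath": "/Shared/stage_files",
                    # Forward pipeline parameters to the notebook; each key
                    # becomes a widget name inside the notebook.
                    "baseParameters": {
                        "fileName": "@pipeline().parameters.fileName",
                        "folderPath": "@pipeline().parameters.folderPath",
                    },
                },
            }
        ],
    },
}

print(json.dumps(pipeline_definition, indent=2))
```

The printed JSON can be compared against the pipeline's JSON view in the ADF authoring UI; the important part is that `baseParameters` references `@pipeline().parameters.*` rather than a hard-coded file name.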

I have included the following parameters within my pipeline:

When fileName is set to the CSV name, the pipeline works. I believe I should be able to leave the default value empty and, with the parameters defined, have the blob and folder names picked up, passed through the pipeline, and read by Databricks when the notebook executes. I'm unsure what I'm doing wrong and would appreciate any help!
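On the Databricks side, a sketch of how the notebook might read the forwarded values and fail early with a clear message when `fileName` is empty. It assumes the parameters arrive as widgets named `fileName` and `folderPath`, that `folderPath` starts with the container name, and that the container is mounted at `/mnt/landing`; the mount point and helper names are hypothetical. `dbutils` only exists inside Databricks, so outside it the helper returns the default.

```python
import posixpath

# Hypothetical mount point: assumed to be where the notebook mounts the
# container that the trigger watches.
MOUNT_POINT = "/mnt/landing"


def read_param(name: str, default: str = "") -> str:
    """Read a notebook parameter passed from ADF via baseParameters."""
    try:
        # Declaring the widget first avoids an error when the notebook is
        # run interactively; a value passed by ADF takes precedence.
        dbutils.widgets.text(name, default)  # noqa: F821 (Databricks global)
        return dbutils.widgets.get(name)  # noqa: F821
    except NameError:
        # Not running inside Databricks (e.g. local testing).
        return default


def build_blob_path(file_name: str, folder_path: str,
                    mount_point: str = MOUNT_POINT) -> str:
    """Turn fileName/folderPath values into a path under the mount."""
    file_name = (file_name or "").strip()
    if not file_name:
        # Fail clearly instead of with a confusing downstream error.
        raise ValueError(
            "fileName is empty: was the pipeline run without a trigger "
            "or a debug parameter value?"
        )
    # folderPath is assumed to begin with the container name, which the
    # mount already represents, so drop that first segment.
    parts = (folder_path or "").strip().strip("/").split("/", 1)
    sub_folder = parts[1] if len(parts) == 2 else ""
    return posixpath.join(mount_point, sub_folder, file_name)
```

In the notebook this could be used as `path = build_blob_path(read_param("fileName"), read_param("folderPath"))` before the stage/reject logic runs.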

I would also like to know how to ensure that the pipeline is triggered as soon as a file lands in Blob Storage.
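A sketch of a storage event trigger that fires on blob creation and maps the event's blob name and folder onto the pipeline parameters, again as a Python dict serializing to the trigger JSON. The scope, container (`landing`), folder (`incoming`) and pipeline name are hypothetical placeholders. Storage event triggers are delivered through Azure Event Grid, so if a trigger never fires, it may be worth checking that the `Microsoft.EventGrid` resource provider is registered in the subscription and that the trigger has been started and published; whether either applies here is an assumption.

```python
import json

# Placeholders: subscription, resource group, storage account, container,
# folder and pipeline name are hypothetical and must be replaced.
STORAGE_SCOPE = (
    "/subscriptions/<subscription-id>/resourceGroups/<resource-group>"
    "/providers/Microsoft.Storage/storageAccounts/<storage-account>"
)

trigger_definition = {
    "name": "OnBlobCreated",
    "properties": {
        "type": "BlobEventsTrigger",
        "typeProperties": {
            "scope": STORAGE_SCOPE,
            "events": ["Microsoft.Storage.BlobCreated"],
            # Path filters use the form /<container>/blobs/<folder>/
            "blobPathBeginsWith": "/landing/blobs/incoming/",
            "blobPathEndsWith": ".csv",
            "ignoreEmptyBlobs": True,
        },
        "pipelines": [
            {
                "pipelineReference": {
                    "referenceName": "StageFilesPipeline",
                    "type": "PipelineReference",
                },
                # Map the event's blob name and folder onto pipeline parameters.
                "parameters": {
                    "fileName": "@triggerBody().fileName",
                    "folderPath": "@triggerBody().folderPath",
                },
            }
        ],
    },
}

print(json.dumps(trigger_definition, indent=2))
```

With this mapping, each new `.csv` blob under the filtered folder starts one pipeline run with that blob's name and folder, so no default value is needed on the pipeline parameters.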