According to the documentation of the function train, the input x can be:

a data frame containing training data where samples are in rows and features are in columns.

And y is the outcome:

A numeric or factor vector containing the outcome for each sample.
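As a rough sketch of this x/y interface (not the approach used in the reprex further down, which uses a formula), the call below passes a data frame with one factor column as x and a numeric vector as y. Turning cyl into a factor and using trainControl(method = "none") to skip resampling are assumptions made only to keep the illustration small.

```r
library(caret)

data("mtcars")

# Illustrative setup: treat cyl as a factor so x holds both variable types
x <- mtcars[, c("mpg", "wt", "cyl")]
x$cyl <- factor(x$cyl)
y <- mtcars$hp

# method = "none" fits a single model without any resampling
fit_xy <- train(
  x = x,
  y = y,
  method = "lm",
  trControl = trainControl(method = "none")
)

# Predictions for the training rows
pred_xy <- predict(fit_xy, newdata = x)
```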

So you can use both numeric and factor variables. With the ~. notation, all other variables in your data are used as predictors. Centering and scaling the numeric variables is indeed a good approach, and you can do both through the preProcess argument of the train function. Factor variables are ignored in that step, according to the documentation of preProcess:

a matrix or data frame. Non-numeric predictors are allowed but will be ignored.
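A minimal sketch of that behaviour, calling preProcess directly on a small made-up data frame (the toy data and its column names are purely illustrative):

```r
library(caret)

# Toy data (made up for illustration): two numeric columns and one factor
toy <- data.frame(
  a = c(1, 2, 3, 4, 5),
  b = c(10, 25, 30, 45, 50),
  g = factor(c("x", "y", "x", "y", "x"))
)

# Estimate centering and scaling parameters, then apply them
pp <- preProcess(toy, method = c("center", "scale"))
toy_scaled <- predict(pp, toy)

# Numeric columns are standardized; the factor column comes back unchanged
sapply(toy_scaled[, c("a", "b")], mean)
sapply(toy_scaled[, c("a", "b")], sd)
identical(toy_scaled$g, toy$g)
```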

On page 550 of the book Applied Predictive Modeling (by Max Kuhn and Kjell Johnson), you can find which pre-processing methods are suggested for linear regression. It says:

- Center and scaling (CS)

- Remove near-zero predictors (NZV)

- Remove highly correlated predictors (Corr)
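These three steps can be sketched with a single preProcess call, assuming the mtcars predictors as example data and the default cutoffs of the "nzv" and "corr" filters. Which columns get dropped depends on those cutoffs, so treat this as an illustration and not as a recommended configuration.

```r
library(caret)

data("mtcars")

# Use every column except the outcome (hp) as a predictor
predictors <- mtcars[, setdiff(names(mtcars), "hp")]

# "nzv" drops near-zero variance predictors, "corr" drops highly
# correlated ones, and the remaining columns are centered and scaled
pp <- preProcess(
  predictors,
  method = c("center", "scale", "nzv", "corr")
)
processed <- predict(pp, predictors)

# Compare the predictors before and after filtering
names(predictors)
names(processed)
```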

One option for handling factor variables in preprocessing is to use dummy variables. In caret you can use the function dummyVars to convert factor variables into numeric variables.

Example code:

library(caret)

library(tibble)

# Data

data("mtcars")

mydata = mtcars[, -c(8,9)]

set.seed(100)

mydata$dir = sample(x=c("N", "E", "S", "W"), size = 32, replace = TRUE)

mydata$dir = as.factor(mydata$dir)

class(mydata$dir) # Factor with four levels

#> [1] "factor"

# Create dummy variables

dummy_mydata <- dummyVars(hp~., data = mydata)

dummy_mydata_updated <- as_tibble(predict(dummy_mydata, newdata = mydata))

# remember to include the outcome variable too

dummy_mydata_updated <- cbind(hp = mydata$hp, dummy_mydata_updated)

head(dummy_mydata_updated)

#> hp mpg cyl disp drat wt qsec gear carb dir.E dir.N dir.S dir.W

#> 1 110 21.0 6 160 3.90 2.620 16.46 4 4 1 0 0 0

#> 2 110 21.0 6 160 3.90 2.875 17.02 4 4 0 0 1 0

#> 3 93 22.8 4 108 3.85 2.320 18.61 4 1 1 0 0 0

#> 4 110 21.4 6 258 3.08 3.215 19.44 3 1 0 0 0 1

#> 5 175 18.7 8 360 3.15 3.440 17.02 3 2 0 0 1 0

#> 6 105 18.1 6 225 2.76 3.460 20.22 3 1 0 1 0 0

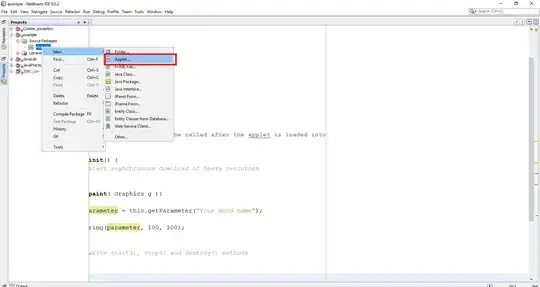

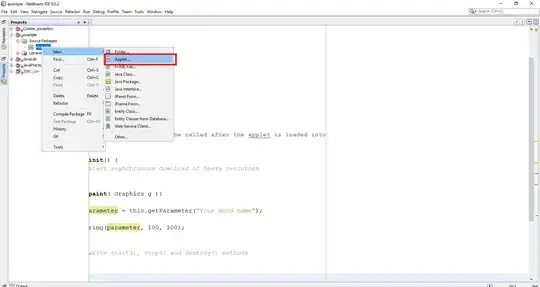

MyControl = trainControl(

method = "repeatedcv",

number = 5,

repeats = 2,

verboseIter = TRUE,

savePredictions = "final"

)

model_glm <- train(

hp ~ .,

data = mydata,

method = "glm",

metric = "RMSE",

preProcess = c('center', 'scale'),

trControl = MyControl

)

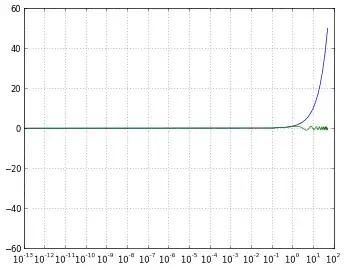

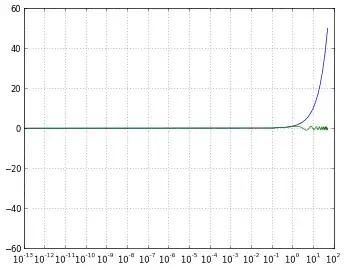

plot(model_glm$pred$pred, model_glm$pred$obs,

xlab='Predicted Values',

ylab='Actual Values',

main='Predicted vs. Actual Values')

abline(a=0, b=1)

model_glm_dummy <- train(

hp ~ .,

data = dummy_mydata_updated,

method = "glm",

metric = "RMSE",

preProcess = c('center', 'scale'),

trControl = MyControl

)

plot(model_glm_dummy$pred$pred, model_glm_dummy$pred$obs,

xlab='Predicted Values',

ylab='Actual Values',

main='Predicted vs. Actual Values')

abline(a=0, b=1)

# Results

model_glm$results

#> parameter RMSE Rsquared MAE RMSESD RsquaredSD MAESD

#> 1 none 37.13849 0.7302309 32.09739 11.50226 0.2143993 10.10452

model_glm_dummy$results

#> parameter RMSE Rsquared MAE RMSESD RsquaredSD MAESD

#> 1 none 35.71861 0.8095385 29.678 8.959409 0.1193792 5.616908

Created on 2022-10-23 with reprex v2.0.2

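As you can see, there is a slight difference in results between the two models: the cross-validated RMSE is about 37.1 with the factor variable and about 35.7 with the dummy variables.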
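One caveat with the dummy-variable route, offered as a sketch and not as part of the comparison above: by default dummyVars creates one column per factor level (dir.E, dir.N, dir.S and dir.W here), and together these are linearly dependent on the model intercept. The fullRank argument of dummyVars drops one level per factor, which may be the safer encoding for linear models. The object names dv_full and x_full are made up for this example.

```r
library(caret)

data("mtcars")

# Same data preparation as in the reprex
mydata <- mtcars[, -c(8, 9)]
set.seed(100)
mydata$dir <- factor(
  sample(c("N", "E", "S", "W"), size = 32, replace = TRUE)
)

# fullRank = TRUE keeps (number of levels - 1) dummy columns per factor
dv_full <- dummyVars(hp ~ ., data = mydata, fullRank = TRUE)
x_full <- as.data.frame(predict(dv_full, newdata = mydata))

# Add the outcome back, as in the reprex
x_full <- cbind(hp = mydata$hp, x_full)
names(x_full)
```

The resulting data frame can then be passed to train in the same way as dummy_mydata_updated.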

For a really useful source, check this website