Great Expectations creates temporary tables. I tried profiling data in my Snowflake lab. It worked because the role I was using could create tables in the schema that contained the tables I was profiling.

I tried to profile a table in a Snowflake share, where we can't create objects, and it failed:

(snowflake.connector.errors.ProgrammingError) 002003 (02000): SQL compilation error:

Schema 'OUR_DATABASE.SNOWFLAKE_SHARE_SCHEMA' does not exist or not authorized.

[SQL: CREATE OR REPLACE TEMPORARY TABLE ge_temp_3eb6c50b AS SELECT *

FROM "SNOWFLAKE_SHARE_SCHEMA"."INTERESTING_TABLE"

WHERE true]

(Background on this error at: https://sqlalche.me/e/14/f405)

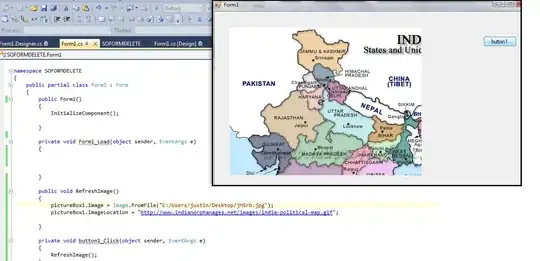

Here's the output from the CLI:

% great_expectations suite new

Using v3 (Batch Request) API

How would you like to create your Expectation Suite?

1. Manually, without interacting with a sample batch of data (default)

2. Interactively, with a sample batch of data

3. Automatically, using a profiler

: 3

A batch of data is required to edit the suite - let's help you to specify it.

Select data_connector

1. default_runtime_data_connector_name

2. default_inferred_data_connector_name

3. default_configured_data_connector_name

: 3

Which data asset (accessible by data connector "default_configured_data_connector_name") would you like to use?

1. INTERESTING_TABLE

Type [n] to see the next page or [p] for the previous. When you're ready to select an asset, enter the index.

: 1

Name the new Expectation Suite [INTERESTING_TABLE.warning]:

Great Expectations will create a notebook, containing code cells that select from available columns in your dataset and

generate expectations about them to demonstrate some examples of assertions you can make about your data.

When you run this notebook, Great Expectations will store these expectations in a new Expectation Suite "INTERESTING_TABLE.warning" here:

file:///path/to-my-repo/great_expectations/expectations/INTERESTING_TABLE/warning.json

Would you like to proceed? [Y/n]: Y

Here's the datasources section from great_expectations.yml:

datasources:

our_snowflake:

class_name: Datasource

module_name: great_expectations.datasource

execution_engine:

module_name: great_expectations.execution_engine

credentials:

host: xyz92716.us-east-1

username: MYUSER

query:

schema: MYSCHEMA

warehouse: MY_WAREHOUSE

role: RW_ROLE

password: password1234

drivername: snowflake

class_name: SqlAlchemyExecutionEngine

data_connectors:

default_runtime_data_connector_name:

class_name: RuntimeDataConnector

batch_identifiers:

- default_identifier_name

module_name: great_expectations.datasource.data_connector

default_inferred_data_connector_name:

include_schema_name: true

class_name: InferredAssetSqlDataConnector

introspection_directives:

schema_name: SNOWFLAKE_SHARE_SCHEMA

module_name: great_expectations.datasource.data_connector

default_configured_data_connector_name:

assets:

INTERESTING_TABLE:

schema_name: SNOWFLAKE_SHARE_SCHEMA

class_name: Asset

module_name: great_expectations.datasource.data_connector.asset

class_name: ConfiguredAssetSqlDataConnector

module_name: great_expectations.datasource.data_connector

How can I tweak great_expectations.yml so that temporary objects are created in a separate database and schema from the datasource?

As a workaround, we created a view in the schema with read/write that points to the data in the read-only share. That adds an extra step. I'm hoping there's a simple config to create temporary objects outside the schema being profiled.