I have a job whose latency I want to track with Prometheus. The source values look like this:

| timestamp | latency |
|-----------|---------|
| 0         | 15ms    |
| 1         | 20ms    |
| 2         | 18ms    |
| 5         | 22ms    |
| 6         | 30ms    |
| 8         | 5ms     |

Currently I use a CloudWatch Insights query to get some statistics for this latency data. For example, for the above data, I can calculate the min, max, average, and standard deviation in 5-minute bins:

| stats avg(interval), stddev(interval), min(interval), max(interval) by bin(5m)

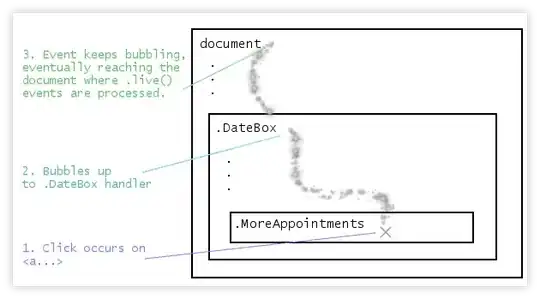

And this gives me a nice little graph like this:

In Prometheus, I am currently using two counters:

- duration_seconds_total

- duration_count_total

These allow me to calculate the average latency over 5 minutes:

rate(duration_seconds_total[5m]) / rate(duration_count_total[5m])
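To make the arithmetic behind that expression concrete, here is a minimal Python sketch using the sample data above. The helper names (`counters_at`, `average_latency`) are hypothetical, and the sketch ignores the extrapolation and counter-reset handling that Prometheus's `rate()` performs. It only shows that dividing the increase of the sum counter by the increase of the count counter yields the mean over the window.

```python
# Latencies from the table above, converted to seconds, keyed by timestamp.
samples = {0: 0.015, 1: 0.020, 2: 0.018, 5: 0.022, 6: 0.030, 8: 0.005}


def counters_at(t):
    """Return (duration_seconds_total, duration_count_total) as of time t.

    Hypothetical helper: models two monotonically increasing counters.
    """
    observed = [v for ts, v in samples.items() if ts <= t]
    return sum(observed), len(observed)


def average_latency(start, end):
    """Mean latency of observations in (start, end], from counter deltas."""
    sum_start, count_start = counters_at(start)
    sum_end, count_end = counters_at(end)
    delta_count = count_end - count_start
    if delta_count == 0:
        return None  # no observations in the window
    return (sum_end - sum_start) / delta_count


# Whole data set: window (-1, 8] covers all six observations.
overall = average_latency(-1, 8)
# Only the last three observations: window (2, 8].
recent = average_latency(2, 8)
```

The two counters carry enough information for the mean, but nothing about the spread or the extremes of individual observations.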

But what about max, min, standard deviation?

I know I could do this with a Gauge and max_over_time, avg_over_time, and min_over_time, but this would lose fidelity whenever requests arrive more often than my scrape interval, because only the value present at scrape time is recorded.
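A small Python sketch of the fidelity problem with a Gauge, using the sample data above. The scrape times (every 5 time units) and the helper `scrape_gauge` are assumptions for illustration only. The gauge is modelled as holding the most recent latency, and each scrape sees just that one value.

```python
# (timestamp, latency in ms) pairs from the table above.
samples = [(0, 15), (1, 20), (2, 18), (5, 22), (6, 30), (8, 5)]


def scrape_gauge(scrape_times):
    """Return the gauge value seen at each scrape.

    Hypothetical model: the gauge always holds the latest observation,
    so anything overwritten between two scrapes is never recorded.
    """
    scraped = []
    for t in scrape_times:
        seen = [value for ts, value in samples if ts <= t]
        if seen:
            scraped.append(seen[-1])
    return scraped


# Assumed scrape interval of 5 time units.
scraped = scrape_gauge([0, 5, 10])

true_values = [value for _, value in samples]
true_max = max(true_values)                     # what actually happened
scraped_max = max(scraped)                      # what max_over_time could see
true_avg = sum(true_values) / len(true_values)
scraped_avg = sum(scraped) / len(scraped)       # what avg_over_time could see
```

Under these assumptions the 30ms observation at timestamp 6 is overwritten before the next scrape, so the scraped maximum and average both differ from the true values.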

Is the correct approach here to use a Histogram or a Summary? The median would then be roughly p50 (assuming good bucket choices), but how would I calculate the min, max, or standard deviation? Is this possible using Prometheus?