I'm writing code to find median orders of tournaments, given a tournament T with n vertices, a median order is an ordering of the vertices of T such that it maximices the number of edges pointing in the 'increasing' direction with respect to the ordering.

In particular if the vertex set of T is {0,...,n-1}, the following integer problem (maximizing over the set of permutations) yields an optimal answer to the problem where Q is also a boolean n by n matrix.

I've implemented a linealization of this problem, noting that Q is a permutation, the following python code works, but my computer can't handle graphs that are as small as 10 vertices, where i would expect to have fast answers, is there any relatively easy way to speed up this computation?.

import numpy as np

from pyscipopt import Model,quicksum

from networkx.algorithms.tournament import random_tournament as rt

import math

# Some utilities to define the adjacency matrix of an oriented graph

def adjacency_matrix(t,order):

n = len(order)

adj_t = np.zeros((n,n))

for e in t.edges:

adj_t[order.index(e[0]),order.index(e[1])] = 1

return adj_t

# Random tournaments to instanciate the problem

def random_tournament(n):

r_t = rt(n)

adj_t = adjacency_matrix(r_t,list(range(n)))

return r_t, adj_t

###############################################################

############# PySCIPOpt optimization Routine ##################

###############################################################

n = 5 # some arbitrary size parameter

t,adj_t = random_tournament(n)

model = Model()

p,w,r = {},{},{}

# Defining model variables and weights

for k in range(n):

for l in range(n):

p[k,l] = model.addVar(vtype='B')

for i in range(n):

for j in range(i,n):

r[i,k,l,j] = model.addVar(vtype='C')

w[i,k,l,j] = adj_t[k][l]

for i in range(n):

# Forcing p to be a permutation

model.addCons(quicksum(p[s,i] for s in range(n))==1)

model.addCons(quicksum(p[i,s] for s in range(n))==1)

for k in range(n):

for j in range(i,n):

for l in range(n):

# Setting r[i,k,l,j] = min(p[i,k],p[l,j])

model.addCons(r[i,k,l,j] <= p[k,i])

model.addCons(r[i,k,l,j] <= p[l,j])

# Set the objective function

model.setObjective(quicksum(r[i,k,l,j]*w[i,k,l,j] for i in range(n) for j in range(i,n) for k in range(n) for l in range(n)), "maximize")

model.data = p,r

model.optimize()

sol = model.getBestSol()

# Print the solution on a readable format

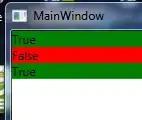

Q = np.array([math.floor(model.getVal(model.data[0][key])) for key in model.data[0].keys()]).reshape([n,n])

print(f'\nOptimization ended with status {model.getStatus()} in {"{:.2f}".format(end_optimization-end_setup)}s, with {model.getObjVal()} increasing edges and optimal solution:')

print('\n',Q)

order = [int(x) for x in list(np.matmul(Q.T,np.array(range(n))))]

new_adj_t = adjacency_matrix(t,order)

print(f'\nwhich induces the ordering:\n\n {order}')

print(f'\nand induces the following adjacency matrix: \n\n {new_adj_t}')

Right now I've run it for n=5 taking between 5 and 20 seconds, and have ran it succesfully for small integers 6,7 with not much change in time needed.

For n=10, on the other hand, it has been running for around an hour with no solution yet, I suppose the linearization having O(n**4) variables hurts, but I don't understand why it blows up so fast. Is this normal? How would a better implementation be in case there is one?.