I am loading Delta tables into a Delta Lake on S3. The table schema is `product_code, date, quantity, crt_dt`.

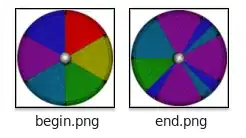

I receive 6 months of forecast data each month. For example, if the current month is May 2022, I get quantities for May, June, July, August, September, and October. The issue I am facing is that the data gets duplicated every month. I want only a single row per `product_code` and `date` in the Delta table, keeping the one with the most recent `crt_dt`, as shown in the screenshot below. Can anyone help me with the solution I should implement?
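The rule being asked for ("keep one row per product and forecast month: the one with the newest `crt_dt`") can be modelled in plain Python as a minimal sketch. The sample rows are hypothetical, and each forecast month is represented by its first day. In PySpark the same idea is usually expressed with a window function: `row_number()` over `Window.partitionBy("product_code", "date").orderBy(col("crt_dt").desc())`, keeping only rows where the row number is 1.

```python
from datetime import date

# Hypothetical sample rows: (product_code, date, quantity, crt_dt).
# The May load and the June load both contain a June forecast.
rows = [
    ("P1", date(2022, 5, 1), 100, date(2022, 5, 1)),
    ("P1", date(2022, 6, 1), 100, date(2022, 5, 1)),
    ("P1", date(2022, 6, 1), 120, date(2022, 6, 1)),
    ("P1", date(2022, 7, 1), 120, date(2022, 6, 1)),
]


def latest_per_key(rows):
    """Keep one row per (product_code, date): the one with the newest crt_dt."""
    best = {}
    for row in rows:
        key = (row[0], row[1])
        # Replace the stored row only if this one was created more recently.
        if key not in best or row[3] > best[key][3]:
            best[key] = row
    # Sort by product_code, then forecast date, for a stable result.
    return sorted(best.values())


deduped = latest_per_key(rows)
```

Here the two June rows collapse into the one created on 2022-06-01, leaving three rows.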

The data is partitioned by `crt_dt`.
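If each monthly load is simply appended, it lands in a new `crt_dt` partition next to the previous month's overlapping rows, so nothing replaces them. An upsert keyed on `(product_code, date)` avoids that. Delta Lake supports this through MERGE; with the delta-spark Python API it would look roughly like `DeltaTable.forPath(spark, path).alias("t").merge(updates.alias("s"), "t.product_code = s.product_code AND t.date = s.date").whenMatchedUpdateAll(condition="s.crt_dt > t.crt_dt").whenNotMatchedInsertAll().execute()`, where `path` and `updates` are placeholders. The plain-Python sketch below only models those merge semantics; `merge_forecast` and `monthly_load` are hypothetical helpers, and the data is made up.

```python
from datetime import date


def merge_forecast(table, new_load):
    """Upsert new_load into table, keyed by (product_code, date).

    Models a MERGE that updates a matched row only when the incoming
    crt_dt is newer, and inserts rows whose key is not present yet.
    """
    merged = {(r[0], r[1]): r for r in table}
    for row in new_load:
        key = (row[0], row[1])
        current = merged.get(key)
        if current is None or row[3] > current[3]:
            merged[key] = row
    return sorted(merged.values())


def monthly_load(product_code, start_month, quantity, crt_dt):
    """Hypothetical 6-month forecast load starting at start_month (2022 only)."""
    return [
        (product_code, date(2022, start_month + i, 1), quantity, crt_dt)
        for i in range(6)
    ]


may_load = monthly_load("P1", 5, 100, date(2022, 5, 1))    # May..Oct
june_load = monthly_load("P1", 6, 120, date(2022, 6, 1))   # Jun..Nov
table = merge_forecast(may_load, june_load)
```

After the June load the table holds seven rows (May through November): May keeps its original row, while June through October are replaced by the newer values instead of being duplicated.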

Thanks!